Cleaning up papers

|

Before Width: | Height: | Size: 14 KiB After Width: | Height: | Size: 14 KiB |

|

Before Width: | Height: | Size: 20 KiB After Width: | Height: | Size: 20 KiB |

|

Before Width: | Height: | Size: 27 KiB After Width: | Height: | Size: 27 KiB |

|

Before Width: | Height: | Size: 28 KiB After Width: | Height: | Size: 28 KiB |

3

papers/other_casper/.gitignore

vendored

@ -1,3 +0,0 @@

|

||||

discouragement.aux

|

||||

discouragement.log

|

||||

x.log

|

||||

|

Before Width: | Height: | Size: 16 KiB |

@ -1,11 +0,0 @@

|

||||

\relax

|

||||

\@writefile{toc}{\contentsline {section}{\numberline {1}Principles}{1}}

|

||||

\@writefile{toc}{\contentsline {section}{\numberline {2}Introduction, Protocol I}{2}}

|

||||

\@writefile{toc}{\contentsline {section}{\numberline {3}Proof Sketch of Safety and Plausible Liveness}{4}}

|

||||

\@writefile{toc}{\contentsline {section}{\numberline {4}Fork Choice Rule}{6}}

|

||||

\@writefile{toc}{\contentsline {section}{\numberline {5}Dynamic Validator Sets}{7}}

|

||||

\@writefile{toc}{\contentsline {section}{\numberline {6}Mass Crash Failure Recovery}{9}}

|

||||

\@writefile{toc}{\contentsline {section}{\numberline {7}Conclusions}{10}}

|

||||

\bibstyle{abbrv}

|

||||

\bibdata{main}

|

||||

\@writefile{toc}{\contentsline {section}{\numberline {8}References}{11}}

|

||||

@ -1,260 +0,0 @@

|

||||

This is pdfTeX, Version 3.14159265-2.6-1.40.16 (TeX Live 2015/Debian) (preloaded format=pdflatex 2017.6.27) 20 AUG 2017 04:48

|

||||

entering extended mode

|

||||

restricted \write18 enabled.

|

||||

%&-line parsing enabled.

|

||||

**casper_basic_structure.tex

|

||||

(./casper_basic_structure.tex

|

||||

LaTeX2e <2016/02/01>

|

||||

Babel <3.9q> and hyphenation patterns for 3 language(s) loaded.

|

||||

(/usr/share/texlive/texmf-dist/tex/latex/base/article.cls

|

||||

Document Class: article 2014/09/29 v1.4h Standard LaTeX document class

|

||||

(/usr/share/texlive/texmf-dist/tex/latex/base/size12.clo

|

||||

File: size12.clo 2014/09/29 v1.4h Standard LaTeX file (size option)

|

||||

)

|

||||

\c@part=\count79

|

||||

\c@section=\count80

|

||||

\c@subsection=\count81

|

||||

\c@subsubsection=\count82

|

||||

\c@paragraph=\count83

|

||||

\c@subparagraph=\count84

|

||||

\c@figure=\count85

|

||||

\c@table=\count86

|

||||

\abovecaptionskip=\skip41

|

||||

\belowcaptionskip=\skip42

|

||||

\bibindent=\dimen102

|

||||

)

|

||||

(/usr/share/texlive/texmf-dist/tex/latex/graphics/graphicx.sty

|

||||

Package: graphicx 2014/10/28 v1.0g Enhanced LaTeX Graphics (DPC,SPQR)

|

||||

|

||||

(/usr/share/texlive/texmf-dist/tex/latex/graphics/keyval.sty

|

||||

Package: keyval 2014/10/28 v1.15 key=value parser (DPC)

|

||||

\KV@toks@=\toks14

|

||||

)

|

||||

(/usr/share/texlive/texmf-dist/tex/latex/graphics/graphics.sty

|

||||

Package: graphics 2016/01/03 v1.0q Standard LaTeX Graphics (DPC,SPQR)

|

||||

|

||||

(/usr/share/texlive/texmf-dist/tex/latex/graphics/trig.sty

|

||||

Package: trig 2016/01/03 v1.10 sin cos tan (DPC)

|

||||

)

|

||||

(/usr/share/texlive/texmf-dist/tex/latex/latexconfig/graphics.cfg

|

||||

File: graphics.cfg 2010/04/23 v1.9 graphics configuration of TeX Live

|

||||

)

|

||||

Package graphics Info: Driver file: pdftex.def on input line 95.

|

||||

|

||||

(/usr/share/texlive/texmf-dist/tex/latex/pdftex-def/pdftex.def

|

||||

File: pdftex.def 2011/05/27 v0.06d Graphics/color for pdfTeX

|

||||

|

||||

(/usr/share/texlive/texmf-dist/tex/generic/oberdiek/infwarerr.sty

|

||||

Package: infwarerr 2010/04/08 v1.3 Providing info/warning/error messages (HO)

|

||||

)

|

||||

(/usr/share/texlive/texmf-dist/tex/generic/oberdiek/ltxcmds.sty

|

||||

Package: ltxcmds 2011/11/09 v1.22 LaTeX kernel commands for general use (HO)

|

||||

)

|

||||

\Gread@gobject=\count87

|

||||

))

|

||||

\Gin@req@height=\dimen103

|

||||

\Gin@req@width=\dimen104

|

||||

)

|

||||

(/usr/share/texlive/texmf-dist/tex/latex/tools/tabularx.sty

|

||||

Package: tabularx 2014/10/28 v2.10 `tabularx' package (DPC)

|

||||

|

||||

(/usr/share/texlive/texmf-dist/tex/latex/tools/array.sty

|

||||

Package: array 2014/10/28 v2.4c Tabular extension package (FMi)

|

||||

\col@sep=\dimen105

|

||||

\extrarowheight=\dimen106

|

||||

\NC@list=\toks15

|

||||

\extratabsurround=\skip43

|

||||

\backup@length=\skip44

|

||||

)

|

||||

\TX@col@width=\dimen107

|

||||

\TX@old@table=\dimen108

|

||||

\TX@old@col=\dimen109

|

||||

\TX@target=\dimen110

|

||||

\TX@delta=\dimen111

|

||||

\TX@cols=\count88

|

||||

\TX@ftn=\toks16

|

||||

)

|

||||

\c@definition=\count89

|

||||

|

||||

(./casper_basic_structure.aux)

|

||||

\openout1 = `casper_basic_structure.aux'.

|

||||

|

||||

LaTeX Font Info: Checking defaults for OML/cmm/m/it on input line 13.

|

||||

LaTeX Font Info: ... okay on input line 13.

|

||||

LaTeX Font Info: Checking defaults for T1/cmr/m/n on input line 13.

|

||||

LaTeX Font Info: ... okay on input line 13.

|

||||

LaTeX Font Info: Checking defaults for OT1/cmr/m/n on input line 13.

|

||||

LaTeX Font Info: ... okay on input line 13.

|

||||

LaTeX Font Info: Checking defaults for OMS/cmsy/m/n on input line 13.

|

||||

LaTeX Font Info: ... okay on input line 13.

|

||||

LaTeX Font Info: Checking defaults for OMX/cmex/m/n on input line 13.

|

||||

LaTeX Font Info: ... okay on input line 13.

|

||||

LaTeX Font Info: Checking defaults for U/cmr/m/n on input line 13.

|

||||

LaTeX Font Info: ... okay on input line 13.

|

||||

|

||||

(/usr/share/texlive/texmf-dist/tex/context/base/supp-pdf.mkii

|

||||

[Loading MPS to PDF converter (version 2006.09.02).]

|

||||

\scratchcounter=\count90

|

||||

\scratchdimen=\dimen112

|

||||

\scratchbox=\box26

|

||||

\nofMPsegments=\count91

|

||||

\nofMParguments=\count92

|

||||

\everyMPshowfont=\toks17

|

||||

\MPscratchCnt=\count93

|

||||

\MPscratchDim=\dimen113

|

||||

\MPnumerator=\count94

|

||||

\makeMPintoPDFobject=\count95

|

||||

\everyMPtoPDFconversion=\toks18

|

||||

) (/usr/share/texlive/texmf-dist/tex/generic/oberdiek/pdftexcmds.sty

|

||||

Package: pdftexcmds 2011/11/29 v0.20 Utility functions of pdfTeX for LuaTeX (HO

|

||||

)

|

||||

|

||||

(/usr/share/texlive/texmf-dist/tex/generic/oberdiek/ifluatex.sty

|

||||

Package: ifluatex 2010/03/01 v1.3 Provides the ifluatex switch (HO)

|

||||

Package ifluatex Info: LuaTeX not detected.

|

||||

)

|

||||

(/usr/share/texlive/texmf-dist/tex/generic/oberdiek/ifpdf.sty

|

||||

Package: ifpdf 2011/01/30 v2.3 Provides the ifpdf switch (HO)

|

||||

Package ifpdf Info: pdfTeX in PDF mode is detected.

|

||||

)

|

||||

Package pdftexcmds Info: LuaTeX not detected.

|

||||

Package pdftexcmds Info: \pdf@primitive is available.

|

||||

Package pdftexcmds Info: \pdf@ifprimitive is available.

|

||||

Package pdftexcmds Info: \pdfdraftmode found.

|

||||

)

|

||||

(/usr/share/texlive/texmf-dist/tex/latex/oberdiek/epstopdf-base.sty

|

||||

Package: epstopdf-base 2010/02/09 v2.5 Base part for package epstopdf

|

||||

|

||||

(/usr/share/texlive/texmf-dist/tex/latex/oberdiek/grfext.sty

|

||||

Package: grfext 2010/08/19 v1.1 Manage graphics extensions (HO)

|

||||

|

||||

(/usr/share/texlive/texmf-dist/tex/generic/oberdiek/kvdefinekeys.sty

|

||||

Package: kvdefinekeys 2011/04/07 v1.3 Define keys (HO)

|

||||

))

|

||||

(/usr/share/texlive/texmf-dist/tex/latex/oberdiek/kvoptions.sty

|

||||

Package: kvoptions 2011/06/30 v3.11 Key value format for package options (HO)

|

||||

|

||||

(/usr/share/texlive/texmf-dist/tex/generic/oberdiek/kvsetkeys.sty

|

||||

Package: kvsetkeys 2012/04/25 v1.16 Key value parser (HO)

|

||||

|

||||

(/usr/share/texlive/texmf-dist/tex/generic/oberdiek/etexcmds.sty

|

||||

Package: etexcmds 2011/02/16 v1.5 Avoid name clashes with e-TeX commands (HO)

|

||||

Package etexcmds Info: Could not find \expanded.

|

||||

(etexcmds) That can mean that you are not using pdfTeX 1.50 or

|

||||

(etexcmds) that some package has redefined \expanded.

|

||||

(etexcmds) In the latter case, load this package earlier.

|

||||

)))

|

||||

Package grfext Info: Graphics extension search list:

|

||||

(grfext) [.png,.pdf,.jpg,.mps,.jpeg,.jbig2,.jb2,.PNG,.PDF,.JPG,.JPE

|

||||

G,.JBIG2,.JB2,.eps]

|

||||

(grfext) \AppendGraphicsExtensions on input line 452.

|

||||

|

||||

(/usr/share/texlive/texmf-dist/tex/latex/latexconfig/epstopdf-sys.cfg

|

||||

File: epstopdf-sys.cfg 2010/07/13 v1.3 Configuration of (r)epstopdf for TeX Liv

|

||||

e

|

||||

))

|

||||

LaTeX Font Info: External font `cmex10' loaded for size

|

||||

(Font) <14.4> on input line 14.

|

||||

LaTeX Font Info: External font `cmex10' loaded for size

|

||||

(Font) <7> on input line 14.

|

||||

LaTeX Font Info: External font `cmex10' loaded for size

|

||||

(Font) <12> on input line 22.

|

||||

LaTeX Font Info: External font `cmex10' loaded for size

|

||||

(Font) <8> on input line 22.

|

||||

LaTeX Font Info: External font `cmex10' loaded for size

|

||||

(Font) <6> on input line 22.

|

||||

|

||||

[1

|

||||

|

||||

{/var/lib/texmf/fonts/map/pdftex/updmap/pdftex.map}]

|

||||

<prepares_commits.png, id=14, 1201.288pt x 346.896pt>

|

||||

File: prepares_commits.png Graphic file (type png)

|

||||

|

||||

<use prepares_commits.png>

|

||||

Package pdftex.def Info: prepares_commits.png used on input line 30.

|

||||

(pdftex.def) Requested size: 401.50146pt x 115.93695pt.

|

||||

|

||||

Overfull \hbox (29.12628pt too wide) in paragraph at lines 30--31

|

||||

[][]

|

||||

[]

|

||||

|

||||

LaTeX Font Info: Try loading font information for OMS+cmr on input line 37.

|

||||

(/usr/share/texlive/texmf-dist/tex/latex/base/omscmr.fd

|

||||

File: omscmr.fd 2014/09/29 v2.5h Standard LaTeX font definitions

|

||||

)

|

||||

LaTeX Font Info: Font shape `OMS/cmr/m/n' in size <12> not available

|

||||

(Font) Font shape `OMS/cmsy/m/n' tried instead on input line 37.

|

||||

[2 <./prepares_commits.png>] [3]

|

||||

Overfull \hbox (2.44592pt too wide) in paragraph at lines 57--58

|

||||

[]\OT1/cmr/m/n/12 This gives sub-stan-tial gains in im-ple-men-ta-tion sim-plic

|

||||

-ity, be-cause it means

|

||||

[]

|

||||

|

||||

|

||||

Overfull \hbox (1.48117pt too wide) in paragraph at lines 62--63

|

||||

[]\OT1/cmr/bx/n/12 NO[]DBL[]PREPARE\OT1/cmr/m/n/12 : a val-ida-tor can-not pre-

|

||||

pare two dif-fer-ent check-

|

||||

[]

|

||||

|

||||

[4] <conflicting_checkpoints.png, id=28, 634.37pt x 403.5075pt>

|

||||

File: conflicting_checkpoints.png Graphic file (type png)

|

||||

|

||||

<use conflicting_checkpoints.png>

|

||||

Package pdftex.def Info: conflicting_checkpoints.png used on input line 76.

|

||||

(pdftex.def) Requested size: 301.1261pt x 191.5449pt.

|

||||

[5 <./conflicting_checkpoints.png>]

|

||||

<fork_choice_rule.jpeg, id=33, 707.64375pt x 442.65375pt>

|

||||

File: fork_choice_rule.jpeg Graphic file (type jpg)

|

||||

|

||||

<use fork_choice_rule.jpeg>

|

||||

Package pdftex.def Info: fork_choice_rule.jpeg used on input line 88.

|

||||

(pdftex.def) Requested size: 401.50146pt x 251.1535pt.

|

||||

|

||||

Overfull \hbox (29.12628pt too wide) in paragraph at lines 88--89

|

||||

[][]

|

||||

[]

|

||||

|

||||

[6 <./fork_choice_rule.jpeg>] [7]

|

||||

<validator_set_misalignment.png, id=42, 422.57875pt x 283.0575pt>

|

||||

File: validator_set_misalignment.png Graphic file (type png)

|

||||

|

||||

<use validator_set_misalignment.png>

|

||||

Package pdftex.def Info: validator_set_misalignment.png used on input line 117.

|

||||

|

||||

(pdftex.def) Requested size: 250.93842pt x 168.09088pt.

|

||||

[8]

|

||||

<CommitsSync.png, id=46, 422.57875pt x 373.395pt>

|

||||

File: CommitsSync.png Graphic file (type png)

|

||||

<use CommitsSync.png>

|

||||

Package pdftex.def Info: CommitsSync.png used on input line 127.

|

||||

(pdftex.def) Requested size: 301.1261pt x 266.08658pt.

|

||||

|

||||

[9 <./validator_set_misalignment.png (PNG copy)>] [10 <./CommitsSync.png>]

|

||||

No file casper_basic_structure.bbl.

|

||||

[11] (./casper_basic_structure.aux) )

|

||||

Here is how much of TeX's memory you used:

|

||||

1637 strings out of 494953

|

||||

23600 string characters out of 6180977

|

||||

80571 words of memory out of 5000000

|

||||

4915 multiletter control sequences out of 15000+600000

|

||||

9456 words of font info for 33 fonts, out of 8000000 for 9000

|

||||

14 hyphenation exceptions out of 8191

|

||||

37i,6n,23p,1062b,181s stack positions out of 5000i,500n,10000p,200000b,80000s

|

||||

</usr/share/texlive/texmf-dist/fonts/type1

|

||||

/public/amsfonts/cm/cmbx10.pfb></usr/share/texlive/texmf-dist/fonts/type1/publi

|

||||

c/amsfonts/cm/cmbx12.pfb></usr/share/texlive/texmf-dist/fonts/type1/public/amsf

|

||||

onts/cm/cmmi12.pfb></usr/share/texlive/texmf-dist/fonts/type1/public/amsfonts/c

|

||||

m/cmmi8.pfb></usr/share/texlive/texmf-dist/fonts/type1/public/amsfonts/cm/cmr10

|

||||

.pfb></usr/share/texlive/texmf-dist/fonts/type1/public/amsfonts/cm/cmr12.pfb></

|

||||

usr/share/texlive/texmf-dist/fonts/type1/public/amsfonts/cm/cmr17.pfb></usr/sha

|

||||

re/texlive/texmf-dist/fonts/type1/public/amsfonts/cm/cmr8.pfb></usr/share/texli

|

||||

ve/texmf-dist/fonts/type1/public/amsfonts/cm/cmsy10.pfb></usr/share/texlive/tex

|

||||

mf-dist/fonts/type1/public/amsfonts/cm/cmsy8.pfb></usr/share/texlive/texmf-dist

|

||||

/fonts/type1/public/amsfonts/cm/cmti12.pfb>

|

||||

Output written on casper_basic_structure.pdf (11 pages, 360532 bytes).

|

||||

PDF statistics:

|

||||

93 PDF objects out of 1000 (max. 8388607)

|

||||

60 compressed objects within 1 object stream

|

||||

0 named destinations out of 1000 (max. 500000)

|

||||

26 words of extra memory for PDF output out of 10000 (max. 10000000)

|

||||

|

||||

@ -1,144 +0,0 @@

|

||||

\title{Casper the Friendly Finality Gadget: Basic Structure}

|

||||

\author{

|

||||

Vitalik Buterin \\

|

||||

Ethereum Foundation

|

||||

}

|

||||

\date{\today}

|

||||

|

||||

\documentclass[12pt]{article}

|

||||

\usepackage{graphicx}

|

||||

\usepackage{tabularx}

|

||||

\newtheorem{definition}{Definition}

|

||||

|

||||

\begin{document}

|

||||

\maketitle

|

||||

\begin{abstract}

|

||||

We give an introduction to the consensus algorithm details of Casper: the Friendly Finality Gadget, as an overlay on an existing proof of work blockchain such as Ethereum. Byzantine fault tolerance analysis is included, but economic incentive analysis is out of scope.

|

||||

\end{abstract}

|

||||

|

||||

\section{Principles}

|

||||

Casper the Friendly Finality Gadget is designed as an overlay that must be built on top of some kind of ``proposal mechanism'' - a mechanism which ``proposes'' blocks which the Casper mechanism can then set in stone by ``finalizing'' them. The Casper mechanism depends on the proposal mechanism for liveness, but not safety; that is, if the proposal mechanism is entirely corrupted and controlled by adversaries, then the adversaries can prevent Casper from finalizing any blocks, but cannot cause a safety failure in Casper; that is, they cannot force Casper to finalize two conflicting blocks.

|

||||

|

||||

The base mechanism is heavily inspired by partially synchronous systems such as Tendermine [cite] and PBFT [cite], and thus has $\frac{1}{3}$ Byzantine fault tolerance and is safe under asynchrony and dependent on the proposal mechanism for liveness. We later introduce a modification which increases Byzantine fault tolerance to $\frac{1}{2}$, with the proviso that attackers with size $\frac{1}{3} < x < \frac{1}{2}$ can delay new blocks being finalized by some period of time $D$ (think $D \approx$ 3 weeks), at the cost of a ``tradeoff synchrony assumption'' where fault tolerance decreases as network latency goes up, decreasing to potentially zero when network latency reaches $D$.

|

||||

|

||||

In the Casper Phase 1 implementation for Ethereum, the ``proposal mechanism'' is the existing proof of work chain, modified to have a greatly reduced block reward because the chain no longer relies as heavily on proof of work for security, and we describe how the Casper mechanism, and fork choice rule, can be ``overlaid'' onto the proof of work mechanism in order to add Casper's guarantees.

|

||||

|

||||

\section{Introduction, Protocol I}

|

||||

|

||||

In the Casper protocol, there exists a set of validators, and in each \textit{epoch} (see below) validators have the ability to send two kinds of messages: $$[PREPARE, epoch, hash, epoch_{source}, hash_{source}]$$ and $$[COMMIT, epoch, hash]$$

|

||||

|

||||

\includegraphics[width=400px]{prepares_commits.png}

|

||||

|

||||

An \textit{epoch} is a period of 100 blocks; epoch $n$ begins at block $n * 100$ and ends at block $n * 100 + 99$. A \textit{checkpoint for epoch $n$} is a block with number $n * 100 - 1$; in a smoothly running blockchain there will usually be only one checkpoint per epoch, but due to natural network latency or deliberate attacks there may be multiple competing checkpoints during some epochs. The \textit{parent checkpoint} of a checkpoint is the 100th ancestor of the checkpoint block, and an \textit{ancestor checkpoint} of a checkpoint is either the parent checkpoint, or an ancestor checkpoint of the parent checkpoint.

|

||||

|

||||

We define the \textit{ancestry hash} of a checkpoint as follows:

|

||||

|

||||

\begin{itemize}

|

||||

\item The ancestry hash of the implied ``genesis checkpoint'' of epoch 0 is thirty two zero bytes.

|

||||

\item The ancestry hash of any other checkpoint is the keccak256 hash of the ancestry hash of its parent checkpoint concatenated with the hash of the checkpoint.

|

||||

\end{itemize}

|

||||

|

||||

Ancestry hashes thus form a direct hash chain, and otherwise have a one-to-one correspondence with checkpoint hashes.

|

||||

|

||||

During epoch $n$, validators are expected to send prepare and commit messages specifying $n$ as their $epoch$, and the ancestry hash of some checkpoint for epoch $n$ as their $hash$. Prepare messages are expected to specify as $hash_{source}$ a checkpoint for any previous epoch which is an ancestor of the $hash$, and which is \textit{justified} (see below), and the $epoch_{source}$ is expected to be the epoch of that checkpoint.

|

||||

|

||||

Each validator has a \textit{deposit size}; when a validator joins their deposit size is equal to the number of coins that they deposited, and from there on each validator's deposit size rises and falls as the validator receives rewards and penalties. For the rest of this paper, when we say ``$\frac{2}{3}$ of validators", we are referring to a \textit{deposit-weighted} fraction; that is, a set of validators whose combined deposit size equals to at least $\frac{2}{3}$ of the total deposit size of the entire set of validators. We also use ``$\frac{2}{3}$ commits" as shorthand for ``commits from $\frac{2}{3}$ of validators". At first, we will consider the set of validators, and their deposit sizes, static, but in later sections we will introduce the notion of validator set changes.

|

||||

|

||||

If, during an epoch $e$, for some specific ancestry hash $h$, for any specific ($epoch_{source}, hash_{source}$ pair), there exist $\frac{2}{3}$ prepares of the form $$[PREPARE, e, h, epoch_{source}, hash_{source}]$$, then $h$ is considered \textit{justified}. If $\frac{2}{3}$ commits are sent of the form $$[COMMIT, e, h]$$ then $h$ is considered \textit{finalized}.

|

||||

|

||||

We also add the following requirements:

|

||||

|

||||

\begin{itemize}

|

||||

\item For a checkpoint to be finalized, it must be justified.

|

||||

\item For a checkpoint to be justified, the $hash_{source}$ used to justify it must itself be justified.

|

||||

\item Prepare and commit messages are only accepted as part of blocks; that is, for a client to see $\frac{2}{3}$ commits of some hash, they must receive a block such that in the chain terminating at that block $\frac{2}{3}$ commits for that hash have been included and processed.

|

||||

\end{itemize}

|

||||

|

||||

This gives substantial gains in implementation simplicity, because it means that we can now have a fork choice rule where the ``score'' of a block only depends on the block and its children, putting it into a similar category as more traditional PoW-based fork choice rules such as the longest chain rule and GHOST. However, this fork choice rule is also \textit{finality-bearing}: there exists a ``finality'' mechanism that has the property that (i) the fork choice rule always prefers finalized blocks over non-finalized competing blocks, and (ii) it is impossible for two incompatible checkpoints to be finalized unless at least $\frac{1}{3}$ of the validators violated a \textit{slashing condition} (see below).

|

||||

|

||||

There are two slashing conditions:

|

||||

|

||||

\begin{enumerate}

|

||||

\item \textbf{NO\_DBL\_PREPARE}: a validator cannot prepare two different checkpoints for the same epoch.

|

||||

\item \textbf{PREPARE\_COMMIT\_CONSISTENCY}: if a validator has made a commit with epoch $n$, they cannot make a prepare with $epoch > n$ and $epoch_{source} < n$.

|

||||

\end{enumerate}

|

||||

|

||||

Earlier versions of Casper had four slashing conditions, but we can reduce to two because of the three modifications above; they ensure that blocks will not register commits or prepares that violate the other two conditions.

|

||||

|

||||

\section{Proof Sketch of Safety and Plausible Liveness}

|

||||

|

||||

We give a proof sketch of two properties of this scheme: \textit{accountable safety} and \textit{plausible liveness}. Accountable safety means that two conflicting checkpoints cannot be finalized unless at least $\frac{1}{3}$ of validators violate a slashing condition. Honest validators will not violate slashing conditions, so this implies the usual Byzantine fault tolerance safety property, but expressing this in terms of slashing conditions means that we are actually proving a stronger claim: if two conflicting checkpoints get finalized, then at least $\frac{1}{3}$ of validators were malicious, \textit{and we know whom to blame, and so we can maximally penalize them in order to make such faults expensive}.

|

||||

|

||||

Plausible liveness means that it is always possible for $\frac{2}{3}$ of honest validators to finalize a new checkpoint, regardless of what previous events took place.

|

||||

|

||||

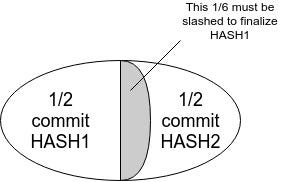

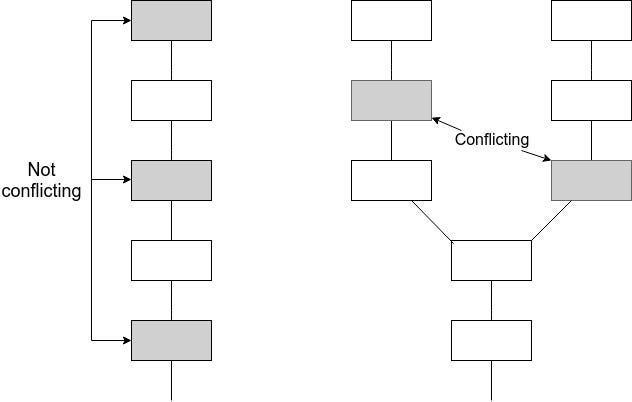

Suppose that two conflicting checkpoints $A$ (epoch $e_A$) and $B$ (epoch $e_B$) are finalized.

|

||||

|

||||

\includegraphics[width=300px]{conflicting_checkpoints.png}

|

||||

|

||||

This implies $\frac{2}{3}$ commits and $\frac{2}{3}$ prepares in epochs $e_A$ and $e_B$. In the trivial case where $e_A = e_B$, this implies that some intersection of $\frac{1}{3}$ of validators must have violated \textbf{NO\_DBL\_PREPARE}. In other cases, there must exist two chains $e_A > e_A^1 > e_A^2 > ... > G$ and $e_B > e_B^1 > e_B^2 > ... > G$ of justified checkpoints, both terminating at the genesis. Suppose without loss of generality that $e_A > e_B$. Then, there must be some $e_A^i$ that either $e_A^i = e_B$ or $e_A^i > e_B > e_A^{i+1}$. In the first case, since $A^i$ and $B$ both have $\frac{2}{3}$ prepares, at least $\frac{1}{3}$ of validators violated \textbf{NO\_DBL\_PREPARE}. Otherwise, $B$ has $\frac{2}{3}$ commits and there exist $\frac{2}{3}$ prepares with $epoch > B$ and $epoch_{source} < B$, so at least $\frac{1}{3}$ of validators violated \textbf{PREPARE\_COMMIT\_CONSISTENCY}. This proves accountable safety.

|

||||

|

||||

Now, we prove plausible liveness. Suppose that all existing validators have sent some sequence of prepare and commit messages. Let $M$ with epoch $e_M$ be the highest-epoch checkpoint that was justified. Honest validators have not committed on any block which is not justified. Hence, neither slashing condition stops them from making prepares on a child of $M$, using $e_M$ as $epoch_{source}$, and then committing this child.

|

||||

|

||||

\section{Fork Choice Rule}

|

||||

|

||||

The mechanism described above ensures \textit{plausible liveness}; however, it by itself does not ensure \textit{actual liveness} - that is, while the mechanism cannot get stuck in the strict sense, it could still enter a scenario where the proposal mechanism (i.e. the proof of work chain) gets into a state where it never ends up creating a checkpoint that could get finalized.

|

||||

|

||||

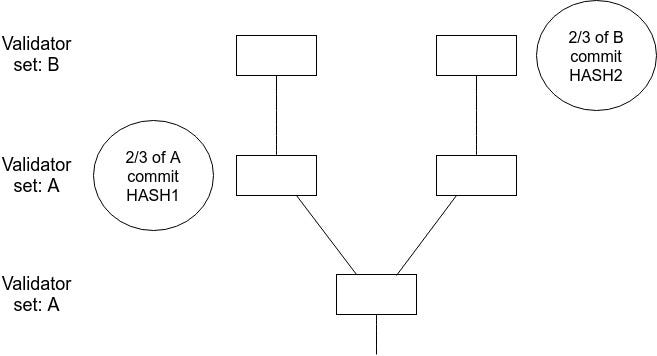

Here is one possible example:

|

||||

|

||||

\includegraphics[width=400px]{fork_choice_rule.jpeg}

|

||||

|

||||

In this case, $HASH1$ or any descendant thereof cannot be finalized without slashing $\frac{1}{6}$ of validators. However, miners on a proof of work chain would interpret $HASH1$ as the head and forever keep mining descendants of it, ignoring the chain based on $HASH0'$ which actually could get finalized.

|

||||

|

||||

In fact, when \textit{any} checkpoint gets $k > \frac{1}{3}$ commits, no conflicting checkpoint can get finalized without $k - \frac{1}{3}$ of validators getting slashed. This necessitates modifying the fork choice rule used by participants in the underlying proposal mechanism (as well as users and validators): instead of blindly following a longest-chain rule, there needs to be an overriding rule that (i) finalized checkpoints are favored, and (ii) when there are no further finalized checkpoints, checkpoints with more (justified) commits are favored.

|

||||

|

||||

One complete description of such a rule would be:

|

||||

|

||||

\begin{enumerate}

|

||||

\item Start with HEAD equal to the genesis of the chain.

|

||||

\item Select the descendant checkpoint of HEAD with the most commits (only justified checkpoints are admissible)

|

||||

\item Repeat (2) until no descendant with commits exists.

|

||||

\item Choose the longest proof of work chain from there.

|

||||

\end{enumerate}

|

||||

|

||||

The commit-following part of this rule can be viewed in some ways as mirroring the "greegy heaviest observed subtree" (GHOST) rule that has been proposed for proof of work chains [cite]. The symmetry is as follows. In GHOST, a node starts with the head at the genesis, then begins to move forward down the chain, and if it encounters a block with multiple children then it chooses the child that has the larger quantity of work built on top of it (including the child block itself and its descendants).

|

||||

|

||||

In this algorithm, we follow a similar approach, except we repeatedly seek the child that comes the closest to achieving finality. Commits on a descendant are implicitly commits on all of its ancestors, and so if a given descendant of a given block has more commits than any other descendant, then we know that all children along the chain from the head to this descendant are closer to finality than any of their siblings; hence, looking for the \textit{descendant} with the most commits and not just the \textit{child} replicates the GHOST principle most faithfully. Finalizing a checkpoint requires $\frac{2}{3}$ commits within a \textit{single} epoch, and so we do not try to sum up commits across epochs and instead simply take the maximum.

|

||||

|

||||

This rule ensures that if there is a checkpoint such that no conflicting checkpoint can be finalized without at least some validators violating slashing conditions, then this is the checkpoint that will be viewed as the ``head'' and thus that validators will try to commit on.

|

||||

|

||||

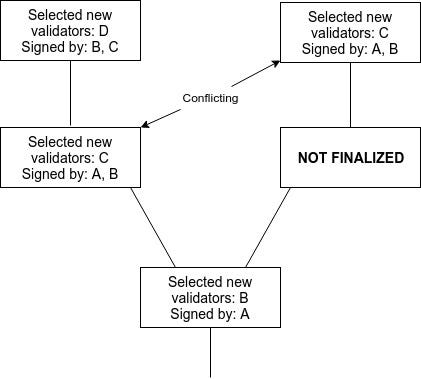

\section{Dynamic Validator Sets}

|

||||

|

||||

In an open protocol, the validator set needs to be able to change; old validators need to be able to withdraw, and new validators need to be able to enter. To accomplish this end, we define a variable kept track of in the state called the \textit{dynasty} counter. When a user sends a ``deposit'' transaction to become a validator, if this transaction is included in dynasty $n$, then the validator will be \textit{inducted} in dynasty $n+2$. The dynasty counter is incremented when the chain detects that the checkpoint of the current epoch that is part of its own history has been finalized (that is, the checkpoint of epoch $e$ must be finalized during epoch $e$, and the chain must learn about this before epoch $e$ ends). In simpler terms, when a user sends a ``deposit'' transaction, they need to wait for the transaction to be finalized, and then they need to wait again for that epoch to be finalized; after this, they become part of the validator set. We call such a validator's \textit{start dynasty} $n+2$.

|

||||

|

||||

For a validator to leave, they must send a ``withdraw'' message. If their withdraw message gets included during dynasty $n$, the validator similarly leaves the validator set during dynasty $n+2$; we call $n+2$ their \textit{end dynasty}. When a validator withdraws, their deposit is locked for four months before they can take their money out; if they are caught violating a slashing condition within that time then their deposit is forfeited.

|

||||

|

||||

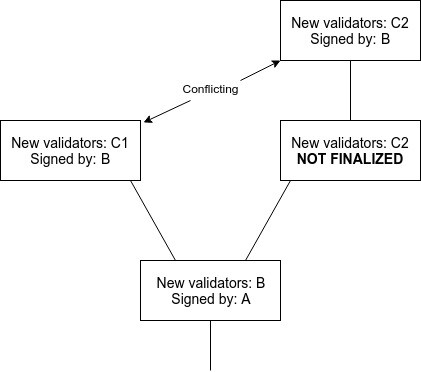

For a checkpoint to be justified, it must be prepared by a set of validators which contains (i) at least $\frac{2}{3}$ of the current dynasty (that is, validators with $startDynasty \le curDynasty < endDynasty$), and (ii) at least $\frac{2}{3}$ of the previous dynasty (that is, validators with $startDynasty \le curDynasty - 1 < endDynasty$. Finalization with commits works similarly. The current and previous dynasties will usually mostly overlap; but in cases where they substantially diverge this ``stitching'' mechanism ensures that dynasty divergences do not lead to situations where a finality reversion or other failure can happen because different messages are signed by different validator sets and so equivocation is avoided.

|

||||

|

||||

\includegraphics[width=250px]{validator_set_misalignment.png}

|

||||

|

||||

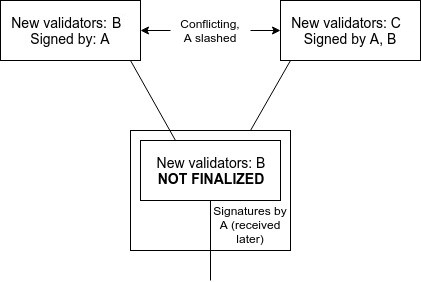

\section{Mass Crash Failure Recovery}

|

||||

|

||||

Suppose that more than one third of validators crash-fail at the same time; that is, they either are no longer connected to the network due to a network partition, or their computers fail, or they do this as a malicious attack. Then, no checkpoint will be able to get finalized.

|

||||

|

||||

We can recover from this by instituting a rule that validators who do not prepare or commit for a long time start to see their deposit sizes decrease (depending on the desired economic incentives this can be either a compulsory partial withdrawal or an outright confiscation), until eventually their deposit sizes decrease low enough that the validators that \textit{are} preparing and committing are once again a $\frac{2}{3}$ supermajority.

|

||||

|

||||

Note that this does introduce the possibility of two conflicting checkpoints being finalized, with validators only losing money on one of the two checkpoints:

|

||||

|

||||

\includegraphics[width=300px]{CommitsSync.png}

|

||||

|

||||

If the goal is simply to achieve maximally close to 50\% fault tolerance, then clients should simply favor the finalized checkpoint that they received earlier. However, if clients are also interested in defeating 51\% censorship attacks, then they may want to at least sometimes choose the minority chain. All forms of ``51\% attacks'' can thus be resolved fairly cleanly via ``user-activated soft forks'' that reject what would normally be the dominant chain. Particularly, note that finalizing even one block on the dominant chain precludes the attacking validators from preparing on the minority chain because of \textbf{PREPARE\_COMMIT\_CONSISTENCY}, at least until their balances decrease to the point where the minority can commit, so such a fork would also serve the function of costing the majority attacker a very large portion of their deposits.

|

||||

|

||||

\section{Conclusions}

|

||||

|

||||

This introduces the basic workings of Casper the Friendly Finality Gadget's prepare and commit mechanism and fork choice rule, in the context of Byzantine fault tolerance analysis. Separate papers will serve the role of explaining and analyzing incentives inside of Casper, and the different ways that they can be parametrized and the consequences of these paramtrizations.

|

||||

|

||||

\section{References}

|

||||

\bibliographystyle{abbrv}

|

||||

\bibliography{main}

|

||||

\begin{itemize}

|

||||

\item Aviv Zohar and Yonatan Sompolinsky, ``Fast Money Grows on Trees, not Chains'': https://eprint.iacr.org/2013/881.pdf

|

||||

\item Jae Kwon, ``Tendermint'': http://tendermint.org/tendermint.pdf

|

||||

\item Miguel Castro and Barbara Liskov, ``Practical Byzantine Fault Tolerance'': http://pmg.csail.mit.edu/papers/osdi99.pdf

|

||||

\end{itemize}

|

||||

|

||||

\end{document}

|

||||

@ -1,12 +0,0 @@

|

||||

\relax

|

||||

\@writefile{toc}{\contentsline {section}{\numberline {1}Introduction}{1}}

|

||||

\@writefile{toc}{\contentsline {section}{\numberline {2}Rewards and Penalties}{3}}

|

||||

\@writefile{toc}{\contentsline {section}{\numberline {3}Claims}{5}}

|

||||

\@writefile{toc}{\contentsline {section}{\numberline {4}Individual choice analysis}{5}}

|

||||

\@writefile{toc}{\contentsline {section}{\numberline {5}Collective choice model}{6}}

|

||||

\@writefile{toc}{\contentsline {section}{\numberline {6}Griefing factor analysis}{7}}

|

||||

\@writefile{toc}{\contentsline {section}{\numberline {7}Pools}{10}}

|

||||

\@writefile{toc}{\contentsline {section}{\numberline {8}Conclusions}{11}}

|

||||

\bibstyle{abbrv}

|

||||

\bibdata{main}

|

||||

\@writefile{toc}{\contentsline {section}{\numberline {9}References}{12}}

|

||||

@ -1,242 +0,0 @@

|

||||

This is pdfTeX, Version 3.14159265-2.6-1.40.16 (TeX Live 2015/Debian) (preloaded format=pdflatex 2017.6.27) 25 JUL 2017 00:09

|

||||

entering extended mode

|

||||

restricted \write18 enabled.

|

||||

%&-line parsing enabled.

|

||||

**casper_economics_basic.tex

|

||||

(./casper_economics_basic.tex

|

||||

LaTeX2e <2016/02/01>

|

||||

Babel <3.9q> and hyphenation patterns for 3 language(s) loaded.

|

||||

(/usr/share/texlive/texmf-dist/tex/latex/base/article.cls

|

||||

Document Class: article 2014/09/29 v1.4h Standard LaTeX document class

|

||||

(/usr/share/texlive/texmf-dist/tex/latex/base/size12.clo

|

||||

File: size12.clo 2014/09/29 v1.4h Standard LaTeX file (size option)

|

||||

)

|

||||

\c@part=\count79

|

||||

\c@section=\count80

|

||||

\c@subsection=\count81

|

||||

\c@subsubsection=\count82

|

||||

\c@paragraph=\count83

|

||||

\c@subparagraph=\count84

|

||||

\c@figure=\count85

|

||||

\c@table=\count86

|

||||

\abovecaptionskip=\skip41

|

||||

\belowcaptionskip=\skip42

|

||||

\bibindent=\dimen102

|

||||

)

|

||||

(/usr/share/texlive/texmf-dist/tex/latex/graphics/graphicx.sty

|

||||

Package: graphicx 2014/10/28 v1.0g Enhanced LaTeX Graphics (DPC,SPQR)

|

||||

|

||||

(/usr/share/texlive/texmf-dist/tex/latex/graphics/keyval.sty

|

||||

Package: keyval 2014/10/28 v1.15 key=value parser (DPC)

|

||||

\KV@toks@=\toks14

|

||||

)

|

||||

(/usr/share/texlive/texmf-dist/tex/latex/graphics/graphics.sty

|

||||

Package: graphics 2016/01/03 v1.0q Standard LaTeX Graphics (DPC,SPQR)

|

||||

|

||||

(/usr/share/texlive/texmf-dist/tex/latex/graphics/trig.sty

|

||||

Package: trig 2016/01/03 v1.10 sin cos tan (DPC)

|

||||

)

|

||||

(/usr/share/texlive/texmf-dist/tex/latex/latexconfig/graphics.cfg

|

||||

File: graphics.cfg 2010/04/23 v1.9 graphics configuration of TeX Live

|

||||

)

|

||||

Package graphics Info: Driver file: pdftex.def on input line 95.

|

||||

|

||||

(/usr/share/texlive/texmf-dist/tex/latex/pdftex-def/pdftex.def

|

||||

File: pdftex.def 2011/05/27 v0.06d Graphics/color for pdfTeX

|

||||

|

||||

(/usr/share/texlive/texmf-dist/tex/generic/oberdiek/infwarerr.sty

|

||||

Package: infwarerr 2010/04/08 v1.3 Providing info/warning/error messages (HO)

|

||||

)

|

||||

(/usr/share/texlive/texmf-dist/tex/generic/oberdiek/ltxcmds.sty

|

||||

Package: ltxcmds 2011/11/09 v1.22 LaTeX kernel commands for general use (HO)

|

||||

)

|

||||

\Gread@gobject=\count87

|

||||

))

|

||||

\Gin@req@height=\dimen103

|

||||

\Gin@req@width=\dimen104

|

||||

)

|

||||

(/usr/share/texlive/texmf-dist/tex/latex/tools/tabularx.sty

|

||||

Package: tabularx 2014/10/28 v2.10 `tabularx' package (DPC)

|

||||

|

||||

(/usr/share/texlive/texmf-dist/tex/latex/tools/array.sty

|

||||

Package: array 2014/10/28 v2.4c Tabular extension package (FMi)

|

||||

\col@sep=\dimen105

|

||||

\extrarowheight=\dimen106

|

||||

\NC@list=\toks15

|

||||

\extratabsurround=\skip43

|

||||

\backup@length=\skip44

|

||||

)

|

||||

\TX@col@width=\dimen107

|

||||

\TX@old@table=\dimen108

|

||||

\TX@old@col=\dimen109

|

||||

\TX@target=\dimen110

|

||||

\TX@delta=\dimen111

|

||||

\TX@cols=\count88

|

||||

\TX@ftn=\toks16

|

||||

)

|

||||

\c@definition=\count89

|

||||

|

||||

(./casper_economics_basic.aux)

|

||||

\openout1 = `casper_economics_basic.aux'.

|

||||

|

||||

LaTeX Font Info: Checking defaults for OML/cmm/m/it on input line 13.

|

||||

LaTeX Font Info: ... okay on input line 13.

|

||||

LaTeX Font Info: Checking defaults for T1/cmr/m/n on input line 13.

|

||||

LaTeX Font Info: ... okay on input line 13.

|

||||

LaTeX Font Info: Checking defaults for OT1/cmr/m/n on input line 13.

|

||||

LaTeX Font Info: ... okay on input line 13.

|

||||

LaTeX Font Info: Checking defaults for OMS/cmsy/m/n on input line 13.

|

||||

LaTeX Font Info: ... okay on input line 13.

|

||||

LaTeX Font Info: Checking defaults for OMX/cmex/m/n on input line 13.

|

||||

LaTeX Font Info: ... okay on input line 13.

|

||||

LaTeX Font Info: Checking defaults for U/cmr/m/n on input line 13.

|

||||

LaTeX Font Info: ... okay on input line 13.

|

||||

|

||||

(/usr/share/texlive/texmf-dist/tex/context/base/supp-pdf.mkii

|

||||

[Loading MPS to PDF converter (version 2006.09.02).]

|

||||

\scratchcounter=\count90

|

||||

\scratchdimen=\dimen112

|

||||

\scratchbox=\box26

|

||||

\nofMPsegments=\count91

|

||||

\nofMParguments=\count92

|

||||

\everyMPshowfont=\toks17

|

||||

\MPscratchCnt=\count93

|

||||

\MPscratchDim=\dimen113

|

||||

\MPnumerator=\count94

|

||||

\makeMPintoPDFobject=\count95

|

||||

\everyMPtoPDFconversion=\toks18

|

||||

) (/usr/share/texlive/texmf-dist/tex/generic/oberdiek/pdftexcmds.sty

|

||||

Package: pdftexcmds 2011/11/29 v0.20 Utility functions of pdfTeX for LuaTeX (HO

|

||||

)

|

||||

|

||||

(/usr/share/texlive/texmf-dist/tex/generic/oberdiek/ifluatex.sty

|

||||

Package: ifluatex 2010/03/01 v1.3 Provides the ifluatex switch (HO)

|

||||

Package ifluatex Info: LuaTeX not detected.

|

||||

)

|

||||

(/usr/share/texlive/texmf-dist/tex/generic/oberdiek/ifpdf.sty

|

||||

Package: ifpdf 2011/01/30 v2.3 Provides the ifpdf switch (HO)

|

||||

Package ifpdf Info: pdfTeX in PDF mode is detected.

|

||||

)

|

||||

Package pdftexcmds Info: LuaTeX not detected.

|

||||

Package pdftexcmds Info: \pdf@primitive is available.

|

||||

Package pdftexcmds Info: \pdf@ifprimitive is available.

|

||||

Package pdftexcmds Info: \pdfdraftmode found.

|

||||

)

|

||||

(/usr/share/texlive/texmf-dist/tex/latex/oberdiek/epstopdf-base.sty

|

||||

Package: epstopdf-base 2010/02/09 v2.5 Base part for package epstopdf

|

||||

|

||||

(/usr/share/texlive/texmf-dist/tex/latex/oberdiek/grfext.sty

|

||||

Package: grfext 2010/08/19 v1.1 Manage graphics extensions (HO)

|

||||

|

||||

(/usr/share/texlive/texmf-dist/tex/generic/oberdiek/kvdefinekeys.sty

|

||||

Package: kvdefinekeys 2011/04/07 v1.3 Define keys (HO)

|

||||

))

|

||||

(/usr/share/texlive/texmf-dist/tex/latex/oberdiek/kvoptions.sty

|

||||

Package: kvoptions 2011/06/30 v3.11 Key value format for package options (HO)

|

||||

|

||||

(/usr/share/texlive/texmf-dist/tex/generic/oberdiek/kvsetkeys.sty

|

||||

Package: kvsetkeys 2012/04/25 v1.16 Key value parser (HO)

|

||||

|

||||

(/usr/share/texlive/texmf-dist/tex/generic/oberdiek/etexcmds.sty

|

||||

Package: etexcmds 2011/02/16 v1.5 Avoid name clashes with e-TeX commands (HO)

|

||||

Package etexcmds Info: Could not find \expanded.

|

||||

(etexcmds) That can mean that you are not using pdfTeX 1.50 or

|

||||

(etexcmds) that some package has redefined \expanded.

|

||||

(etexcmds) In the latter case, load this package earlier.

|

||||

)))

|

||||

Package grfext Info: Graphics extension search list:

|

||||

(grfext) [.png,.pdf,.jpg,.mps,.jpeg,.jbig2,.jb2,.PNG,.PDF,.JPG,.JPE

|

||||

G,.JBIG2,.JB2,.eps]

|

||||

(grfext) \AppendGraphicsExtensions on input line 452.

|

||||

|

||||

(/usr/share/texlive/texmf-dist/tex/latex/latexconfig/epstopdf-sys.cfg

|

||||

File: epstopdf-sys.cfg 2010/07/13 v1.3 Configuration of (r)epstopdf for TeX Liv

|

||||

e

|

||||

))

|

||||

LaTeX Font Info: External font `cmex10' loaded for size

|

||||

(Font) <14.4> on input line 14.

|

||||

LaTeX Font Info: External font `cmex10' loaded for size

|

||||

(Font) <7> on input line 14.

|

||||

LaTeX Font Info: External font `cmex10' loaded for size

|

||||

(Font) <10.95> on input line 16.

|

||||

LaTeX Font Info: External font `cmex10' loaded for size

|

||||

(Font) <8> on input line 16.

|

||||

LaTeX Font Info: External font `cmex10' loaded for size

|

||||

(Font) <6> on input line 16.

|

||||

|

||||

Overfull \hbox (0.3523pt too wide) in paragraph at lines 16--17

|

||||

[]\OT1/cmr/m/n/10.95 We give an in-tro-duc-tion to the in-cen-tives in the Casp

|

||||

er the Friendly

|

||||

[]

|

||||

|

||||

LaTeX Font Info: External font `cmex10' loaded for size

|

||||

(Font) <12> on input line 20.

|

||||

[1

|

||||

|

||||

{/var/lib/texmf/fonts/map/pdftex/updmap/pdftex.map}]

|

||||

LaTeX Font Info: Try loading font information for OMS+cmr on input line 29.

|

||||

|

||||

(/usr/share/texlive/texmf-dist/tex/latex/base/omscmr.fd

|

||||

File: omscmr.fd 2014/09/29 v2.5h Standard LaTeX font definitions

|

||||

)

|

||||

LaTeX Font Info: Font shape `OMS/cmr/m/n' in size <12> not available

|

||||

(Font) Font shape `OMS/cmsy/m/n' tried instead on input line 29.

|

||||

[2] [3] [4]

|

||||

Overfull \hbox (10.7546pt too wide) in paragraph at lines 80--81

|

||||

[]\OT1/cmr/m/n/12 Hence, the PRE-PARE[]COMMIT[]CONSISTENCY slash-ing con-di-tio

|

||||

n poses

|

||||

[]

|

||||

|

||||

|

||||

Overfull \hbox (5.39354pt too wide) in paragraph at lines 82--83

|

||||

[]\OT1/cmr/m/n/12 We are as-sum-ing that there are $[]$ pre-pares for $(\OML/cm

|

||||

m/m/it/12 e; H; epoch[]; hash[]\OT1/cmr/m/n/12 )$,

|

||||

[]

|

||||

|

||||

[5] [6]

|

||||

Overfull \hbox (15.85455pt too wide) in paragraph at lines 105--105

|

||||

[]\OT1/cmr/m/n/12 Now, we need to show that, for any given to-tal de-posit size

|

||||

, $[]$

|

||||

[]

|

||||

|

||||

[7]

|

||||

Underfull \hbox (badness 3291) in paragraph at lines 147--147

|

||||

[]|\OT1/cmr/m/n/12 Amount lost by at-

|

||||

[]

|

||||

|

||||

|

||||

Overfull \hbox (17.62482pt too wide) in paragraph at lines 147--148

|

||||

[][]

|

||||

[]

|

||||

|

||||

[8] [9] [10] [11]

|

||||

No file casper_economics_basic.bbl.

|

||||

[12] (./casper_economics_basic.aux) )

|

||||

Here is how much of TeX's memory you used:

|

||||

1613 strings out of 494953

|

||||

22986 string characters out of 6180977

|

||||

84568 words of memory out of 5000000

|

||||

4898 multiletter control sequences out of 15000+600000

|

||||

10072 words of font info for 35 fonts, out of 8000000 for 9000

|

||||

14 hyphenation exceptions out of 8191

|

||||

37i,10n,23p,1059b,181s stack positions out of 5000i,500n,10000p,200000b,80000s

|

||||

</usr/share/texlive/texmf-dist/fonts/type1

|

||||

/public/amsfonts/cm/cmbx10.pfb></usr/share/texlive/texmf-dist/fonts/type1/publi

|

||||

c/amsfonts/cm/cmbx12.pfb></usr/share/texlive/texmf-dist/fonts/type1/public/amsf

|

||||

onts/cm/cmex10.pfb></usr/share/texlive/texmf-dist/fonts/type1/public/amsfonts/c

|

||||

m/cmmi12.pfb></usr/share/texlive/texmf-dist/fonts/type1/public/amsfonts/cm/cmmi

|

||||

6.pfb></usr/share/texlive/texmf-dist/fonts/type1/public/amsfonts/cm/cmmi8.pfb><

|

||||

/usr/share/texlive/texmf-dist/fonts/type1/public/amsfonts/cm/cmr10.pfb></usr/sh

|

||||

are/texlive/texmf-dist/fonts/type1/public/amsfonts/cm/cmr12.pfb></usr/share/tex

|

||||

live/texmf-dist/fonts/type1/public/amsfonts/cm/cmr17.pfb></usr/share/texlive/te

|

||||

xmf-dist/fonts/type1/public/amsfonts/cm/cmr8.pfb></usr/share/texlive/texmf-dist

|

||||

/fonts/type1/public/amsfonts/cm/cmsy10.pfb></usr/share/texlive/texmf-dist/fonts

|

||||

/type1/public/amsfonts/cm/cmsy8.pfb></usr/share/texlive/texmf-dist/fonts/type1/

|

||||

public/amsfonts/cm/cmti12.pfb>

|

||||

Output written on casper_economics_basic.pdf (12 pages, 181449 bytes).

|

||||

PDF statistics:

|

||||

95 PDF objects out of 1000 (max. 8388607)

|

||||

67 compressed objects within 1 object stream

|

||||

0 named destinations out of 1000 (max. 500000)

|

||||

1 words of extra memory for PDF output out of 10000 (max. 10000000)

|

||||

|

||||

@ -1,201 +0,0 @@

|

||||

\title{Incentives in Casper the Friendly Finality Gadget}

|

||||

\author{

|

||||

Vitalik Buterin \\

|

||||

Ethereum Foundation

|

||||

}

|

||||

\date{\today}

|

||||

|

||||

\documentclass[12pt]{article}

|

||||

\usepackage{graphicx}

|

||||

\usepackage{tabularx}

|

||||

\newtheorem{definition}{Definition}

|

||||

|

||||

\begin{document}

|

||||

\maketitle

|

||||

\begin{abstract}

|

||||

We give an introduction to the incentives in the Casper the Friendly Finality Gadget protocol, and show how the protocol behaves under individual choice analysis, collective choice analysis and griefing factor analysis. We define a ``protocol utility function'' that represents the protocol's view of how well it is being executed, and show the connection between the incentive structure that we present and the utility function. We show that (i) the protocol is a Nash equilibrium assuming any individual validator's deposit makes up less than $\frac{1}{3}$ of the total, (ii) in a collective choice model, where all validators are controlled by one actor, harming protocol utility hurts the cartel's revenue, and there is an upper bound on the ratio between the reduction in protocol utility from an attack and the cost to the attacker, and (iii) the griefing factor can be bounded above by $1$, though we will prefer an alternative model that bounds the griefing factor at $2$ in exchange for other benefits.

|

||||

\end{abstract}

|

||||

|

||||

\section{Introduction}

|

||||

In the Casper protocol, there is a set of validators, and in each epoch validators have the ability to send two kinds of messages: $$[PREPARE, epoch, hash, epoch_{source}, hash_{source}]$$ and $$[COMMIT, epoch, hash]$$

|

||||

|

||||

Each validator has a \textit{deposit size}; when a validator joins their deposit size is equal to the number of coins that they deposited, and from there on each validator's deposit size rises and falls as the validator receives rewards and penalties. For the rest of this paper, when we say ``$\frac{2}{3}$ of validators", we are referring to a \textit{deposit-weighted} fraction; that is, a set of validators whose combined deposit size equals to at least $\frac{2}{3}$ of the total deposit size of the entire set of validators. We also use ``$\frac{2}{3}$ commits" as shorthand for ``commits from $\frac{2}{3}$ of validators".

|

||||

|

||||

If, during an epoch $e$, for some specific checkpoint hash $h$, $\frac{2}{3}$ prepares are sent of the form $$[PREPARE, e, h, epoch_{source}, hash_{source}]$$ with some specific $epoch_{source}$ and some specific $hash_{source}$, then $h$ is considered \textit{justified}. If $\frac{2}{3}$ commits are sent of the form $$[COMMIT, e, h]$$ then $h$ is considered \textit{finalized}. The $hash$ is the block hash of the block at the start of the epoch, so a $hash$ being finalized means that that block, and all of its ancestors, are also finalized. An ``ideal execution'' of the protocol is one where, during every epoch, every validator prepares and commits some block hash at the start of that epoch, specifying the same $epoch_{source}$ and $hash_{source}$. We want to try to create incentives to encourage this ideal execution.

|

||||

|

||||

Possible deviations from this ideal execution that we want to minimize or avoid include:

|

||||

|

||||

\begin{itemize}

|

||||

\item Any of the four slashing conditions get violated.

|

||||

\item During some epoch, we do not get $\frac{2}{3}$ commits for the $hash$ that received $\frac{2}{3}$ prepares.

|

||||

\item During some epoch, we do not get $\frac{2}{3}$ prepares for the same \\ $(h, hash_{source}, epoch_{source})$ combination.

|

||||

\end{itemize}

|

||||

|

||||

From within the view of the blockchain, we only see the blockchain's own history, including messages that were passed in. In a history that contains some blockhash $H$, our strategy will be to reward validators who prepared and committed $H$, and not reward prepares or commits for any hash $H\prime \ne H$. The blockchain state will also keep track of the most recent hash in its own history that received $\frac{2}{3}$ prepares, and only reward prepares whose $epoch_{source}$ and $hash_{source}$ point to this hash. These two techniques will help to ``coordinate'' validators toward preparing and committing a single hash with a single source, as required by the protocol.

|

||||

|

||||

\section{Rewards and Penalties}

|

||||

|

||||

We define the following constants and functions:

|

||||

|

||||

\begin{itemize}

|

||||

\item $BIR(D)$: determines the base interest rate paid to each validator, taking as an input the current total quantity of deposited ether.

|

||||

\item $BP(D, e, LFE)$: determines the ``base penalty constant'' - a value expressed as a percentage rate that is used as the ``scaling factor'' for all penalties; for example, if at the current time $BP(...) = 0.001$, then a penalty of size $1.5$ means a validator loses $0.15\%$ of their deposit. Takes as inputs the current total quantity of deposited ether $D$, the current epoch $e$ and the last finalized epoch $LFE$. Note that in a ``perfect'' protocol execution, $e - LFE$ always equals $1$.

|

||||

\item $NCP$ (``non-commit penalty''): the penalty for not committing, if there was a justified hash which the validator \textit{could} have committed

|

||||

\item $NCCP(\alpha)$ (``non-commit collective penalty''): if $\alpha$ of validators are not seen to have committed during an epoch, and that epoch had a justified hash so any validator \textit{could} have committed, then all validators are charged a penalty proportional to $NCCP(\alpha)$. Must be monotonically increasing, and satisfy $NCCP(0) = 0$.

|

||||

\item $NPP$ (``non-prepare penalty''): the penalty for not preparing

|

||||

\item $NPCP(\alpha)$ (``non-prepare collective penalty''): if $\alpha$ of validators are not seen to have prepared during an epoch, then all validators are charged a penalty proportional to $NCCP(\alpha)$. Must be monotonically increasing, and satisfy $NPCP(0) = 0$.

|

||||

\end{itemize}

|

||||

|

||||

Note that preparing and committing does not guarantee that the validator will not incur $NPP$ and $NCP$; it could be the case that either because of very high network latency or a malicious majority censorship attack, the prepares and commits are not included into the blockchain in time and so the incentivization mechanism does not know about them. For $NPCP$ and $NCCP$ similarly, the $\alpha$ input is the portion of validators whose prepares and commits are \textit{included}, not the portion of validators who \textit{tried to send} prepares and commits.

|

||||

|

||||

When we talk about preparing and committing the ``correct value", we are referring to the $hash$ and $epoch_{source}$ and $hash_{source}$ recommended by the protocol state, as described above.

|

||||

|

||||

We now define the following reward and penalty schedule, which runs every epoch.

|

||||

|

||||

\begin{itemize}

|

||||

\item Let $D$ be the current total quantity of deposited ether, and $e - LFE$ be the number of epochs since the last finalized epoch.

|

||||

\item All validators get a reward of $BIR(D)$ every epoch (eg. if $BIR(D) = 0.0002$ then a validator with $10000$ coins deposited gets a per-epoch reward of $2$ coins)

|

||||

\item If the protocol does not see a prepare from a given validator during the given epoch, they are penalized $BP(D, e, LFE) * NPP$

|

||||

\item If the protocol saw prepares from portion $p_p$ validators during the given epoch, \textit{every} validator is penalized $BP(D, e, LFE) * NPCP(1 - p_p)$

|

||||

\item If the protocol does not see a commit from a given validator during the given epoch, and a prepare was justified so a commit \textit{could have} been seen, they are penalized $BP(D, E, LFE) * NCP$.

|

||||

\item If the protocol saw commits from portion $p_c$ validators during the given epoch, and a prepare was justified so any validator \textit{could have} committed, then \textit{every} validator is penalized $BP(D, e, LFE) * NCCP(1 - p_p)$

|

||||

\end{itemize}

|

||||

|

||||

This is the entirety of the incentivization structure, though without functions and constants defined; we will define these later, attempting as much as possible to derive the specific values from desired objectives and first principles. For now we will only say that all constants are positive and all functions output non-negative values for any input within their range. Additionally, $NPCP(0) = NCCP(0) = 0$ and $NPCP$ and $NCCP$ must both be nondecreasing.

|

||||

|

||||

\section{Claims}

|

||||

|

||||

We seek to prove the following:

|

||||

|

||||

\begin{itemize}

|

||||

\item If each validator has less than $\frac{1}{3}$ of total deposits, then preparing and committing the value suggested by the proposal mechanism is a Nash equilibrium.

|

||||

\item Even if all validators collude, the ratio between the harm incurred by the protocol and the penalties paid by validators is bounded above by some constant. Note that this requires a measure of ``harm incurred by the protocol"; we will discuss this in more detail later.

|

||||

\item The \textit{griefing factor}, the ratio between penalties incurred by validators who are victims of an attack and penalties incurred by the validators that carried out the attack, can be bounded above by 2, even in the case where the attacker holds a majority of the total deposits.

|

||||

\end{itemize}

|

||||

|

||||

\section{Individual choice analysis}

|

||||

|

||||

The individual choice analysis is simple. Suppose that the proposal mechanism selects a hash $H$ to prepare for epoch $e$, and the Casper incentivization mechanism specifies some $epoch_{source}$ and $hash_{source}$. Because, as per definition of the Nash equilibrium, we are assuming that all validators except for one particular validator that we are analyzing is following the equilibrium strategy, we know that $\ge \frac{2}{3}$ of validators prepared in the last epoch and so $epoch_{source} = e - 1$, and $hash_{source}$ is the direct parent of $H$.

|

||||

|

||||

Hence, the PREPARE\_COMMIT\_CONSISTENCY slashing condition poses no barrier to preparing $(e, H, epoch_{source}, hash_{source})$. Since, in epoch $e$, we are assuming that all other validators \textit{will} prepare these values and then commit $H$, we know $H$ will be the hash in the main chain, and so a validator will pay a penalty proportional to $NPP$ (plus a further penalty from their marginal contribution to the $NPCP$ penalty) if they do not prepare $(e, H, epoch_{source}, hash_{source})$, and they can avoid this penalty if they do prepare these values.

|

||||

|

||||

We are assuming that there are $\frac{2}{3}$ prepares for $(e, H, epoch_{source}, hash_{source})$, and so PREPARE\_REQ poses no barrier to committing $H$. Committing $H$ allows a validator to avoid $NCP$ (as well as their marginal contribution to $NCCP$). Hence, there is an economic incentive to commit $H$. This shows that, if the proposal mechanism succeeds at presenting to validators a single primary choice, preparing and committing the value selected by the proposal mechanism is a Nash equilibrium.

|

||||

|

||||

\section{Collective choice model}

|

||||

|

||||

To model the protocol in a collective-choice context, we will first define a \textit{protocol utility function}. The protocol utility function defines ``how well the protocol execution is doing". The protocol utility function cannot be derived mathematically; it can only be conceived and justified intuitively.

|

||||

|

||||

Our protocol utility function is:

|

||||

|

||||

$$U = \sum_{e = 0}^{e_c} -log_2(e - LFE(e)) - M * F$$

|

||||

|

||||

Where:

|

||||

|

||||

\begin{itemize}

|

||||

\item $e$ is the current epoch, going from epoch $0$ to $e_c$, the current epoch

|

||||

\item $LFE(e)$ is the last finalized epoch before $e$

|

||||

\item $M$ is a very large constant

|

||||

\item $F$ is 1 if a safety failure has taken place, otherwise 0

|

||||

\end{itemize}

|

||||

|

||||

The second term in the function is easy to justify: safety failures are very bad. The first term is trickier. To see how the first term works, consider the case where every epoch such that $e$ mod $N$, for some $N$, is zero is finalized and other epochs are not. The average total over each $N$-epoch slice will be roughly $\sum_{i=1}^N -log_2(i) \approx N * (log_2(N) - \frac{1}{ln(2)})$. Hence, the utility per block will be roughly $-log_2(N)$. This basically states that a blockchain with some finality time $N$ has utility roughly $-log(N)$, or in other words \textit{increasing the finality time of a blockchain by a constant factor causes a constant loss of utility}. The utility difference between 1 minute finality and 2 minute finality is the same as the utility difference between 1 hour finality and 2 hour finality.

|

||||

|

||||

This can be justified in two ways. First, one can intuitively argue that a user's psychological estimation of the discomfort of waiting for finality roughly matches this kind of logarithmic utility schedule. At the very least, it should be clear that the difference between 3600 second finality and 3610 second finality feels much more negligible than the difference between 1 second finality and 11 second finality, and so the claim that the difference between 10 second finality and 20 second finality is similar to the difference between 1 hour finality and 2 hour finality should not seem farfetched. Second, one can look at various blockchain use cases, and see that they are roughly logarithmically uniformly distributed along the range of finality times between around 200 miliseconds (``Starcraft on the blockchain") and one week (land registries and the like).

|

||||

|

||||

Now, we need to show that, for any given total deposit size, $\frac{loss\_to\_protocol\_utility}{validator\_penalties}$ is bounded. There are two ways to reduce protocol utility: (i) cause a safety failure, and (ii) have $\ge \frac{1}{3}$ of validators not prepare or not commit to prevent finality. In the first case, validators lose a large amount of deposits for violating the slashing conditions. In the second case, in a chain that has not been finalized for $e - LFE$ epochs, the penalty to attackers is $$min(NPP * \frac{1}{3} + NPCP(\frac{1}{3}), NCP * \frac{1}{3} + NCCP(\frac{1}{3})) * BP(D, e, LFE)$$

|

||||

|

||||

To enforce a ratio between validator losses and loss to protocol utility, we set:

|

||||

|

||||

$$BP(D, e, LFE) = \frac{k}{D^p} + k_2 * floor(log_2(e - LFE))$$

|

||||

|

||||

The first term serves to take profits for non-committers away; the second term creates a penalty which is proportional to the loss in protocol utility.

|

||||

|

||||

This connection between validator losses and loss to protocol utility has several consequences. First, it establishes that harming the protocolexecution is costly, and harming the protocol execution more costs more. Second, it establishes that the protocol approximates the properties of a \textit{potential game} [cite]. Potential games have the property that Nash equilibria of the game correspond to local maxima of the potential function (in this case, protocol utility), and so correctly following the protocol is a Nash equilibrium even in cases where a coalition has more than $\frac{1}{3}$ of the total validators. Here, the protocol utility function is not a perfect potential function, as it does not always take into account changes in the \textit{quantity} of prepares and commits whereas validator rewards do, but it does come close.

|

||||

|

||||

\section{Griefing factor analysis}

|

||||

|

||||

Griefing factor analysis is important because it provides one way to quanitfy the risk to honest validators. In general, if all validators are honest, and if network latency stays below the length of an epoch, then validators face zero risk beyond the usual risks of losing or accidentally divulging access to their private keys. In the case where malicious validators exist, however, they can interfere in the protocol in ways that cause harm to both themselves and honest validators.

|

||||

|

||||

We can approximately define the "griefing factor" as follows:

|

||||

|

||||

\begin{definition}

|

||||

A strategy used by a coalition in a given mechanism exhibits a \textit{griefing factor} $B$ if it can be shown that this strategy imposes a loss of $B * x$ to those outside the coalition at the cost of a loss of $x$ to those inside the coalition. If all strategies that cause deviations from some given baseline state exhibit griefing factors less than or equal to some bound B, then we call B a \textit{griefing factor bound}.

|

||||

\end{definition}

|

||||

|

||||

A strategy that imposes a loss to outsiders either at no cost to a coalition, or to the benefit of a coalition, is said to have a griefing factor of infinity. Proof of work blockchains have a griefing factor bound of infinity because a 51\% coalition can double its revenue by refusing to include blocks from other participants and waiting for difficulty adjustment to reduce the difficulty. With selfish mining, the griefing factor may be infinity for coalitions of size as low as 23.21\%.

|

||||

|

||||

Let us start off our griefing analysis by not taking into account validator churn, so the validator set is always the same. In Casper, we can identify the following deviating strategies:

|

||||

|

||||

\begin{enumerate}

|

||||

\item A minority of validators do not prepare, or prepare incorrect values.

|

||||

\item (Mirror image of 1) A censorship attack where a majority of validators does not accept prepares from a minority of validators (or other isomorphic attacks such as waiting for the minority to prepare hash $H1$ and then preparing $H2$, making $H2$ the dominant chain and denying the victims their rewards)

|

||||

\item A minority of validators do not commit.

|

||||

\item (Mirror image of 3) A censorship attack where a majority of validators does not accept commits from a minority of validators

|

||||

\end{enumerate}

|

||||

|

||||

Notice that, from the point of view of griefing factor analysis, it is immaterial whether or not any hash in a given epoch was justified or finalized. The Casper mechanism only pays attention to finalization in order to calculate $BP(D, e, LFE)$, the penalty scaling factor. This value scales penalties evenly for all participants, so it does not affect griefing factors.

|

||||

|

||||

Let us now analyze the attack types:

|

||||

|

||||

\begin{tabularx}{\textwidth}{|X|X|X|}

|

||||

\hline

|

||||

Attack & Amount lost by attacker & Amount lost by victims \\

|

||||

\hline

|

||||

Minority of size $\alpha < \frac{1}{2}$ non-prepares & $NPP * \alpha + NPCP(\alpha) * \alpha$ & $NPCP(\alpha) * (1-\alpha)$ \\

|

||||

Majority censors $\alpha < \frac{1}{2}$ prepares & $NPCP(\alpha) * (1-\alpha)$ & $NPP * \alpha + NPCP(\alpha) * \alpha$ \\

|

||||

Minority of size $\alpha < \frac{1}{2}$ non-commits & $NCP * \alpha + NCCP(\alpha) * \alpha$ & $NCCP(\alpha) * (1-\alpha)$ \\

|

||||

Majority censors $\alpha < \frac{1}{2}$ commits & $NCCP(\alpha) * (1-\alpha)$ & $NCP * \alpha + NCCP(\alpha) * \alpha$ \\

|

||||

\hline

|

||||

\end{tabularx}

|

||||

|

||||

In general, we see a perfect symmetry between the non-commit case and the non-prepare case, so we can assume $\frac{NCCP(\alpha)}{NCP} = \frac{NPCP(\alpha)}{NPP}$. Also, from a protocol utility standpoint, we can make the observation that seeing $\frac{1}{3} \le p_c < \frac{2}{3}$ commits is better than seeing fewer commits, as it gives at least some economic security against finality reversions, so we do want to reward this scenario more than the scenario where we get $\frac{1}{3} \le p_c < \frac{2}{3}$ prepares. Another way to view the situation is to observe that $\frac{1}{3}$ non-prepares causes \textit{everyone} to non-commit, so it should be treated with equal severity.

|

||||

|

||||

In the normal case, anything less than $\frac{1}{3}$ commits provides no economic security, so we can treat $p_c < \frac{1}{3}$ commits as equivalent to no commits; this thus suggests $NPP = 2 * NCP$. We can also normalize $NCP = 1$.

|

||||

|

||||

Now, let us analyze the griefing factors, to try to determine an optimal shape for $NCCP$. The griefing factor for non-committing is:

|

||||

|

||||

$$\frac{(1-\alpha) * NCCP(\alpha)}{\alpha * (1 + NCCP(\alpha))}$$

|

||||

|

||||

The griefing factor for censoring is the inverse of this. If we want the griefing factor for non-committing to equal one, then we could compute:

|

||||

|

||||