Merge branch 'main' into deploy-app-dev

192

docs/UsingSpiffdemo/Getting_Started.md

Normal file

@ -0,0 +1,192 @@

|

||||

# Using Spiffdemo.org

|

||||

Spiffdemo is a demo version of SpiffWorkflow, offering a limited set of functions and features.

|

||||

It provides users a platform to explore workflow concepts through a collection of top-level examples, diagrams, and workflows.

|

||||

Users can interact with pre-built models, make modifications, and visualize process flows.

|

||||

As a demonstration version, Spiffdemo gives only a glimpse of what SpiffWorkflow can do.

|

||||

SpiffWorkflow, on the other hand, is a full-fledged tool that offers a broader range of features for creating and managing workflows.

|

||||

|

||||

| Category | Spiffdemo (Demo Version) | SpiffWorkflow (Full Version) |

|

||||

|---------|-------------------------|------------------------------|

|

||||

| Functionality | Limited set of functions and features | Comprehensive set of features and functions for creating, managing, and optimizing process models and workflows |

|

||||

| Purpose | Provides a platform for exploration and demonstration of workflow concepts | Enables users to create, manage, and optimize their own process models and workflows for their specific needs and requirements |

|

||||

| Features | Limited functionality for interacting with pre-built models and making modifications | Advanced features such as task management, process modeling, diagram editing, and customizable properties |

|

||||

|

||||

## How to Log In to Spiffdemo

|

||||

To begin your journey with Spiffdemo, open your web browser and navigate to the official Spiffdemo website.

|

||||

|

||||

On the login screen, you will find the option to log in using Single Sign-On.

|

||||

Click the Single Sign-On button and select your preferred login method, such as using your Gmail account.

|

||||

|

||||

<p align="center">

|

||||

<img src="https://lh3.googleusercontent.com/lfwuApYvOV1336IIbaQnh63niw6mmLJkpyFjM6lm3oHXClzwSh9O7l4q6CGmIjLrTRrHd_DzRGP7E-Km7IcD-zg0PZmw2IpTLjzQgTCiJSASZqFplvhHCfmXMvHcKDotNAYwRIcAEWYSrlLuka4U8Nk" alt="drawing" width="400"/>

|

||||

|

||||

|

||||

```{admonition} Note: Stay tuned as we expand our sign-on options beyond Gmail.

|

||||

More ways to access Spiffdemo are coming your way!

|

||||

```

|

||||

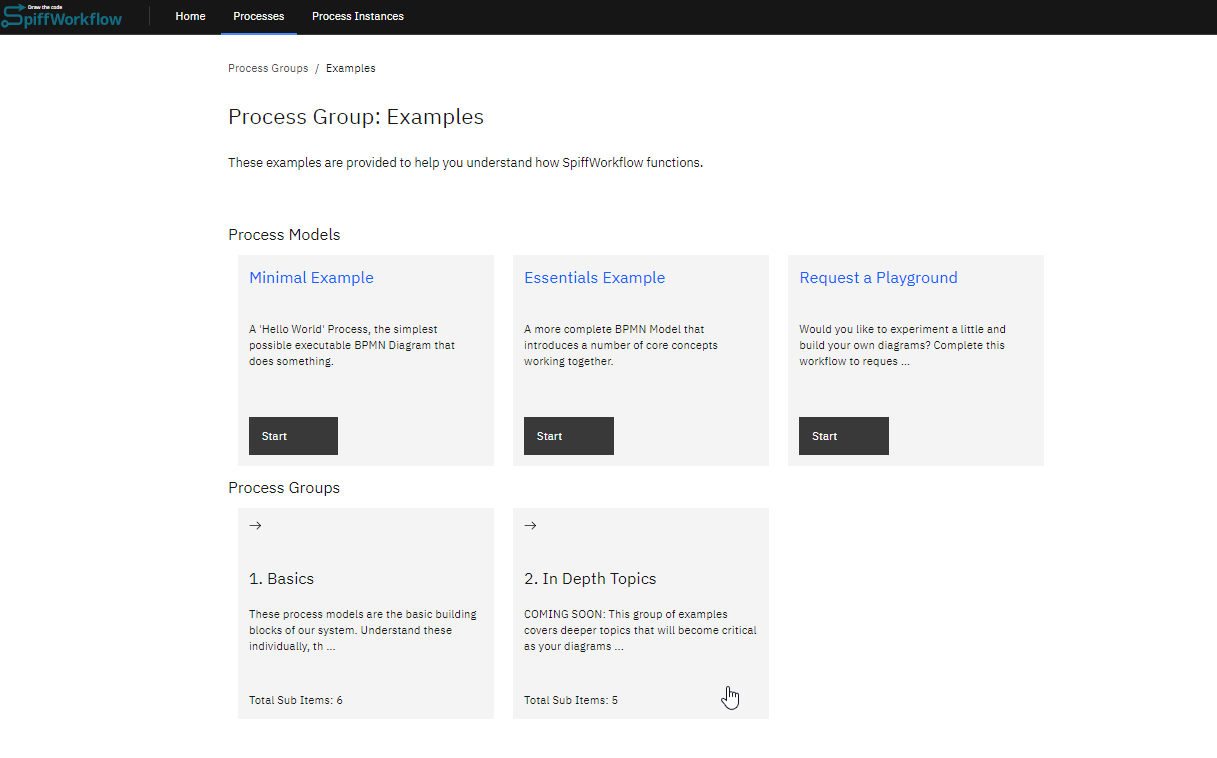

## Exploring the Examples

|

||||

|

||||

After logging in to the dashboard, familiarize yourself with the functions it offers and with how the underlying engine operates.

|

||||

On the demo website, we will explore two examples, the Minimal Example and the Essential Example, to provide a clear understanding of the process.

|

||||


### Minimal Example

|

||||

|

||||

Let's begin with the Minimal Example, which serves as a "Hello World" process: a simple executable BPMN diagram designed to demonstrate basic functionality.

|

||||

Rather than immediately starting the process, we will first examine its structure.

|

||||

|

||||

|

||||

|

||||

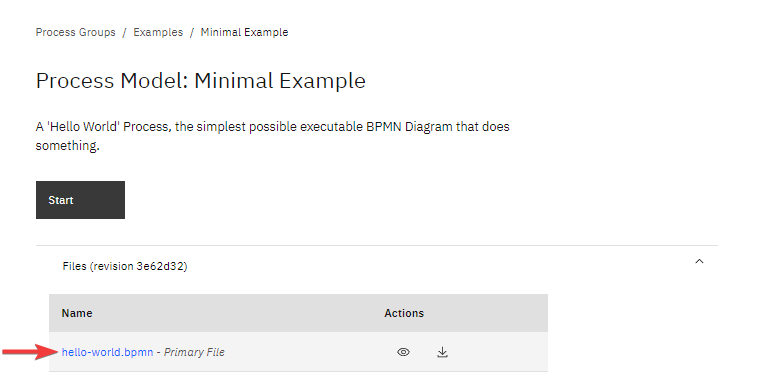

#### Access the Process Directory

|

||||

|

||||

Clicking on the process name will open the directory dedicated to the Minimal Example process.

|

||||

From here, you can start the process if desired, but for the purpose of this example, we will proceed with an explanation of its components and functionality.

|

||||

Locate and click the .bpmn file to open the BPMN editor.

|

||||


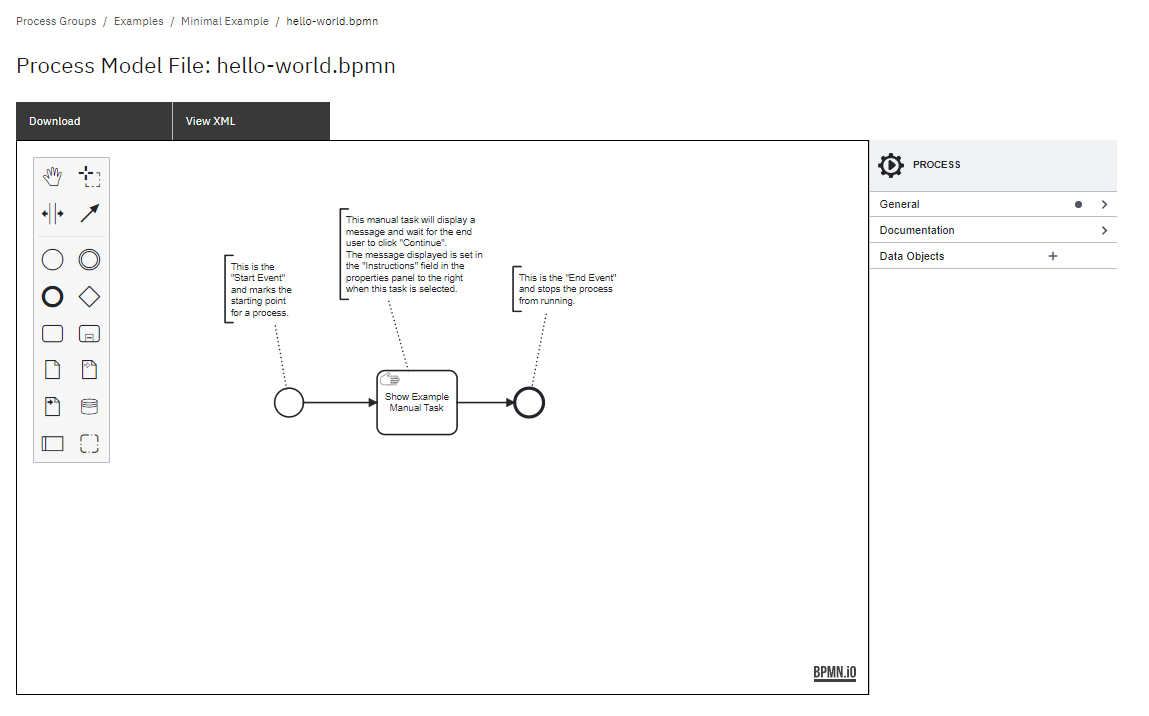

The BPMN editor provides a visual representation of the process workflow.

|

||||


#### Understand the Process Workflow

|

||||

|

||||

|

||||

|

||||

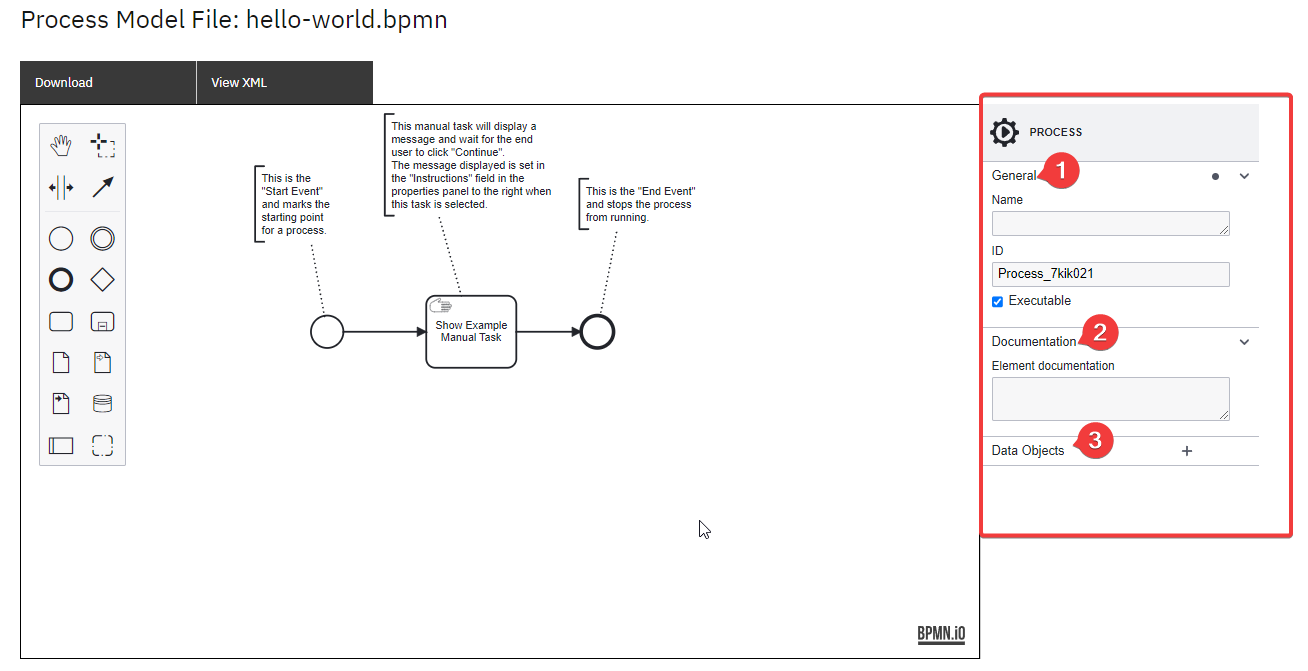

The Minimal Example process consists of three key elements: a start event, a manual task, and an end event.

|

||||

It is essential to understand the purpose and functionality of the properties panel, which is an integral component of the process diagram.

|

||||

Without selecting any specific task within the diagram editor, the properties panel will appear as follows:

|

||||

|

||||

|

||||

|

||||

General

|

||||

|

||||

- The Name field is usually empty unless the user chooses to provide one.

|

||||

It serves as a label or identifier for the process.

|

||||

|

||||

- The ID is populated automatically by the system by default. The user can change it, but it must remain unique among all processes.

|

||||

|

||||

- By default, all processes are executable, which means the engine can run the process.

|

||||

|

||||

|

||||

Documentation

|

||||

|

||||

- This field can be used to provide any notes related to the process.

|

||||

|

||||

|

||||

Data Objects

|

||||

|

||||

- Used to configure Data Objects added to the process.

|

||||

See full article [here](https://medium.com/@danfunk/understanding-bpmns-data-objects-with-spiffworkflow-26e195e23398).

|

||||

|

||||

|

||||

|

||||

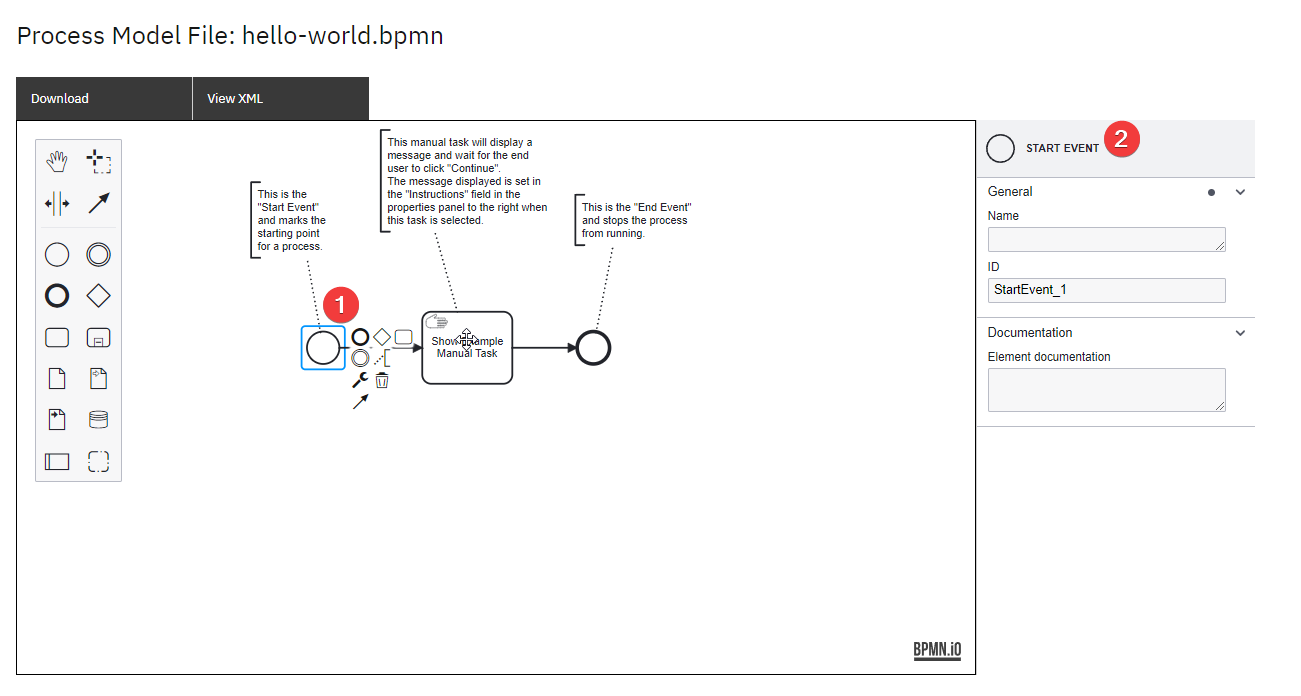

**1. Start Event**

|

||||

|

||||

|

||||

The first event in the minimal example is the start event.

|

||||

Each process diagram begins with a Start Event.

|

||||

Now click the first element of the diagram, “Start Event”, and explore the properties panel.

|

||||

|

||||

|

||||

|

||||

General

|

||||

|

||||

- The Name for a Start Event is often left blank unless it needs to be named to provide more clarity on the flow or to be able to view this name in Process Instance logs.

|

||||

|

||||

- The ID is populated automatically by the system by default. The user can change it, but it must remain unique within the process.

|

||||

The ID is often changed to make the element easier to reference in messages and logs; it must still be unique within the process.
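The uniqueness rule for IDs can be checked mechanically. The following is a minimal sketch, not part of Spiffdemo: it assumes a hand-written BPMN snippet shaped like the Minimal Example (start event, manual task, end event), and the element IDs and the `duplicate_ids` helper are hypothetical names chosen for illustration.

```python
import xml.etree.ElementTree as ET
from collections import Counter

# Standard BPMN 2.0 model namespace.
BPMN_NS = "http://www.omg.org/spec/BPMN/20100524/MODEL"

# Hypothetical stand-in for the Minimal Example; the IDs are made up.
MINIMAL_BPMN = f"""<bpmn:definitions xmlns:bpmn="{BPMN_NS}">
  <bpmn:process id="Process_minimal_example" isExecutable="true">
    <bpmn:startEvent id="StartEvent_1" />
    <bpmn:manualTask id="Activity_show_example" name="Show Example Manual Task" />
    <bpmn:endEvent id="EndEvent_1" />
  </bpmn:process>
</bpmn:definitions>"""


def duplicate_ids(bpmn_xml: str) -> list:
    """Return the sorted list of id values that occur more than once."""
    root = ET.fromstring(bpmn_xml)
    counts = Counter(el.get("id") for el in root.iter() if el.get("id"))
    return sorted(value for value, n in counts.items() if n > 1)


print(duplicate_ids(MINIMAL_BPMN))
```

Renaming an element to an ID that is already in use makes that ID show up in the returned list, which is the situation the editor asks you to avoid.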

|

||||

|

||||

|

||||

Documentation

|

||||

|

||||

- This field is used to provide any notes related to the element.

|

||||

|

||||

|

||||

```{admonition} Note: In the minimal example, the Start Event is a None Start Event.

|

||||

This type of Start Event signifies that the process can be initiated without any triggering message or timer event.

|

||||

It is worth noting that there are other types of Start Events available, such as Message Start Events and Timer Start Events.

|

||||

These advanced Start Events will be discussed in detail in the subsequent sections, providing further insights into their specific use cases and functionalities.

|

||||

```

|

||||

|

||||

|

||||

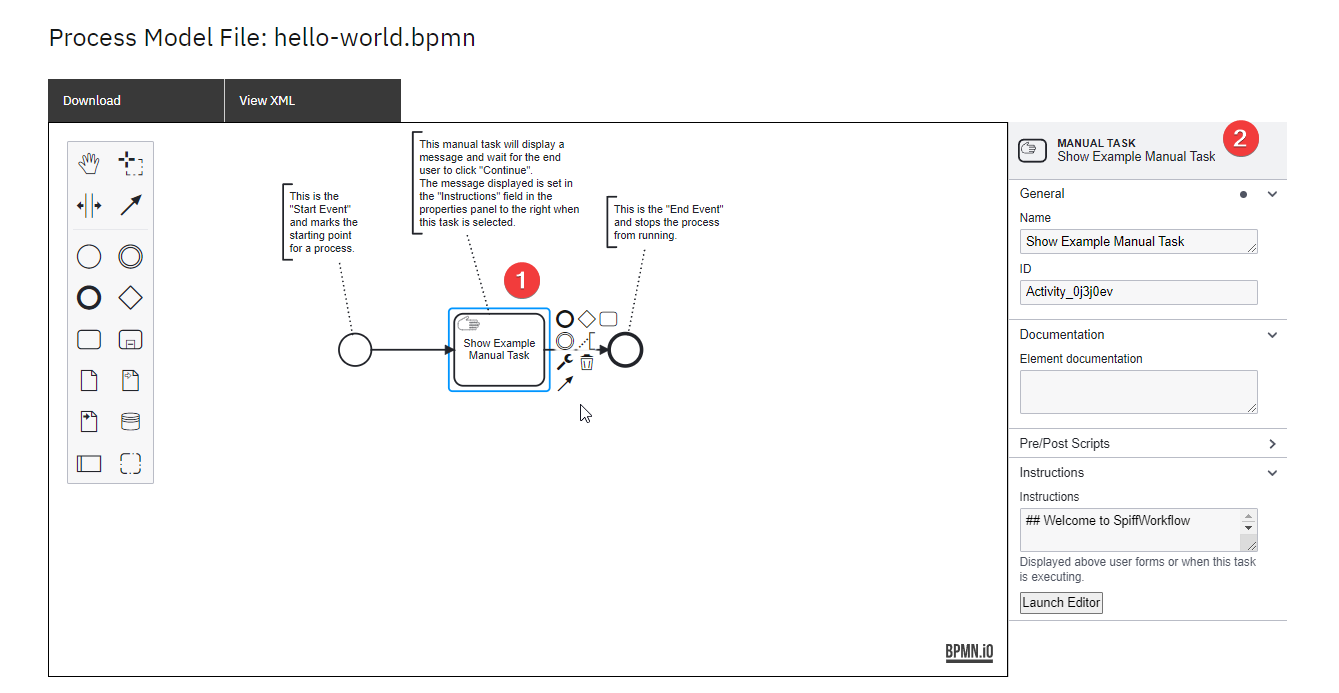

**2. Manual Task**

|

||||

|

||||

Within the process flow, the next step is a manual task.

|

||||

A Manual Task is a type of BPMN Task that requires human involvement.

|

||||

Manual Tasks do not require user input other than clicking a button to acknowledge the completion of a task that usually occurs outside of the process.

|

||||

|

||||

Now click the manual task, “Show Example Manual Task”, and explore the properties panel.

|

||||

|

||||

|

||||

|

||||

General

|

||||

|

||||

- Enter or edit the Manual Task name in this section.

|

||||

Alternatively, double-click on the Manual Task in the diagram.

|

||||

|

||||

- The ID is automatically entered and can be edited for improved referencing in error messages, but it must be unique within the process.

|

||||

|

||||

|

||||

Documentation

|

||||

|

||||

- This field is used to provide any notes related to the element.

|

||||

|

||||

|

||||

SpiffWorkflow Scripts

|

||||

|

||||

- Pre-Script: Updates Task Data using Python prior to execution of the Activity.

|

||||

|

||||

- Post-Script: Updates Task Data using Python immediately after execution of the Activity.
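To make the Pre-Script and Post-Script idea concrete, here is a minimal sketch under stated assumptions. It does not show how the SpiffWorkflow engine runs scripts; it only simulates the effect by executing a Python snippet with the task data as its variables. The `run_script` helper and the sample data are hypothetical.

```python
def run_script(script: str, task_data: dict) -> dict:
    """Execute a script against a copy of the task data and return the result.

    Hypothetical helper: variables assigned by the script become task data keys.
    """
    scope = dict(task_data)   # copy so the caller's dict is left untouched
    exec(script, {}, scope)   # assignments in the script land in `scope`
    return scope


task_data = {"first_name": "Ada"}

# Pre-Script: prepare data before the activity runs.
after_pre = run_script('greeting = "Hello, " + first_name', task_data)

# Post-Script: adjust data right after the activity completes.
after_post = run_script("greeting = greeting.upper()", after_pre)

print(after_pre["greeting"], "|", after_post["greeting"])
```

The point of the sketch is the ordering: the pre-script sees the incoming task data, and the post-script sees whatever the pre-script and the activity left behind.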

|

||||

|

||||

|

||||

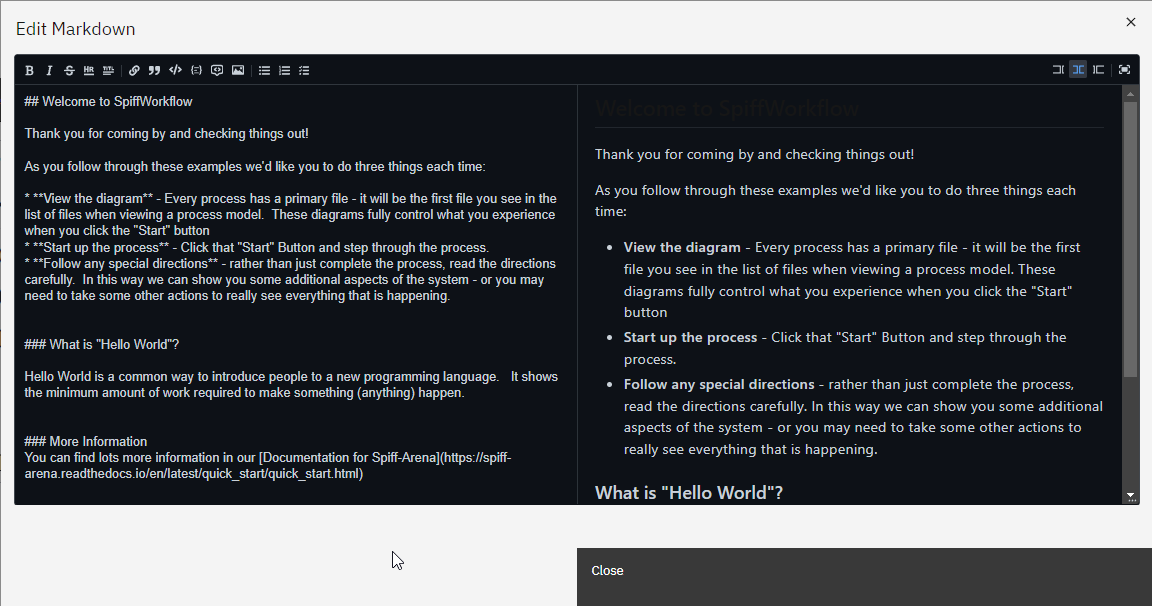

Instructions

|

||||

|

||||

During the execution of this task, the following instructions will be displayed to the end user.

|

||||

This section serves as a means to format and present information to the user.

|

||||

The formatting is achieved through a combination of Markdown and Jinja.

|

||||

To view and edit the instructions, click on the editor, and a window will open displaying the instructions in the specified format.

|

||||

|

||||

|

||||

|

||||

|

||||

**3. End Event**

|

||||

|

||||

|

||||

The final element in the workflow is an end event.

|

||||

A BPMN diagram should contain an end event for every distinct end state of a process.

|

||||

|

||||

Now click the end event and explore the properties panel:

|

||||

|

||||

|

||||

|

||||

General

|

||||

|

||||

- The Name for an End Event is often left blank unless it needs to be named to provide more clarity on the flow or to be able to view this name in Process Instance logs.

|

||||

|

||||

- The ID is populated automatically by the system by default. The user can change it, but it must remain unique within the process.

|

||||

|

||||

|

||||

Documentation

|

||||

|

||||

- This field is used to provide any notes related to the element.

|

||||

|

||||

|

||||

Instructions

|

||||

|

||||

- These are the instructions for the end user, which are displayed when this task is executed. Click Launch Editor to see the Markdown file.

|

||||

|

||||

|

||||

|

||||

BIN

docs/UsingSpiffdemo/Images/End_Task_Properties.png

Normal file

|

After Width: | Height: | Size: 11 KiB |

BIN

docs/UsingSpiffdemo/Images/Instructions_panel.png

Normal file

|

After Width: | Height: | Size: 5.1 KiB |

BIN

docs/UsingSpiffdemo/Images/Login.png

Normal file

|

After Width: | Height: | Size: 31 KiB |

BIN

docs/UsingSpiffdemo/Images/Manual_task.png

Normal file

|

After Width: | Height: | Size: 46 KiB |

BIN

docs/UsingSpiffdemo/Images/Manual_task_Properties.png

Normal file

|

After Width: | Height: | Size: 14 KiB |

BIN

docs/UsingSpiffdemo/Images/Manual_task_Properties1.png

Normal file

|

After Width: | Height: | Size: 13 KiB |

BIN

docs/UsingSpiffdemo/Images/Manual_task_Properties12.png

Normal file

|

After Width: | Height: | Size: 13 KiB |

BIN

docs/UsingSpiffdemo/Images/Manual_task_instructions_panel.png

Normal file

|

After Width: | Height: | Size: 62 KiB |

BIN

docs/UsingSpiffdemo/Images/Navigating_Process.png

Normal file

|

After Width: | Height: | Size: 22 KiB |

BIN

docs/UsingSpiffdemo/Images/Propertise_panel.png

Normal file

|

After Width: | Height: | Size: 10 KiB |

BIN

docs/UsingSpiffdemo/Images/Start_Event_Properties.png

Normal file

|

After Width: | Height: | Size: 7.7 KiB |

BIN

docs/UsingSpiffdemo/Images/Start_Event_Properties1.png

Normal file

|

After Width: | Height: | Size: 7.7 KiB |

34

spiffworkflow-backend/migrations/versions/881cdb50a567_.py

Normal file

@ -0,0 +1,34 @@

|

||||

"""empty message

|

||||

|

||||

Revision ID: 881cdb50a567

|

||||

Revises: 377be1608b45

|

||||

Create Date: 2023-06-20 15:26:28.087551

|

||||

|

||||

"""

|

||||

from alembic import op

|

||||

import sqlalchemy as sa

|

||||

|

||||

|

||||

# revision identifiers, used by Alembic.

|

||||

revision = '881cdb50a567'

|

||||

down_revision = '377be1608b45'

|

||||

branch_labels = None

|

||||

depends_on = None

|

||||

|

||||

|

||||

def upgrade():
    # ### commands auto generated by Alembic - please adjust! ###
    with op.batch_alter_table('task', schema=None) as batch_op:
        batch_op.add_column(sa.Column('saved_form_data_hash', sa.String(length=255), nullable=True))
        batch_op.create_index(batch_op.f('ix_task_saved_form_data_hash'), ['saved_form_data_hash'], unique=False)

    # ### end Alembic commands ###


def downgrade():
    # ### commands auto generated by Alembic - please adjust! ###
    with op.batch_alter_table('task', schema=None) as batch_op:
        batch_op.drop_index(batch_op.f('ix_task_saved_form_data_hash'))
        batch_op.drop_column('saved_form_data_hash')

    # ### end Alembic commands ###

|

||||
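For reviewers who want to see the schema effect of this migration, the sketch below applies roughly equivalent statements to an in-memory SQLite database using only the standard library. It is an illustration, not what Alembic emits: the cut-down `task` table and the hand-written SQL are assumptions, and the real backend may target a different database.

```python
import sqlite3

conn = sqlite3.connect(":memory:")

# Assumed, heavily simplified stand-in for the real `task` table.
conn.execute("CREATE TABLE task (id INTEGER PRIMARY KEY, json_data_hash VARCHAR(255) NOT NULL)")
conn.execute("INSERT INTO task (json_data_hash) VALUES ('abc')")

# Rough equivalent of upgrade(): a nullable column plus a non-unique index.
conn.execute("ALTER TABLE task ADD COLUMN saved_form_data_hash VARCHAR(255)")
conn.execute("CREATE INDEX ix_task_saved_form_data_hash ON task (saved_form_data_hash)")

columns = [row[1] for row in conn.execute("PRAGMA table_info(task)")]
indexes = [row[1] for row in conn.execute("PRAGMA index_list(task)")]
existing_value = conn.execute("SELECT saved_form_data_hash FROM task").fetchone()[0]

print(columns, indexes, existing_value)
```

Because the column is nullable, rows that existed before the migration simply carry no saved draft, which is why no data backfill is needed.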

@ -1846,12 +1846,6 @@ paths:

|

||||

description: The unique id of an existing process instance.

|

||||

schema:

|

||||

type: integer

|

||||

- name: save_as_draft

|

||||

in: query

|

||||

required: false

|

||||

description: Save the data to task but do not complete it.

|

||||

schema:

|

||||

type: boolean

|

||||

get:

|

||||

tags:

|

||||

- Tasks

|

||||

@ -1888,6 +1882,44 @@ paths:

|

||||

schema:

|

||||

$ref: "#/components/schemas/OkTrue"

|

||||

|

||||

/tasks/{process_instance_id}/{task_guid}/save-draft:

|

||||

parameters:

|

||||

- name: task_guid

|

||||

in: path

|

||||

required: true

|

||||

description: The unique id of an existing task.

|

||||

schema:

|

||||

type: string

|

||||

- name: process_instance_id

|

||||

in: path

|

||||

required: true

|

||||

description: The unique id of an existing process instance.

|

||||

schema:

|

||||

type: integer

|

||||

post:

|

||||

tags:

|

||||

- Tasks

|

||||

operationId: spiffworkflow_backend.routes.tasks_controller.task_save_draft

|

||||

summary: Update the draft form for this task

|

||||

requestBody:

|

||||

content:

|

||||

application/json:

|

||||

schema:

|

||||

$ref: "#/components/schemas/ProcessGroup"

|

||||

responses:

|

||||

"200":

|

||||

description: One task

|

||||

content:

|

||||

application/json:

|

||||

schema:

|

||||

$ref: "#/components/schemas/Task"

|

||||

"202":

|

||||

description: "ok: true"

|

||||

content:

|

||||

application/json:

|

||||

schema:

|

||||

$ref: "#/components/schemas/OkTrue"

|

||||

|

||||

/tasks/{process_instance_id}/send-user-signal-event:

|

||||

parameters:

|

||||

- name: process_instance_id

|

||||

|

||||

@ -37,6 +37,10 @@ class JsonDataDict(TypedDict):

|

||||

# for added_key in added_keys:

|

||||

# added[added_key] = b[added_key]

|

||||

# final_tuple = [added, removed, changed]

|

||||

|

||||

|

||||

# to find the users of this model run:

|

||||

# grep -R '_data_hash: ' src/spiffworkflow_backend/models/

|

||||

class JsonDataModel(SpiffworkflowBaseDBModel):

|

||||

__tablename__ = "json_data"

|

||||

id: int = db.Column(db.Integer, primary_key=True)

|

||||

|

||||

@ -63,11 +63,13 @@ class TaskModel(SpiffworkflowBaseDBModel):

|

||||

|

||||

json_data_hash: str = db.Column(db.String(255), nullable=False, index=True)

|

||||

python_env_data_hash: str = db.Column(db.String(255), nullable=False, index=True)

|

||||

saved_form_data_hash: str | None = db.Column(db.String(255), nullable=True, index=True)

|

||||

|

||||

start_in_seconds: float | None = db.Column(db.DECIMAL(17, 6))

|

||||

end_in_seconds: float | None = db.Column(db.DECIMAL(17, 6))

|

||||

|

||||

data: dict | None = None

|

||||

saved_form_data: dict | None = None

|

||||

|

||||

# these are here to be compatible with task api

|

||||

form_schema: dict | None = None

|

||||

@ -89,6 +91,11 @@ class TaskModel(SpiffworkflowBaseDBModel):

|

||||

def json_data(self) -> dict:

|

||||

return JsonDataModel.find_data_dict_by_hash(self.json_data_hash)

|

||||

|

||||

def get_saved_form_data(self) -> dict | None:

|

||||

if self.saved_form_data_hash is not None:

|

||||

return JsonDataModel.find_data_dict_by_hash(self.saved_form_data_hash)

|

||||

return None

|

||||

|

||||

|

||||

class Task:

|

||||

HUMAN_TASK_TYPES = ["User Task", "Manual Task"]

|

||||

|

||||
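The `saved_form_data_hash` column follows the same pattern as `json_data_hash`: the task row stores only a hash, and the JSON payload is looked up by that hash. The sketch below illustrates the pattern with a plain dictionary. The hashing scheme used by `JsonDataModel` is not shown in this diff, so SHA-256 over key-sorted JSON is an assumption, as are the `store_json_data` helper and the in-memory store.

```python
import hashlib
import json
from typing import Optional

# Stands in for the json_data table (hypothetical in-memory store).
json_data_store = {}


def store_json_data(data: dict) -> str:
    """Store a dict under a hash of its contents and return that hash."""
    serialized = json.dumps(data, sort_keys=True)  # key order must not change the hash
    digest = hashlib.sha256(serialized.encode("utf-8")).hexdigest()
    json_data_store[digest] = data
    return digest


def find_data_dict_by_hash(digest: Optional[str]) -> Optional[dict]:
    """Mirror get_saved_form_data: no hash means no saved draft."""
    if digest is None:
        return None
    return json_data_store.get(digest)


saved_hash = store_json_data({"name": "Ada", "color": "green"})
print(saved_hash, find_data_dict_by_hash(saved_hash))
```

One consequence of this design is that identical payloads share one stored entry, and a 64-character hex digest fits comfortably in the `String(255)` column.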

@ -286,6 +286,7 @@ def task_show(process_instance_id: int, task_guid: str = "next") -> flask.wrappe

|

||||

can_complete = False

|

||||

|

||||

task_model.data = task_model.get_data()

|

||||

task_model.saved_form_data = task_model.get_saved_form_data()

|

||||

task_model.process_model_display_name = process_model.display_name

|

||||

task_model.process_model_identifier = process_model.id

|

||||

task_model.typename = task_definition.typename

|

||||

@ -463,11 +464,49 @@ def interstitial(process_instance_id: int) -> Response:

|

||||

)

|

||||

|

||||

|

||||

def task_save_draft(
    process_instance_id: int,
    task_guid: str,
    body: dict[str, Any],
) -> flask.wrappers.Response:
    principal = _find_principal_or_raise()
    process_instance = _find_process_instance_by_id_or_raise(process_instance_id)
    if not process_instance.can_submit_task():
        raise ApiError(
            error_code="process_instance_not_runnable",
            message=(
                f"Process Instance ({process_instance.id}) has status "
                f"{process_instance.status} which does not allow tasks to be submitted."
            ),
            status_code=400,
        )
    AuthorizationService.assert_user_can_complete_task(process_instance.id, task_guid, principal.user)
    task_model = _get_task_model_from_guid_or_raise(task_guid, process_instance_id)
    json_data_dict = TaskService.update_task_data_on_task_model_and_return_dict_if_updated(
        task_model, body, "saved_form_data_hash"
    )
    if json_data_dict is not None:
        JsonDataModel.insert_or_update_json_data_dict(json_data_dict)
        db.session.add(task_model)
        db.session.commit()

    return Response(
        json.dumps(
            {
                "ok": True,
                "process_model_identifier": process_instance.process_model_identifier,
                "process_instance_id": process_instance_id,
            }
        ),
        status=200,
        mimetype="application/json",
    )

|

||||

|

||||

|

||||

def _task_submit_shared(

|

||||

process_instance_id: int,

|

||||

task_guid: str,

|

||||

body: dict[str, Any],

|

||||

save_as_draft: bool = False,

|

||||

) -> flask.wrappers.Response:

|

||||

principal = _find_principal_or_raise()

|

||||

process_instance = _find_process_instance_by_id_or_raise(process_instance_id)

|

||||

@ -494,59 +533,34 @@ def _task_submit_shared(

|

||||

)

|

||||

)

|

||||

|

||||

# multi-instance code from crconnect - we may need it or may not

|

||||

# if terminate_loop and spiff_task.is_looping():

|

||||

# spiff_task.terminate_loop()

|

||||

#

|

||||

# If we need to update all tasks, then get the next ready task and if it a multi-instance with the same

|

||||

# task spec, complete that form as well.

|

||||

# if update_all:

|

||||

# last_index = spiff_task.task_info()["mi_index"]

|

||||

# next_task = processor.next_task()

|

||||

# while next_task and next_task.task_info()["mi_index"] > last_index:

|

||||

# __update_task(processor, next_task, form_data, user)

|

||||

# last_index = next_task.task_info()["mi_index"]

|

||||

# next_task = processor.next_task()

|

||||

human_task = _find_human_task_or_raise(

|

||||

process_instance_id=process_instance_id,

|

||||

task_guid=task_guid,

|

||||

only_tasks_that_can_be_completed=True,

|

||||

)

|

||||

|

||||

if save_as_draft:

|

||||

task_model = _get_task_model_from_guid_or_raise(task_guid, process_instance_id)

|

||||

ProcessInstanceService.update_form_task_data(process_instance, spiff_task, body, g.user)

|

||||

json_data_dict = TaskService.update_task_data_on_task_model_and_return_dict_if_updated(

|

||||

task_model, spiff_task.data, "json_data_hash"

|

||||

)

|

||||

if json_data_dict is not None:

|

||||

JsonDataModel.insert_or_update_json_data_dict(json_data_dict)

|

||||

db.session.add(task_model)

|

||||

db.session.commit()

|

||||

else:

|

||||

human_task = _find_human_task_or_raise(

|

||||

process_instance_id=process_instance_id,

|

||||

task_guid=task_guid,

|

||||

only_tasks_that_can_be_completed=True,

|

||||

)

|

||||

with sentry_sdk.start_span(op="task", description="complete_form_task"):

|

||||

with ProcessInstanceQueueService.dequeued(process_instance):

|

||||

ProcessInstanceService.complete_form_task(

|

||||

processor=processor,

|

||||

spiff_task=spiff_task,

|

||||

data=body,

|

||||

user=g.user,

|

||||

human_task=human_task,

|

||||

)

|

||||

|

||||

with sentry_sdk.start_span(op="task", description="complete_form_task"):

|

||||

with ProcessInstanceQueueService.dequeued(process_instance):

|

||||

ProcessInstanceService.complete_form_task(

|

||||

processor=processor,

|

||||

spiff_task=spiff_task,

|

||||

data=body,

|

||||

user=g.user,

|

||||

human_task=human_task,

|

||||

)

|

||||

|

||||

next_human_task_assigned_to_me = (

|

||||

HumanTaskModel.query.filter_by(process_instance_id=process_instance_id, completed=False)

|

||||

.order_by(asc(HumanTaskModel.id)) # type: ignore

|

||||

.join(HumanTaskUserModel)

|

||||

.filter_by(user_id=principal.user_id)

|

||||

.first()

|

||||

)

|

||||

if next_human_task_assigned_to_me:

|

||||

return make_response(jsonify(HumanTaskModel.to_task(next_human_task_assigned_to_me)), 200)

|

||||

elif processor.next_task():

|

||||

task = ProcessInstanceService.spiff_task_to_api_task(processor, processor.next_task())

|

||||

return make_response(jsonify(task), 200)

|

||||

next_human_task_assigned_to_me = (

|

||||

HumanTaskModel.query.filter_by(process_instance_id=process_instance_id, completed=False)

|

||||

.order_by(asc(HumanTaskModel.id)) # type: ignore

|

||||

.join(HumanTaskUserModel)

|

||||

.filter_by(user_id=principal.user_id)

|

||||

.first()

|

||||

)

|

||||

if next_human_task_assigned_to_me:

|

||||

return make_response(jsonify(HumanTaskModel.to_task(next_human_task_assigned_to_me)), 200)

|

||||

elif processor.next_task():

|

||||

task = ProcessInstanceService.spiff_task_to_api_task(processor, processor.next_task())

|

||||

return make_response(jsonify(task), 200)

|

||||

|

||||

return Response(

|

||||

json.dumps(

|

||||

@ -565,10 +579,9 @@ def task_submit(

|

||||

process_instance_id: int,

|

||||

task_guid: str,

|

||||

body: dict[str, Any],

|

||||

save_as_draft: bool = False,

|

||||

) -> flask.wrappers.Response:

|

||||

with sentry_sdk.start_span(op="controller_action", description="tasks_controller.task_submit"):

|

||||

return _task_submit_shared(process_instance_id, task_guid, body, save_as_draft)

|

||||

return _task_submit_shared(process_instance_id, task_guid, body)

|

||||

|

||||

|

||||

def _get_tasks(

|

||||

|

||||
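With `save_as_draft` removed from `task_submit`, clients save drafts through the new `save-draft` route instead. The sketch below builds such a request with the standard library without sending it. The `/v1.0` prefix is taken from the test URLs in this change; the base URL, the bearer-token header, and the `build_save_draft_request` helper are assumptions made for illustration.

```python
import json
import urllib.request


def build_save_draft_request(
    base_url: str,
    process_instance_id: int,
    task_guid: str,
    form_data: dict,
    token: str,
) -> urllib.request.Request:
    """Build (but do not send) a POST request to the save-draft route."""
    url = f"{base_url}/v1.0/tasks/{process_instance_id}/{task_guid}/save-draft"
    return urllib.request.Request(
        url,
        data=json.dumps(form_data).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {token}",  # assumed auth scheme
        },
        method="POST",
    )


# Hypothetical values for illustration only.
request = build_save_draft_request("http://localhost:7000", 42, "abc-123", {"name": "Ada"}, "TOKEN")
print(request.get_method(), request.full_url)
```

Passing the request to `urllib.request.urlopen` would send it; the handler above is expected to answer with an `ok: true` payload when the draft is stored.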

@ -61,7 +61,7 @@ class BaseTest:

|

||||

process_model_id: str | None = "random_fact",

|

||||

bpmn_file_name: str | None = None,

|

||||

bpmn_file_location: str | None = None,

|

||||

) -> str:

|

||||

) -> ProcessModelInfo:

|

||||

"""Creates a process group.

|

||||

|

||||

Creates a process model

|

||||

@ -83,13 +83,13 @@ class BaseTest:

|

||||

user=user,

|

||||

)

|

||||

|

||||

load_test_spec(

|

||||

process_model = load_test_spec(

|

||||

process_model_id=process_model_identifier,

|

||||

bpmn_file_name=bpmn_file_name,

|

||||

process_model_source_directory=bpmn_file_location,

|

||||

)

|

||||

|

||||

return process_model_identifier

|

||||

return process_model

|

||||

|

||||

def create_process_group(

|

||||

self,

|

||||

@ -188,7 +188,7 @@ class BaseTest:

|

||||

process_model_id: str,

|

||||

process_model_location: str | None = None,

|

||||

process_model: ProcessModelInfo | None = None,

|

||||

file_name: str = "random_fact.svg",

|

||||

file_name: str = "random_fact.bpmn",

|

||||

file_data: bytes = b"abcdef",

|

||||

user: UserModel | None = None,

|

||||

) -> Any:

|

||||

|

||||

@ -61,6 +61,9 @@ class ExampleDataLoader:

|

||||

)

|

||||

|

||||

files = sorted(glob.glob(file_glob))

|

||||

|

||||

if len(files) == 0:

|

||||

raise Exception(f"Could not find any files with file_glob: {file_glob}")

|

||||

for file_path in files:

|

||||

if os.path.isdir(file_path):

|

||||

continue # Don't try to process sub directories

|

||||

|

||||

@ -24,7 +24,7 @@ class TestNestedGroups(BaseTest):

|

||||

process_model_id = "manual_task"

|

||||

bpmn_file_name = "manual_task.bpmn"

|

||||

bpmn_file_location = "manual_task"

|

||||

process_model_identifier = self.create_group_and_model_with_bpmn(

|

||||

process_model = self.create_group_and_model_with_bpmn(

|

||||

client,

|

||||

with_super_admin_user,

|

||||

process_group_id=process_group_id,

|

||||

@ -34,13 +34,13 @@ class TestNestedGroups(BaseTest):

|

||||

)

|

||||

response = self.create_process_instance_from_process_model_id_with_api(

|

||||

client,

|

||||

process_model_identifier,

|

||||

process_model.id,

|

||||

self.logged_in_headers(with_super_admin_user),

|

||||

)

|

||||

process_instance_id = response.json["id"]

|

||||

|

||||

client.post(

|

||||

f"/v1.0/process-instances/{self.modify_process_identifier_for_path_param(process_model_identifier)}/{process_instance_id}/run",

|

||||

f"/v1.0/process-instances/{self.modify_process_identifier_for_path_param(process_model.id)}/{process_instance_id}/run",

|

||||

headers=self.logged_in_headers(with_super_admin_user),

|

||||

)

|

||||

process_instance = ProcessInstanceService().get_process_instance(process_instance_id)

|

||||

@ -79,7 +79,7 @@ class TestNestedGroups(BaseTest):

|

||||

process_model_id = "manual_task"

|

||||

bpmn_file_name = "manual_task.bpmn"

|

||||

bpmn_file_location = "manual_task"

|

||||

process_model_identifier = self.create_group_and_model_with_bpmn(

|

||||

process_model = self.create_group_and_model_with_bpmn(

|

||||

client,

|

||||

with_super_admin_user,

|

||||

process_group_id=process_group_id,

|

||||

@ -89,7 +89,7 @@ class TestNestedGroups(BaseTest):

|

||||

)

|

||||

response = self.create_process_instance_from_process_model_id_with_api(

|

||||

client,

|

||||

process_model_identifier,

|

||||

process_model.id,

|

||||

self.logged_in_headers(with_super_admin_user),

|

||||

)

|

||||

process_instance_id = response.json["id"]

|

||||

|

||||

@ -0,0 +1,320 @@

|

||||

import json

|

||||

|

||||

from flask.app import Flask

|

||||

from flask.testing import FlaskClient

|

||||

from spiffworkflow_backend.models.db import db

|

||||

from spiffworkflow_backend.models.group import GroupModel

|

||||

from spiffworkflow_backend.models.human_task import HumanTaskModel

|

||||

from spiffworkflow_backend.models.process_instance import ProcessInstanceModel

|

||||

from spiffworkflow_backend.models.user import UserModel

|

||||

from spiffworkflow_backend.routes.tasks_controller import _dequeued_interstitial_stream

|

||||

from spiffworkflow_backend.services.authorization_service import AuthorizationService

|

||||

from spiffworkflow_backend.services.process_instance_processor import ProcessInstanceProcessor

|

||||

|

||||

from tests.spiffworkflow_backend.helpers.base_test import BaseTest

|

||||

|

||||

|

||||

class TestTasksController(BaseTest):

|

||||

def test_task_show(

|

||||

self,

|

||||

app: Flask,

|

||||

client: FlaskClient,

|

||||

with_db_and_bpmn_file_cleanup: None,

|

||||

with_super_admin_user: UserModel,

|

||||

) -> None:

|

||||

process_group_id = "my_process_group"

|

||||

process_model_id = "dynamic_enum_select_fields"

|

||||

bpmn_file_location = "dynamic_enum_select_fields"

|

||||

process_model = self.create_group_and_model_with_bpmn(

|

||||

client,

|

||||

with_super_admin_user,

|

||||

process_group_id=process_group_id,

|

||||

process_model_id=process_model_id,

|

||||

# bpmn_file_name=bpmn_file_name,

|

||||

bpmn_file_location=bpmn_file_location,

|

||||

)

|

||||

|

||||

headers = self.logged_in_headers(with_super_admin_user)

|

||||

response = self.create_process_instance_from_process_model_id_with_api(client, process_model.id, headers)

|

||||

assert response.json is not None

|

||||

process_instance_id = response.json["id"]

|

||||

|

||||

response = client.post(

|

||||

f"/v1.0/process-instances/{self.modify_process_identifier_for_path_param(process_model.id)}/{process_instance_id}/run",

|

||||

headers=self.logged_in_headers(with_super_admin_user),

|

||||

)

|

||||

# Call this to assure all engine-steps are fully processed.

|

||||

_dequeued_interstitial_stream(process_instance_id)

|

||||

assert response.json is not None

|

||||

assert response.json["next_task"] is not None

|

||||

|

||||

human_tasks = (

|

||||

db.session.query(HumanTaskModel).filter(HumanTaskModel.process_instance_id == process_instance_id).all()

|

||||

)

|

||||

assert len(human_tasks) == 1

|

||||

human_task = human_tasks[0]

|

||||

response = client.get(

|

||||

f"/v1.0/tasks/{process_instance_id}/{human_task.task_id}",

|

||||

headers=self.logged_in_headers(with_super_admin_user),

|

||||

)

|

||||

assert response.status_code == 200

|

||||

assert response.json is not None

|

||||

assert response.json["form_schema"]["definitions"]["Color"]["anyOf"][1]["title"] == "Green"

|

||||

|

||||

# if you set this in task data:

|

||||

# form_ui_hidden_fields = ["veryImportantFieldButOnlySometimes", "building.floor"]

|

||||

# you will get this ui schema:

|

||||

assert response.json["form_ui_schema"] == {

|

||||

"building": {"floor": {"ui:widget": "hidden"}},

|

||||

"veryImportantFieldButOnlySometimes": {"ui:widget": "hidden"},

|

||||

}

|

||||

|

||||

def test_interstitial_page(

|

||||

self,

|

||||

app: Flask,

|

||||

client: FlaskClient,

|

||||

with_db_and_bpmn_file_cleanup: None,

|

||||

with_super_admin_user: UserModel,

|

||||

) -> None:

|

||||

process_group_id = "my_process_group"

|

||||

process_model_id = "interstitial"

|

||||

bpmn_file_location = "interstitial"

|

||||

# Assure we have someone in the finance team

|

||||

finance_user = self.find_or_create_user("testuser2")

|

||||

AuthorizationService.import_permissions_from_yaml_file()

|

||||

process_model = self.create_group_and_model_with_bpmn(

|

||||

client,

|

||||

with_super_admin_user,

|

||||

process_group_id=process_group_id,

|

||||

process_model_id=process_model_id,

|

||||

bpmn_file_location=bpmn_file_location,

|

||||

)

|

||||

headers = self.logged_in_headers(with_super_admin_user)

|

||||

response = self.create_process_instance_from_process_model_id_with_api(client, process_model.id, headers)

|

||||

assert response.json is not None

|

||||

process_instance_id = response.json["id"]

|

||||

|

||||

response = client.post(

|

||||

f"/v1.0/process-instances/{self.modify_process_identifier_for_path_param(process_model.id)}/{process_instance_id}/run",

|

||||

headers=headers,

|

||||

)

|

||||

|

||||

assert response.json is not None

|

||||

assert response.json["next_task"] is not None

|

||||

assert response.json["next_task"]["state"] == "READY"

|

||||

assert response.json["next_task"]["title"] == "Script Task #2"

|

||||

|

||||

# Rather than call the API and deal with the Server Side Events, call the loop directly and convert it to

|

||||

# a list. It tests all of our code. No reason to test Flask's SSE support.

|

||||

stream_results = _dequeued_interstitial_stream(process_instance_id)

|

||||

results = list(stream_results)

|

||||

# strip the "data:" prefix and convert remaining string to dict.

|

||||

json_results = [json.loads(x[5:]) for x in results] # type: ignore

|

||||

# There should be 2 results back -

|

||||

# the first script task should not be returned (it contains no end user instructions)

|

||||

# The second script task should produce rendered jinja text

|

||||

# The Manual Task should then return a message as well.

|

||||

assert len(results) == 2

|

||||

assert json_results[0]["task"]["state"] == "READY"

|

||||

assert json_results[0]["task"]["title"] == "Script Task #2"

|

||||

assert json_results[0]["task"]["properties"]["instructionsForEndUser"] == "I am Script Task 2"

|

||||

assert json_results[1]["task"]["state"] == "READY"

|

||||

assert json_results[1]["task"]["title"] == "Manual Task"

|

||||

|

||||

response = client.put(

|

||||

f"/v1.0/tasks/{process_instance_id}/{json_results[1]['task']['id']}",

|

||||

headers=headers,

|

||||

)

|

||||

|

||||

assert response.json is not None

|

||||

|

||||

# we should now be on a task that does not belong to the original user, and the interstitial page should know this.

|

||||

results = list(_dequeued_interstitial_stream(process_instance_id))

|

||||

json_results = [json.loads(x[5:]) for x in results] # type: ignore

|

||||

assert len(results) == 1

|

||||

assert json_results[0]["task"]["state"] == "READY"

|

||||

assert json_results[0]["task"]["can_complete"] is False

|

||||

assert json_results[0]["task"]["title"] == "Please Approve"

|

||||

assert json_results[0]["task"]["properties"]["instructionsForEndUser"] == "I am a manual task in another lane"

|

||||

|

||||

# Suspending the process instance should still report that the user cannot complete the task.

|

||||

process_instance = ProcessInstanceModel.query.filter_by(id=process_instance_id).first()

|

||||

processor = ProcessInstanceProcessor(process_instance)

|

||||

processor.suspend()

|

||||

processor.save()

|

||||

|

||||

results = list(_dequeued_interstitial_stream(process_instance_id))

|

||||

json_results = [json.loads(x[5:]) for x in results] # type: ignore

|

||||

assert len(results) == 1

|

||||

assert json_results[0]["task"]["state"] == "READY"

|

||||

assert json_results[0]["task"]["can_complete"] is False

|

||||

assert json_results[0]["task"]["title"] == "Please Approve"

|

||||

assert json_results[0]["task"]["properties"]["instructionsForEndUser"] == "I am a manual task in another lane"

|

||||

|

||||

# Complete task as the finance user.

|

||||

response = client.put(

|

||||

f"/v1.0/tasks/{process_instance_id}/{json_results[0]['task']['id']}",

|

||||

headers=self.logged_in_headers(finance_user),

|

||||

)

|

||||

|

||||

# We should now be on the end task with a valid message, even after loading it many times.

|

||||

list(_dequeued_interstitial_stream(process_instance_id))

|

||||

list(_dequeued_interstitial_stream(process_instance_id))

|

||||

results = list(_dequeued_interstitial_stream(process_instance_id))

|

||||

json_results = [json.loads(x[5:]) for x in results] # type: ignore

|

||||

assert len(json_results) == 1

|

||||

assert json_results[0]["task"]["state"] == "COMPLETED"

|

||||

assert json_results[0]["task"]["properties"]["instructionsForEndUser"] == "I am the end task"

|

||||

|

||||

def test_correct_user_can_get_and_update_a_task(

|

||||

self,

|

||||

app: Flask,

|

||||

client: FlaskClient,

|

||||

with_db_and_bpmn_file_cleanup: None,

|

||||

with_super_admin_user: UserModel,

|

||||

) -> None:

|

||||

initiator_user = self.find_or_create_user("testuser4")

|

||||

finance_user = self.find_or_create_user("testuser2")

|

||||

assert initiator_user.principal is not None

|

||||

assert finance_user.principal is not None

|

||||

AuthorizationService.import_permissions_from_yaml_file()

|

||||

|

||||

finance_group = GroupModel.query.filter_by(identifier="Finance Team").first()

|

||||

assert finance_group is not None

|

||||

|

||||

process_group_id = "finance"

|

||||

process_model_id = "model_with_lanes"

|

||||

bpmn_file_name = "lanes.bpmn"

|

||||

bpmn_file_location = "model_with_lanes"

|

||||

process_model = self.create_group_and_model_with_bpmn(

|

||||

client,

|

||||

with_super_admin_user,

|

||||

process_group_id=process_group_id,

|

||||

process_model_id=process_model_id,

|

||||

bpmn_file_name=bpmn_file_name,

|

||||

bpmn_file_location=bpmn_file_location,

|

||||

)

|

||||

|

||||

response = self.create_process_instance_from_process_model_id_with_api(

|

||||

client,

|

||||

process_model.id,

|

||||

headers=self.logged_in_headers(initiator_user),

|

||||

)

|

||||

assert response.status_code == 201

|

||||

|

||||

assert response.json is not None

|

||||

process_instance_id = response.json["id"]

|

||||

response = client.post(

|

||||

f"/v1.0/process-instances/{self.modify_process_identifier_for_path_param(process_model.id)}/{process_instance_id}/run",

|

||||

headers=self.logged_in_headers(initiator_user),

|

||||

)

|

||||

assert response.status_code == 200

|

||||

|

||||

response = client.get(

|

||||

"/v1.0/tasks",

|

||||

headers=self.logged_in_headers(finance_user),

|

||||

)

|

||||

assert response.status_code == 200

|

||||

assert response.json is not None

|

||||

assert len(response.json["results"]) == 0

|

||||

|

||||

response = client.get(

|

||||

"/v1.0/tasks",

|

||||

headers=self.logged_in_headers(initiator_user),

|

||||

)

|

||||

assert response.status_code == 200

|

||||

assert response.json is not None

|

||||

assert len(response.json["results"]) == 1

|

||||

|

||||

task_id = response.json["results"][0]["id"]

|

||||

assert task_id is not None

|

||||

|

||||

response = client.put(

|

||||

f"/v1.0/tasks/{process_instance_id}/{task_id}",

|

||||

headers=self.logged_in_headers(finance_user),

|

||||

)

|

||||

assert response.status_code == 500

|

||||

assert response.json

|

||||

assert "UserDoesNotHaveAccessToTaskError" in response.json["message"]

|

||||

|

||||

response = client.put(

|

||||

f"/v1.0/tasks/{process_instance_id}/{task_id}",

|

||||

headers=self.logged_in_headers(initiator_user),

|

||||

)

|

||||

assert response.status_code == 200

|

||||

|

||||

response = client.get(

|

||||

"/v1.0/tasks",

|

||||

headers=self.logged_in_headers(initiator_user),

|

||||

)

|

||||

assert response.status_code == 200

|

||||

assert response.json is not None

|

||||

assert len(response.json["results"]) == 0

|

||||

|

||||

response = client.get(

|

||||

"/v1.0/tasks",

|

||||

headers=self.logged_in_headers(finance_user),

|

||||

)

|

||||

assert response.status_code == 200

|

||||

assert response.json is not None

|

||||

assert len(response.json["results"]) == 1

|

||||

|

||||

def test_task_save_draft(

|

||||

self,

|

||||

app: Flask,

|

||||

client: FlaskClient,

|

||||

with_db_and_bpmn_file_cleanup: None,

|

||||

with_super_admin_user: UserModel,

|

||||

) -> None:

|

||||

process_group_id = "test_group"

|

||||

process_model_id = "simple_form"

|

||||

process_model = self.create_group_and_model_with_bpmn(

|

||||

client,

|

||||

with_super_admin_user,

|

||||

process_group_id=process_group_id,

|

||||

process_model_id=process_model_id,

|

||||

)

|

||||

|

||||

response = self.create_process_instance_from_process_model_id_with_api(

|

||||

client,

|

||||

process_model.id,

|

||||

headers=self.logged_in_headers(with_super_admin_user),

|

||||

)

|

||||

assert response.status_code == 201

|

||||

|

||||

assert response.json is not None

|

||||

process_instance_id = response.json["id"]

|

||||

response = client.post(

|

||||

f"/v1.0/process-instances/{self.modify_process_identifier_for_path_param(process_model.id)}/{process_instance_id}/run",

|

||||

headers=self.logged_in_headers(with_super_admin_user),

|

||||

)

|

||||

assert response.status_code == 200

|

||||

|

||||

response = client.get(

|

||||

"/v1.0/tasks",

|

||||

headers=self.logged_in_headers(with_super_admin_user),

|

||||

)

|

||||

assert response.status_code == 200

|

||||

assert response.json is not None

|

||||

assert len(response.json["results"]) == 1

|

||||

|

||||

task_id = response.json["results"][0]["id"]

|

||||

assert task_id is not None

|

||||

|

||||

draft_data = {"HEY": "I'm draft"}

|

||||

|

||||

response = client.post(

|

||||

f"/v1.0/tasks/{process_instance_id}/{task_id}/save-draft",

|

||||

headers=self.logged_in_headers(with_super_admin_user),

|

||||

content_type="application/json",

|

||||

data=json.dumps(draft_data),

|

||||

)

|

||||

assert response.status_code == 200

|

||||

|

||||

response = client.get(

|

||||

f"/v1.0/tasks/{process_instance_id}/{task_id}",

|

||||

headers=self.logged_in_headers(with_super_admin_user),

|

||||

)

|

||||

assert response.status_code == 200

|

||||

assert response.json is not None

|

||||

assert response.json["saved_form_data"] == draft_data

|

||||

@ -27,9 +27,9 @@ class TestPermissions(BaseTest):

|

||||

) -> None:

|

||||

process_group_id = "group-a"

|

||||

load_test_spec(

|

||||

"group-a/timers_intermediate_catch_event",

|

||||

bpmn_file_name="timers_intermediate_catch_event.bpmn",

|

||||

process_model_source_directory="timers_intermediate_catch_event",

|

||||

"group-a/timer_intermediate_catch_event",

|

||||

bpmn_file_name="timer_intermediate_catch_event.bpmn",

|

||||

process_model_source_directory="timer_intermediate_catch_event",

|

||||

)

|

||||

dan = self.find_or_create_user()

|

||||

principal = dan.principal

|

||||

@ -53,12 +53,15 @@ class TestPermissions(BaseTest):

|

||||

process_group_ids = ["group-a", "group-b"]

|

||||

process_group_a_id = process_group_ids[0]

|

||||

process_group_b_id = process_group_ids[1]

|

||||

for process_group_id in process_group_ids:

|

||||

load_test_spec(

|

||||

f"{process_group_id}/timers_intermediate_catch_event",

|

||||

bpmn_file_name="timers_intermediate_catch_event",

|

||||

process_model_source_directory="timers_intermediate_catch_event",

|

||||

)

|

||||

load_test_spec(

|

||||

f"{process_group_a_id}/timer_intermediate_catch_event",

|

||||

bpmn_file_name="timer_intermediate_catch_event",

|

||||

process_model_source_directory="timer_intermediate_catch_event",

|

||||

)

|

||||

load_test_spec(

|

||||

f"{process_group_b_id}/hello_world",

|

||||

process_model_source_directory="hello_world",

|

||||

)

|

||||

group_a_admin = self.find_or_create_user()

|

||||

|

||||

permission_target = PermissionTargetModel(uri=f"/{process_group_a_id}")

|

||||

@ -80,12 +83,15 @@ class TestPermissions(BaseTest):

|

||||

def test_user_can_be_granted_access_through_a_group(self, app: Flask, with_db_and_bpmn_file_cleanup: None) -> None:

|

||||

process_group_ids = ["group-a", "group-b"]

|

||||

process_group_a_id = process_group_ids[0]

|

||||

for process_group_id in process_group_ids:

|

||||

load_test_spec(

|

||||

f"{process_group_id}/timers_intermediate_catch_event",

|

||||

bpmn_file_name="timers_intermediate_catch_event.bpmn",

|

||||

process_model_source_directory="timers_intermediate_catch_event",

|

||||

)

|

||||

load_test_spec(

|

||||

f"{process_group_a_id}/timer_intermediate_catch_event",

|

||||

bpmn_file_name="timer_intermediate_catch_event",

|

||||

process_model_source_directory="timer_intermediate_catch_event",

|

||||

)

|

||||

load_test_spec(

|

||||

f"{process_group_ids[1]}/hello_world",

|

||||

process_model_source_directory="hello_world",

|

||||

)

|

||||

user = self.find_or_create_user()

|

||||

group = GroupModel(identifier="groupA")

|

||||

db.session.add(group)

|

||||

@ -118,12 +124,15 @@ class TestPermissions(BaseTest):

|

||||

process_group_ids = ["group-a", "group-b"]

|

||||

process_group_a_id = process_group_ids[0]

|

||||

process_group_b_id = process_group_ids[1]

|

||||

for process_group_id in process_group_ids:

|

||||

load_test_spec(

|

||||

f"{process_group_id}/timers_intermediate_catch_event",

|

||||

bpmn_file_name="timers_intermediate_catch_event.bpmn",

|

||||

process_model_source_directory="timers_intermediate_catch_event",

|

||||

)

|

||||

load_test_spec(

|

||||

f"{process_group_a_id}/timer_intermediate_catch_event",

|

||||

bpmn_file_name="timer_intermediate_catch_event",

|

||||

process_model_source_directory="timer_intermediate_catch_event",

|

||||

)

|

||||

load_test_spec(

|

||||

f"{process_group_b_id}/hello_world",

|

||||

process_model_source_directory="hello_world",

|

||||

)

|

||||

group_a_admin = self.find_or_create_user()

|

||||

|

||||

permission_target = PermissionTargetModel(uri="/%")

|

||||

|

||||

@ -167,11 +167,6 @@ class TestTaskService(BaseTest):

|

||||

process_model_source_directory="signal_event_extensions",

|

||||

bpmn_file_name="signal_event_extensions",

|

||||

)

|

||||

load_test_spec(

|

||||

"test_group/SpiffCatchEventExtensions",

|

||||

process_model_source_directory="call_activity_nested",

|

||||

bpmn_file_name="SpiffCatchEventExtensions",

|

||||

)

|

||||

process_instance = self.create_process_instance_from_process_model(process_model)

|

||||

processor = ProcessInstanceProcessor(process_instance)

|

||||

processor.do_engine_steps(save=True, execution_strategy_name="greedy")

|

||||

|

||||

@ -3,6 +3,7 @@ import {

|

||||

isInteger,

|

||||

slugifyString,

|

||||

underscorizeString,

|

||||

recursivelyChangeNullAndUndefined,

|

||||

} from './helpers';

|

||||

|

||||

test('it can slugify a string', () => {

|

||||

@ -29,3 +30,72 @@ test('it can validate numeric values', () => {

|

||||

expect(isInteger('1 2')).toEqual(false);

|

||||

expect(isInteger(2)).toEqual(true);

|

||||

});

|

||||

|

||||

test('it can replace undefined values in object with null', () => {

|

||||

const sampleData = {

|

||||

talentType: 'foo',

|

||||

rating: 'bar',

|

||||

contacts: {

|

||||

gmeets: undefined,

|

||||

zoom: undefined,

|

||||

phone: 'baz',

|

||||

awesome: false,

|

||||

info: '',

|

||||

},

|

||||

items: [

|

||||

undefined,

|

||||

{

|

||||

contacts: {

|

||||

gmeets: undefined,

|

||||

zoom: undefined,

|

||||

phone: 'baz',

|

||||

},

|

||||

},

|

||||

'HEY',

|

||||

],

|

||||

undefined,

|

||||

};

|

||||

|

||||

expect((sampleData.items[1] as any).contacts.zoom).toEqual(undefined);

|

||||

|

||||

const result = recursivelyChangeNullAndUndefined(sampleData, null);

|

||||

expect(result).toEqual(sampleData);

|

||||

expect(result.items[1].contacts.zoom).toEqual(null);

|

||||

expect(result.items[2]).toEqual('HEY');

|

||||

expect(result.contacts.awesome).toEqual(false);

|

||||

expect(result.contacts.info).toEqual('');

|

||||

});

|

||||

|

||||

test('it can replace null values in object with undefined', () => {

|

||||

const sampleData = {

|

||||

talentType: 'foo',

|

||||

rating: 'bar',

|

||||

contacts: {

|

||||

gmeets: null,

|

||||

zoom: null,

|

||||

phone: 'baz',

|

||||

awesome: false,

|

||||

info: '',

|

||||

},

|

||||

items: [

|

||||

null,

|

||||

{

|

||||

contacts: {

|

||||

gmeets: null,

|

||||

zoom: null,

|

||||

phone: 'baz',

|

||||

},

|

||||

},

|

||||

'HEY',

|

||||

],

|

||||

};

|

||||

|

||||

expect((sampleData.items[1] as any).contacts.zoom).toEqual(null);

|

||||

|

||||

const result = recursivelyChangeNullAndUndefined(sampleData, undefined);

|

||||

expect(result).toEqual(sampleData);

|

||||

expect(result.items[1].contacts.zoom).toEqual(undefined);

|

||||

expect(result.items[2]).toEqual('HEY');

|

||||

expect(result.contacts.awesome).toEqual(false);

|

||||

expect(result.contacts.info).toEqual('');

|

||||

});

|

||||

|

||||

@ -11,6 +11,10 @@ import {

|

||||

DEFAULT_PAGE,

|

||||

} from './components/PaginationForTable';

|

||||

|

||||

export const doNothing = () => {

|

||||

return undefined;

|

||||

};

|

||||

|

||||

// https://www.30secondsofcode.org/js/s/slugify

|

||||

export const slugifyString = (str: any) => {

|

||||

return str

|

||||

@ -26,6 +30,24 @@ export const underscorizeString = (inputString: string) => {

|

||||

return slugifyString(inputString).replace(/-/g, '_');

|

||||

};

|

||||

|

||||

export const recursivelyChangeNullAndUndefined = (obj: any, newValue: any) => {

|

||||

if (obj === null || obj === undefined) {

|

||||

return newValue;

|

||||

}

|

||||

if (Array.isArray(obj)) {

|

||||

obj.forEach((value: any, index: number) => {

|

||||

// eslint-disable-next-line no-param-reassign

|

||||

obj[index] = recursivelyChangeNullAndUndefined(value, newValue);

|

||||

});

|

||||

} else if (typeof obj === 'object') {

|

||||

Object.entries(obj).forEach(([key, value]) => {

|

||||

// eslint-disable-next-line no-param-reassign

|

||||

obj[key] = recursivelyChangeNullAndUndefined(value, newValue);

|

||||

});

|

||||

}

|

||||

return obj;

|

||||

};

|

||||

|

||||

export const selectKeysFromSearchParams = (obj: any, keys: string[]) => {

|

||||

const newSearchParams: { [key: string]: string } = {};

|

||||

keys.forEach((key: string) => {

|

||||

|

||||
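
The `recursivelyChangeNullAndUndefined` helper added in this hunk rewrites its argument in place and returns the same reference. That is likely why the tests above can assert `expect(result).toEqual(sampleData)` after the call: both names point at one object. A minimal sketch of that behavior follows; the helper body is repeated from the hunk so the block is self-contained, and the `formData` object is a hypothetical example, not data from the application.

```typescript
// Copy of the helper from the hunk above, repeated only to keep this sketch self-contained.
const recursivelyChangeNullAndUndefined = (obj: any, newValue: any): any => {
  if (obj === null || obj === undefined) {
    return newValue;
  }
  if (Array.isArray(obj)) {
    obj.forEach((value: any, index: number) => {
      obj[index] = recursivelyChangeNullAndUndefined(value, newValue);
    });
  } else if (typeof obj === 'object') {
    Object.entries(obj).forEach(([key, value]) => {
      obj[key] = recursivelyChangeNullAndUndefined(value, newValue);
    });
  }
  return obj;
};

// Hypothetical form data (assumption: shaped loosely like the test fixture).
const formData: any = {
  name: 'foo',
  phone: undefined,
  items: [undefined, { zoom: undefined }],
};

// The call mutates formData and hands back the same reference.
const sameObject = recursivelyChangeNullAndUndefined(formData, null);

// After the call every undefined has become null, so the keys survive serialization.
const asJson = JSON.stringify(formData);

// Converting back (as TaskShow does when loading a task) restores undefined.
recursivelyChangeNullAndUndefined(formData, undefined);
const phoneAfterRoundTrip = formData.phone;
```

Because the input is mutated, callers that need the original object untouched would have to copy it first; the diff itself does not do that.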

@ -67,6 +67,8 @@ export interface Task {

|

||||

form_schema: any;

|

||||

form_ui_schema: any;

|

||||

signal_buttons: SignalButton[];

|

||||

|

||||

saved_form_data?: any;

|

||||

}

|

||||

|

||||

export interface ProcessInstanceTask {

|

||||

|

||||

@ -12,10 +12,15 @@ import {

|

||||

ButtonSet,

|

||||

} from '@carbon/react';

|

||||

|

||||

import { useDebouncedCallback } from 'use-debounce';

|

||||

import { Form } from '../rjsf/carbon_theme';

|

||||

import HttpService from '../services/HttpService';

|

||||

import useAPIError from '../hooks/UseApiError';

|

||||

import { modifyProcessIdentifierForPathParam } from '../helpers';

|

||||

import {

|

||||

doNothing,

|

||||

modifyProcessIdentifierForPathParam,

|

||||

recursivelyChangeNullAndUndefined,

|

||||

} from '../helpers';

|

||||

import { EventDefinition, Task } from '../interfaces';

|

||||

import ProcessBreadcrumb from '../components/ProcessBreadcrumb';

|

||||

import InstructionsForEndUser from '../components/InstructionsForEndUser';

|

||||

@ -27,7 +32,6 @@ export default function TaskShow() {

|

||||

const params = useParams();

|

||||

const navigate = useNavigate();

|

||||

const [disabled, setDisabled] = useState(false);

|

||||

const [noValidate, setNoValidate] = useState<boolean>(false);

|

||||

|

||||

const [taskData, setTaskData] = useState<any>(null);

|

||||

|

||||

@ -46,7 +50,10 @@ export default function TaskShow() {

|

||||

useEffect(() => {

|

||||

const processResult = (result: Task) => {

|

||||

setTask(result);

|

||||

setTaskData(result.data);

|

||||

|

||||

// convert null back to undefined so rjsf doesn't attempt to incorrectly validate them

|

||||

const taskDataToUse = result.saved_form_data || result.data;

|

||||

setTaskData(recursivelyChangeNullAndUndefined(taskDataToUse, undefined));

|

||||

setDisabled(false);

|

||||

if (!result.can_complete) {

|

||||

navigateToInterstitial(result);

|

||||

@ -83,6 +90,28 @@ export default function TaskShow() {

|

||||

// eslint-disable-next-line react-hooks/exhaustive-deps

|

||||

}, [params]);

|

||||

|

||||

// Before we auto-saved form data, we remembered what data was in the form, and then created a synthetic submit event

|

||||

// in order to implement a "Save and close" button. That button no longer saves (since we have auto-save), but the crazy

|

||||

// frontend code to support that Save and close button is here, in case we need to reference that someday:

|

||||

// https://github.com/sartography/spiff-arena/blob/182f56a1ad23ce780e8f5b0ed00efac3e6ad117b/spiffworkflow-frontend/src/routes/TaskShow.tsx#L329

|

||||

const autoSaveTaskData = (formData: any) => {

|

||||

HttpService.makeCallToBackend({

|

||||

path: `/tasks/${params.process_instance_id}/${params.task_id}/save-draft`,

|

||||

postBody: formData,

|

||||

httpMethod: 'POST',

|

||||

successCallback: doNothing,

|

||||

failureCallback: addError,

|

||||

});

|

||||

};

|

||||

|

||||

const addDebouncedTaskDataAutoSave = useDebouncedCallback(

|

||||

(value: any) => {

|

||||

autoSaveTaskData(value);

|

||||

},

|

||||

// delay in ms

|

||||

1000

|

||||

);

|

||||

|

||||

const processSubmitResult = (result: any) => {

|

||||

removeError();

|

||||

if (result.ok) {

|

||||
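
The auto-save in this hunk relies on debouncing: each form change restarts a timer, and only the last value is sent once the form has been quiet for the delay (1000 ms in the diff). The sketch below illustrates that idea in plain TypeScript. It is not the `use-debounce` implementation; the `debounce` function, the `Schedule` type, and the manual scheduler are all hypothetical names introduced here so the behavior can be shown without waiting on real timers.

```typescript
type Cancel = () => void;
// A scheduler runs `run` after `delayMs` and returns a way to cancel it.
type Schedule = (run: () => void, delayMs: number) => Cancel;

// Default scheduler backed by setTimeout / clearTimeout.
const timeoutSchedule: Schedule = (run, delayMs) => {
  const handle = setTimeout(run, delayMs);
  return () => clearTimeout(handle);
};

// Trailing-edge debounce: every call cancels the pending one and reschedules.
function debounce<T>(
  callback: (value: T) => void,
  delayMs: number,
  schedule: Schedule = timeoutSchedule
): (value: T) => void {
  let cancelPending: Cancel | null = null;
  return (value: T) => {
    if (cancelPending) {
      cancelPending();
    }
    cancelPending = schedule(() => {
      cancelPending = null;
      callback(value);
    }, delayMs);
  };
}

// Manual scheduler for the demonstration: it remembers the pending job
// instead of starting a real timer, and `flush` plays the role of "time passes".
let pending: (() => void) | null = null;
const manualSchedule: Schedule = (run) => {
  pending = run;
  return () => {
    pending = null;
  };
};
const flush = () => {
  if (pending) {
    const run = pending;
    pending = null;
    run();
  }
};

// Stand-in for the save-draft request: record what would have been sent.
const saved: string[] = [];
const autoSave = debounce((draft: string) => {
  saved.push(draft);
}, 1000, manualSchedule);

// Three quick edits, then the quiet period elapses once.
autoSave('a');
autoSave('ab');
autoSave('abc');
flush();
```

Only the final value reaches the save callback, which is why typing in the form should produce one `save-draft` request per pause rather than one per keystroke.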

@ -108,20 +137,16 @@ export default function TaskShow() {

|

||||

navigate(`/tasks`);

|

||||

return;

|

||||

}

|

||||

let queryParams = '';

|

||||

const queryParams = '';

|

||||

|

||||

// if validations are turned off then save as draft

|

||||

if (noValidate) {

|

||||

queryParams = '?save_as_draft=true';

|

||||

}

|

||||

setDisabled(true);

|

||||

removeError();

|

||||

delete dataToSubmit.isManualTask;

|

||||

|

||||

// NOTE: rjsf sets blank values to undefined and JSON.stringify removes keys with undefined values

|

||||

// so there is no way to clear out a field that previously had a value.

|

||||

// To resolve this, we could potentially go through the object that we are posting (either in here or in

|

||||

// HttpService) and translate all undefined values to null.

|

||||

// so we convert undefined values to null recursively so that we can unset values in form fields

|

||||

recursivelyChangeNullAndUndefined(dataToSubmit, null);

|

||||

|

||||

HttpService.makeCallToBackend({

|

||||

path: `/tasks/${params.process_instance_id}/${params.task_id}${queryParams}`,

|

||||

successCallback: processSubmitResult,

|

||||
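
The comments in this hunk turn on one detail of `JSON.stringify`: object keys whose value is `undefined` are omitted from the output, while keys whose value is `null` are kept. A cleared form field therefore never reaches the backend unless it is converted to `null` first. A short sketch with hypothetical field names:

```typescript
// A field the user cleared, as rjsf would leave it (undefined).
const cleared = { name: 'foo', phone: undefined as string | undefined };
// The phone key is dropped entirely, so the backend cannot tell it was cleared.
const droppedKey = JSON.stringify(cleared);

// The same field after undefined has been translated to null.
const nulled = { name: 'foo', phone: null as string | null };
// The phone key is preserved with an explicit null.
const keptKey = JSON.stringify(nulled);

// Inside arrays the rule differs: undefined elements are serialized as null.
const inArray = JSON.stringify([undefined, 'HEY']);
```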

@ -323,16 +348,8 @@ export default function TaskShow() {

|

||||

return errors;

|

||||

};

|

||||

|

||||

// This turns off validations and then dispatches the click event after

|

||||

// waiting a second to give the state time to update.

|

||||

// This is to allow saving the form without validations causing issues.

|

||||

const handleSaveAndCloseButton = () => {

|

||||

setNoValidate(true);

|

||||

setTimeout(() => {

|

||||

(document.getElementById('our-very-own-form') as any).dispatchEvent(

|

||||

new Event('submit', { cancelable: true, bubbles: true })

|

||||

);

|

||||

}, 1000);

|

||||

const handleCloseButton = () => {

|

||||

navigate(`/tasks`);

|

||||

};

|

||||

|

||||

const formElement = () => {

|

||||

@ -384,12 +401,12 @@ export default function TaskShow() {

|

||||

closeButton = (

|

||||

<Button

|

||||

id="close-button"

|

||||

onClick={handleSaveAndCloseButton}

|

||||

onClick={handleCloseButton}

|

||||

disabled={disabled}

|

||||

kind="secondary"

|

||||

title="Save changes without submitting."

|

||||

>

|

||||

Save and Close

|

||||

Close

|

||||

</Button>

|

||||

);

|

||||

}

|

||||

@ -427,14 +444,16 @@ export default function TaskShow() {

|

||||

id="our-very-own-form"

|

||||

disabled={disabled}

|

||||

formData={taskData}

|

||||

onChange={(obj: any) => setTaskData(obj.formData)}

|

||||

onChange={(obj: any) => {

|

||||

setTaskData(obj.formData);

|

||||

addDebouncedTaskDataAutoSave(obj.formData);

|

||||

}}

|

||||

onSubmit={handleFormSubmit}

|

||||

schema={jsonSchema}

|

||||

uiSchema={formUiSchema}

|

||||

widgets={widgets}

|

||||

validator={validator}

|

||||

customValidate={customValidate}

|

||||

noValidate={noValidate}

|

||||

omitExtraData

|

||||

>

|

||||

{reactFragmentToHideSubmitButton}

|

||||

|

||||