refactor: Complete rewrite of library to use mythxjs

v2.0.0 (2020-04-02)

Bug Fixes

* issues: Fixed issue list not matching the list of issues in the MythX dashboard.
* sources: It is no longer necessary to send all compiled contracts (which may be mutually exclusive) to each MythX analysis.

Features

* libs: Now using mythxjs instead of armlet (deprecated) to communicate with the MythX API.
* refactor: Complete refactor, with many of the changes based on sabre.

BREAKING CHANGES

* The --full CLI option is now obsolete and will not have any effect. Please use --mode full instead.
* Authentication to the MythX service now requires that the MYTHX_API_KEY environment variable is set, either in a .env file located in your project's root, or directly in an environment variable.

This commit is contained in:

parent

1501504bae

commit

71ca63b5a0

|

|

@ -0,0 +1,42 @@

|

|||

name: CI
on: [push]
jobs:
  build:
    runs-on: ubuntu-latest

    steps:
      - name: Begin CI...
        uses: actions/checkout@v2

      - name: Use Node 12
        uses: actions/setup-node@v1
        with:
          node-version: 12.x

      - name: Use cached node_modules
        uses: actions/cache@v1
        with:
          path: node_modules
          key: nodeModules-${{ hashFiles('**/yarn.lock') }}
          restore-keys: |
            nodeModules-

      - name: Install dependencies
        run: yarn install --frozen-lockfile
        env:
          CI: true

      - name: Lint
        run: yarn lint
        env:
          CI: true

      - name: Test
        run: yarn test --ci --coverage --maxWorkers=2
        env:
          CI: true

      - name: Build
        run: yarn build
        env:
          CI: true

|

|

@ -1,2 +1,4 @@

|

|||

node_modules/

|

||||

|

||||

*.log

|

||||

.DS_Store

|

||||

node_modules

|

||||

dist

|

||||

|

|

|

|||

|

|

@ -0,0 +1,4 @@

|

|||

engine-strict = true
package-lock = false
save-exact = true
scripts-prepend-node-path = true

|

||||

|

|

@ -0,0 +1,3 @@

|

|||

--*.scripts-prepend-node-path true
--install.check-files true
--install.network-timeout 600000

|

||||

|

|

@ -0,0 +1,21 @@

|

|||

# Change log

|

||||

# [2.0.0](https://github.com/embarklabs/embark-mythx/compare/v2.0.0...v1.0.3) (2020-04-02)

|

||||

|

||||

|

||||

### Bug Fixes

|

||||

|

||||

* **issues:** Fixed issue list not matching the list of issues in the MythX dashboard.

|

||||

* **sources:** It is no longer necessary to send all compiled contracts (which may be mutually exclusive) to each MythX analysis.

|

||||

|

||||

### Features

|

||||

|

||||

* **libs:** Now using [`mythxjs`](https://github.com/ConsenSys/mythxjs) instead of `armlet` (deprecated) to communicate with the MythX API.

|

||||

* **refactor:** Complete refactor, with many of the changes based on [`sabre`](https://github.com/b-mueller/sabre).

|

||||

|

||||

|

||||

### BREAKING CHANGES

|

||||

|

||||

* The `--full` CLI option is now obsolete and will not have any effect. Please use `--mode full` instead.

|

||||

* Authentication to the MythX service now requires that the MYTHX_API_KEY environment variable is set, either in a `.env` file located in your project's root, or directly in an environment variable.
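
The second breaking change means the API key must be resolvable from the environment before any analysis can start. A minimal sketch of such a check follows. It assumes a hypothetical helper named `requireApiKey` (not part of embark-mythx) and assumes that any `.env` file has already been loaded into `process.env` by whatever loader the project uses.

```javascript
// Hypothetical helper, not taken from embark-mythx: returns the MythX API key
// from an environment-like object, or throws a descriptive error.
function requireApiKey(env = process.env) {
  const key = env.MYTHX_API_KEY;
  if (typeof key !== 'string' || key.trim() === '') {
    throw new Error(
      'MYTHX_API_KEY is not set. Add it to a .env file in the project root ' +
        'or export it as an environment variable.'
    );
  }
  // Surrounding whitespace is stripped so a padded value still works.
  return key.trim();
}
```

Failing early with an explicit message is one possible design; the plugin itself may report a missing key differently.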

|

||||

|

||||

[bug]: https://github.com/ethereum/web3.js/issues/3283

|

||||

2

LICENSE

|

|

@ -1,6 +1,6 @@

|

|||

MIT License

|

||||

|

||||

Copyright (c) 2019 Flex Dapps

|

||||

Copyright (c) 2020 Status.im

|

||||

|

||||

Permission is hereby granted, free of charge, to any person obtaining a copy

|

||||

of this software and associated documentation files (the "Software"), to deal

|

||||

|

|

|

|||

111

README.md

|

|

@ -1,22 +1,24 @@

|

|||

|

||||

# Status Embark plugin for MythX

|

||||

|

||||

|

||||

[](https://github.com/flex-dapps/embark-mythx/blob/master/LICENSE)

|

||||

[](https://github.com/embarklabs/embark-mythx/blob/master/LICENSE)

|

||||

|

||||

|

||||

# Status Embark plugin for MythX.

|

||||

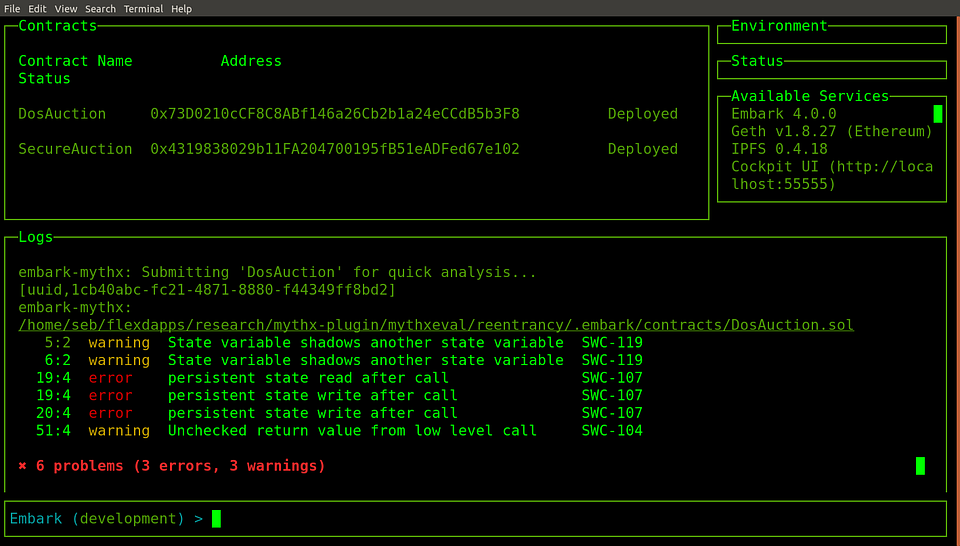

This plugin brings MythX to Status Embark. Simply call verify from the Embark console and embark-mythx sends your contracts off for analysis. It is inspired by [sabre](https://github.com/b-mueller/sabre) and uses its source mapping and reporting functions.

|

||||

|

||||

This plugin brings MythX to Status Embark. Simply call `verify` from the Embark console and `embark-mythx` sends your contracts off for analysis. It is inspired by `truffle-security` and uses its source mapping and reporting functions.

|

||||

This project was bootstrapped with [TSDX](https://github.com/jaredpalmer/tsdx).

|

||||

|

||||

## QuickStart

|

||||

|

||||

1. Create a `.env` file in the root of your project and provide your MythX login information. Free MythX accounts can be created at https://dashboard.mythx.io/#/registration.

|

||||

1. Create a `.env` file in the root of your project and provide your MythX API Key. Free MythX accounts can be created at https://dashboard.mythx.io/#/registration. Once an account is created, generate an API key at https://dashboard.mythx.io/#/console/tools.

|

||||

|

||||

```json

|

||||

MYTHX_USERNAME="<mythx-username>"

|

||||

MYTHX_PASSWORD="<password>"

|

||||

MYTHX_API_KEY="<mythx-api-key>"

|

||||

```

|

||||

|

||||

> **NOTE:** `MYTHX_ETH_ADDRESS` has been deprecated in favour of `MYTHX_USERNAME` and will be removed in future versions. Please update your .env file or your environment variables accordingly.

|

||||

> **NOTE:** `MYTHX_ETH_ADDRESS` has been deprecated in favour of `MYTHX_USERNAME` and will be removed in future versions. As of version 2.0, `MYTHX_API_KEY` is also required. Please update your .env file or your environment variables accordingly.

|

||||

|

||||

`MYTHX_USERNAME` may be either of:

|

||||

* MythX User ID (assigned by MythX API to any registered user);

|

||||

|

|

@ -29,20 +31,38 @@ For more information, please see the [MythX API Login documentation](https://api

|

|||

|

||||

```bash

|

||||

Embark (development) > verify

|

||||

embark-mythx: Running MythX analysis in background.

|

||||

embark-mythx: Submitting 'ERC20' for analysis...

|

||||

embark-mythx: Submitting 'SafeMath' for analysis...

|

||||

embark-mythx: Submitting 'Ownable' for analysis...

|

||||

Authenticating MythX user...

|

||||

Running MythX analysis...

|

||||

Analysis job submitted: https://dashboard.mythx.io/#/console/analyses/9a294be9-8656-416a-afbc-06cb299f5319

|

||||

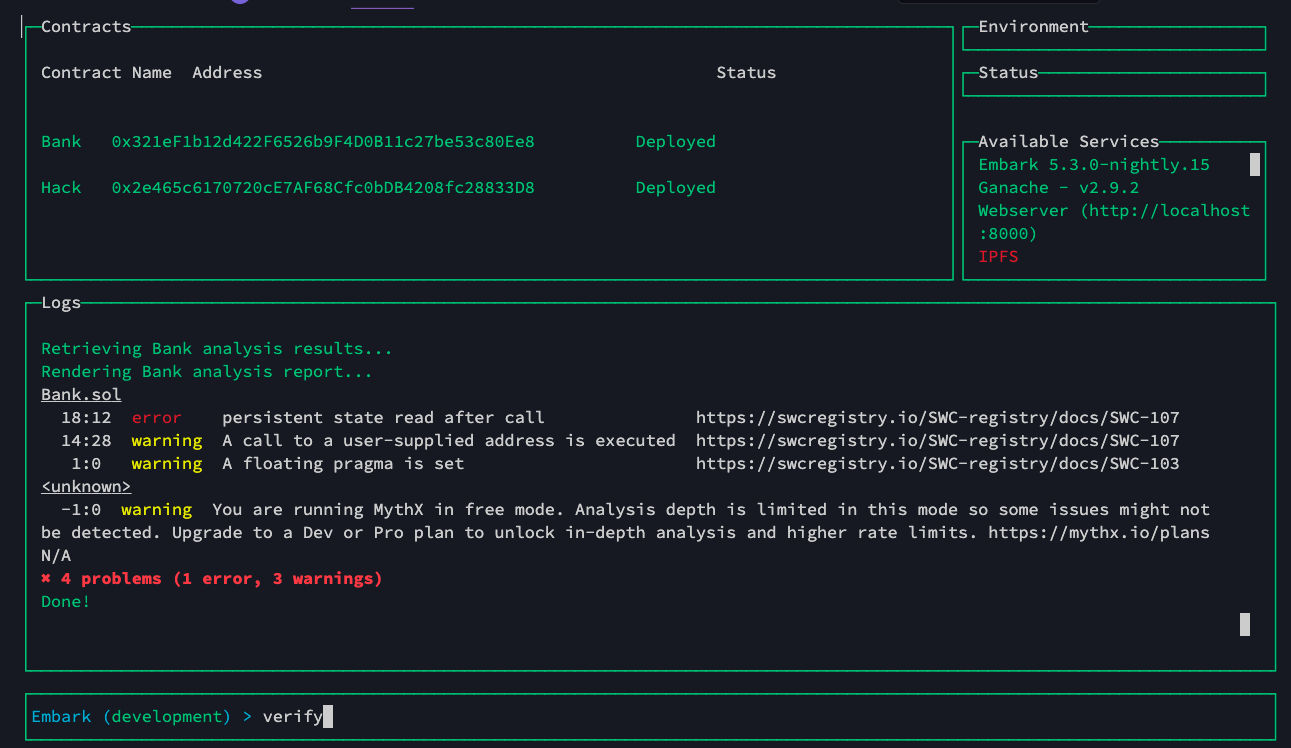

Analyzing Bank in quick mode...

|

||||

Analysis job submitted: https://dashboard.mythx.io/#/console/analyses/0741a098-6b81-43dc-af06-0416eda2a076

|

||||

Analyzing Hack in quick mode...

|

||||

Retrieving Bank analysis results...

|

||||

Retrieving Hack analysis results...

|

||||

Rendering Bank analysis report...

|

||||

|

||||

embark-mythx:

|

||||

/home/flex/mythx-plugin/testToken/.embark/contracts/ERC20.sol

|

||||

1:0 warning A floating pragma is set SWC-103

|

||||

Bank.sol

|

||||

18:12 error persistent state read after call https://swcregistry.io/SWC-registry/docs/SWC-107

|

||||

14:28 warning A call to a user-supplied address is executed https://swcregistry.io/SWC-registry/docs/SWC-107

|

||||

1:0 warning A floating pragma is set https://swcregistry.io/SWC-registry/docs/SWC-103

|

||||

|

||||

✖ 1 problem (0 errors, 1 warning)

|

||||

<unknown>

|

||||

-1:0 warning You are running MythX in free mode. Analysis depth is limited in this mode so some issues might not be detected. Upgrade to a Dev or Pro plan to unlock in-depth analysis and higher rate limits. https://mythx.io/plans N/A

|

||||

|

||||

embark-mythx: MythX analysis found vulnerabilities.

|

||||

✖ 4 problems (1 error, 3 warnings)

|

||||

|

||||

Rendering Hack analysis report...

|

||||

|

||||

Hack.sol

|

||||

1:0 warning A floating pragma is set https://swcregistry.io/SWC-registry/docs/SWC-103

|

||||

|

||||

<unknown>

|

||||

-1:0 warning You are running MythX in free mode. Analysis depth is limited in this mode so some issues might not be detected. Upgrade to a Dev or Pro plan to unlock in-depth analysis and higher rate limits. https://mythx.io/plans N/A

|

||||

|

||||

✖ 2 problems (0 errors, 2 warnings)

|

||||

|

||||

Done!

|

||||

```

|

||||

|

||||

## Installation

|

||||

|

||||

0. Install this plugin from the root of your Embark project:

|

||||

|

|

@ -64,22 +84,33 @@ $ npm i flex-dapps/embark-mythx

|

|||

```

|

||||

|

||||

## Usage

|

||||

The following usage guide can also be obtained by running `verify help` in the Embark console.

|

||||

|

||||

```bash

|

||||

verify [--full] [--debug] [--limit] [--initial-delay] [<contracts>]

|

||||

verify status <uuid>

|

||||

verify help

|

||||

Available Commands

|

||||

|

||||

Options:

|

||||

--full, -f Perform full instead of quick analysis (not available on free MythX tier).

|

||||

--debug, -d Additional debug output.

|

||||

--limit, -l Maximum number of concurrent analyses.

|

||||

--initial-delay, -i Time in seconds before first analysis status check.

|

||||

verify <options> [contracts] Runs MythX verification. If a list of contracts is specified, only those contracts will be analysed.

|

||||

verify report [--format] uuid Get the report of a completed analysis.

|

||||

verify status uuid Get the status of an already submitted analysis.

|

||||

verify list Displays a list of the last 20 submitted analyses in a table.

|

||||

verify help Display this usage guide.

|

||||

|

||||

[<contracts>] List of contracts to submit for analysis (default: all).

|

||||

status <uuid> Retrieve analysis status for given MythX UUID.

|

||||

help This help.

|

||||

Examples

|

||||

|

||||

verify --mode full SimpleStorage ERC20 Runs a full MythX verification for the SimpleStorage and ERC20 contracts only.

|

||||

verify status 0d60d6b3-e226-4192-b9c6-66b45eca3746 Gets the status of the MythX analysis with the specified uuid.

|

||||

verify report --format stylish 0d60d6b3-e226-4192-b9c6-66b45eca3746 Gets the report of the MythX analysis with the specified uuid.

|

||||

|

||||

Verify options

|

||||

|

||||

-m, --mode string Analysis mode. Options: quick, standard, deep (default: quick).

|

||||

-o, --format string Output format. Options: text, stylish, compact, table, html, json (default:

|

||||

stylish).

|

||||

-c, --no-cache-lookup Deactivate MythX cache lookups (default: false).

|

||||

-d, --debug Print MythX API request and response.

|

||||

-l, --limit number Maximum number of concurrent analyses (default: 10).

|

||||

--timeout number Timeout in secs to wait for analysis to finish (default: smart default based

|

||||

on mode).

|

||||

```

|

||||

|

||||

### Example Usage

|

||||

|

|

@ -93,4 +124,28 @@ $ verify ERC20 Ownable --full

|

|||

|

||||

# Check status of previous or ongoing analysis

|

||||

$ verify status ef5bb083-c57a-41b0-97c1-c14a54617812

|

||||

```

|

||||

```

|

||||

|

||||

## `embark-mythx` Development

|

||||

|

||||

Contributions are very welcome! If you'd like to contribute, the following commands will help you get up and running. The library was built using [TSDX](https://github.com/jaredpalmer/tsdx), so these commands are specific to TSDX.

|

||||

|

||||

### `npm run start` or `yarn start`

|

||||

|

||||

Runs the project in development/watch mode. `embark-mythx` will be rebuilt upon changes. TSDX has a special logger for your convenience. Error messages are pretty printed and formatted for compatibility with VS Code's Problems tab.

|

||||

|

||||

<img src="https://user-images.githubusercontent.com/4060187/52168303-574d3a00-26f6-11e9-9f3b-71dbec9ebfcb.gif" width="600" />

|

||||

|

||||

Your library will be rebuilt if you make edits.

|

||||

|

||||

### `npm run build` or `yarn build`

|

||||

|

||||

Bundles the package to the `dist` folder.

|

||||

The package is optimized and bundled with Rollup into multiple formats (CommonJS, UMD, and ES Module).

|

||||

|

||||

<img src="https://user-images.githubusercontent.com/4060187/52168322-a98e5b00-26f6-11e9-8cf6-222d716b75ef.gif" width="600" />

|

||||

|

||||

### `npm test` or `yarn test`

|

||||

|

||||

Runs the test watcher (Jest) in an interactive mode.

|

||||

By default, runs tests related to files changed since the last commit.

|

||||

|

|

|

|||

|

|

@ -1,56 +0,0 @@

|

|||

'use strict';

|

||||

/* This is modified from remix-lib/astWalker.js to use the newer solc AST format

|

||||

*/

|

||||

|

||||

/**

|

||||

* Crawl the given AST through the function walk(ast, callback)

|

||||

*/

|

||||

function AstWalker () {

|

||||

}

|

||||

|

||||

/**

|

||||

* visit all the AST nodes

|

||||

*

|

||||

* @param {Object} ast - AST node

|

||||

* @param {Object or Function} callback - if (Function) the function will be called for every node.

|

||||

* - if (Object) callback[<Node Type>] will be called for

|

||||

* every node of type <Node Type>. callback["*"] will be called for all other nodes.

|

||||

* in each case, if the callback returns false it does not descend into children.

|

||||

* If no callback for the current type, children are visited.

|

||||

*/

|

||||

AstWalker.prototype.walk = function (ast, callback) {

|

||||

if (callback instanceof Function) {

|

||||

callback = {'*': callback};

|

||||

}

|

||||

if (!('*' in callback)) {

|

||||

callback['*'] = function () { return true; };

|

||||

}

|

||||

if (manageCallBack(ast, callback) && ast.nodes && ast.nodes.length > 0) {

|

||||

for (const child of ast.nodes) {

|

||||

this.walk(child, callback);

|

||||

}

|

||||

}

|

||||

};

|

||||

|

||||

/**

|

||||

* walk the given @astList

|

||||

*

|

||||

* @param {Object} sourcesList - sources list (containing root AST node)

|

||||

* @param {Function} - callback used by AstWalker to compute response

|

||||

*/

|

||||

AstWalker.prototype.walkAstList = function (sourcesList, callback) {

|

||||

const walker = new AstWalker();

|

||||

for (const source of sourcesList) {

|

||||

walker.walk(source.ast, callback);

|

||||

}

|

||||

};

|

||||

|

||||

function manageCallBack (node, callback) {

|

||||

if (node.nodeType in callback) {

|

||||

return callback[node.nodeType](node);

|

||||

} else {

|

||||

return callback['*'](node);

|

||||

}

|

||||

}

|

||||

|

||||

module.exports = AstWalker;
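
The traversal rule in the removed walker (a type-specific callback wins over `'*'`, and a falsy return value stops descent into a node's children) can be sketched as a plain recursive function. This is an illustrative rewrite, not the original module; the function name `walk` and the miniature AST are made up for the example and are not real solc output.

```javascript
// Illustrative sketch of the walker's traversal rule as a plain function.
function walk(node, callbacks) {
  // Prefer a callback registered for this node type, then the '*' fallback.
  const visit = callbacks[node.nodeType] || callbacks['*'] || (() => true);
  // A falsy return value means: do not descend into this node's children.
  if (!visit(node)) return;
  for (const child of node.nodes || []) {
    walk(child, callbacks);
  }
}

// Made-up miniature AST using the newer solc field name "nodes".
const ast = {
  nodeType: 'SourceUnit',
  nodes: [
    { nodeType: 'PragmaDirective' },
    {
      nodeType: 'ContractDefinition',
      name: 'Bank',
      nodes: [{ nodeType: 'FunctionDefinition', name: 'withdraw' }]
    }
  ]
};

const visited = [];
walk(ast, {
  // Record contracts, but skip everything nested inside them.
  ContractDefinition: node => {
    visited.push(`contract ${node.name}`);
    return false;
  },
  '*': node => {
    visited.push(node.nodeType);
    return true;
  }
});
console.log(visited);
```

Because the `ContractDefinition` callback returns `false`, the nested `FunctionDefinition` node is never visited.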

|

||||

|

|

@ -1,231 +0,0 @@

|

|||

/***

|

||||

This is modified from remix-lib/src/sourceMappingDecoder.js

|

||||

|

||||

The essential difference is that remix-lib uses legacyAST and we

|

||||

use ast instead. legacyAST has field "children" while ast

|

||||

renames this to "nodes".

|

||||

***/

|

||||

|

||||

'use strict';

|

||||

var util = require('remix-lib/src/util');

|

||||

var AstWalker = require('./astWalker');

|

||||

|

||||

/**

|

||||

* Decompress the source mapping given by solc-bin.js

|

||||

*/

|

||||

function SourceMappingDecoder () {

|

||||

// s:l:f:j

|

||||

}

|

||||

|

||||

/**

|

||||

* get a list of nodes that are at the given @arg position

|

||||

*

|

||||

* @param {String} astNodeType - type of node to return

|

||||

* @param {Int} position - cursor position

|

||||

* @return {Object} ast object given by the compiler

|

||||

*/

|

||||

SourceMappingDecoder.prototype.nodesAtPosition = nodesAtPosition;

|

||||

|

||||

/**

|

||||

* Decode the source mapping for the given @arg index

|

||||

*

|

||||

* @param {Integer} index - source mapping index to decode

|

||||

* @param {String} mapping - compressed source mapping given by solc-bin

|

||||

* @return {Object} returns the decompressed source mapping for the given index {start, length, file, jump}

|

||||

*/

|

||||

SourceMappingDecoder.prototype.atIndex = atIndex;

|

||||

|

||||

/**

|

||||

* Decode the given @arg value

|

||||

*

|

||||

* @param {string} value - source location to decode ( should be start:length:file )

|

||||

* @return {Object} returns the decompressed source mapping {start, length, file}

|

||||

*/

|

||||

SourceMappingDecoder.prototype.decode = function (value) {

|

||||

if (value) {

|

||||

value = value.split(':');

|

||||

return {

|

||||

start: parseInt(value[0]),

|

||||

length: parseInt(value[1]),

|

||||

file: parseInt(value[2])

|

||||

};

|

||||

}

|

||||

};

|

||||

|

||||

/**

|

||||

* Decode the source mapping for the given compressed mapping

|

||||

*

|

||||

* @param {String} mapping - compressed source mapping given by solc-bin

|

||||

* @return {Array} returns the decompressed source mapping. Array of {start, length, file, jump}

|

||||

*/

|

||||

SourceMappingDecoder.prototype.decompressAll = function (mapping) {

|

||||

var map = mapping.split(';');

|

||||

var ret = [];

|

||||

for (var k in map) {

|

||||

var compressed = map[k].split(':');

|

||||

var sourceMap = {

|

||||

start: compressed[0] ? parseInt(compressed[0]) : ret[ret.length - 1].start,

|

||||

length: compressed[1] ? parseInt(compressed[1]) : ret[ret.length - 1].length,

|

||||

file: compressed[2] ? parseInt(compressed[2]) : ret[ret.length - 1].file,

|

||||

jump: compressed[3] ? compressed[3] : ret[ret.length - 1].jump

|

||||

};

|

||||

ret.push(sourceMap);

|

||||

}

|

||||

return ret;

|

||||

};
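
The decompression rule used above is that an empty field in a `s:l:f:j` entry inherits the value of the same field from the previous entry. A standalone sketch under that assumption follows; the name `decompressSourceMap` and the sample mapping string are made up for illustration. Unlike the listing, the sketch tolerates an empty first entry by falling back to an empty object.

```javascript
// Standalone sketch: expand a compressed solc source mapping string into an
// array of { start, length, file, jump } objects.
function decompressSourceMap(mapping) {
  const result = [];
  for (const entry of mapping.split(';')) {
    const [s, l, f, j] = entry.split(':');
    // Previous entry supplies defaults for any field left empty.
    const prev = result[result.length - 1] || {};
    result.push({
      start: s ? parseInt(s, 10) : prev.start,
      length: l ? parseInt(l, 10) : prev.length,
      file: f ? parseInt(f, 10) : prev.file,
      jump: j ? j : prev.jump
    });
  }
  return result;
}

// Made-up mapping with four entries; the second one is entirely empty.
const decoded = decompressSourceMap('0:10:0:-;;5:3;:::o');
console.log(decoded);
```

Passing an explicit radix to `parseInt` is a small deviation from the listing that avoids surprises with unusual numeric strings.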

|

||||

|

||||

/**

|

||||

* Retrieve line/column position of each source char

|

||||

*

|

||||

* @param {String} source - contract source code

|

||||

* @return {Array} returns an array containing offset of line breaks

|

||||

*/

|

||||

SourceMappingDecoder.prototype.getLinebreakPositions = function (source) {

|

||||

var ret = [];

|

||||

for (var pos = source.indexOf('\n'); pos >= 0; pos = source.indexOf('\n', pos + 1)) {

|

||||

ret.push(pos);

|

||||

}

|

||||

return ret;

|

||||

};

|

||||

|

||||

/**

|

||||

* Retrieve the line/column position for the given source mapping

|

||||

*

|

||||

* @param {Object} sourceLocation - object containing attributes {start} and {length}

|

||||

* @param {Array} lineBreakPositions - array returned by the function 'getLinebreakPositions'

|

||||

* @return {Object} returns an object {start: {line, column}, end: {line, column}} (line/column count start at 0)

|

||||

*/

|

||||

SourceMappingDecoder.prototype.convertOffsetToLineColumn = function (sourceLocation, lineBreakPositions) {

|

||||

if (sourceLocation.start >= 0 && sourceLocation.length >= 0) {

|

||||

return {

|

||||

start: convertFromCharPosition(sourceLocation.start, lineBreakPositions),

|

||||

end: convertFromCharPosition(sourceLocation.start + sourceLocation.length, lineBreakPositions)

|

||||

};

|

||||

} else {

|

||||

return {

|

||||

start: null,

|

||||

end: null

|

||||

};

|

||||

}

|

||||

};

|

||||

|

||||

/**

|

||||

* Retrieve the first @arg astNodeType that include the source map at arg instIndex

|

||||

*

|

||||

* @param {String} astNodeType - node type that include the source map instIndex

|

||||

* @param {String} instIndex - instruction index used to retrieve the source map

|

||||

* @param {String} sourceMap - source map given by the compilation result

|

||||

* @param {Object} ast - ast given by the compilation result

|

||||

*/

|

||||

SourceMappingDecoder.prototype.findNodeAtInstructionIndex = findNodeAtInstructionIndex;

|

||||

SourceMappingDecoder.prototype.findNodeAtSourceLocation = findNodeAtSourceLocation;

|

||||

|

||||

function convertFromCharPosition (pos, lineBreakPositions) {

|

||||

var line = util.findLowerBound(pos, lineBreakPositions);

|

||||

if (lineBreakPositions[line] !== pos) {

|

||||

line += 1;

|

||||

}

|

||||

var beginColumn = line === 0 ? 0 : (lineBreakPositions[line - 1] + 1);

|

||||

var column = pos - beginColumn;

|

||||

return {

|

||||

line: line,

|

||||

column: column

|

||||

};

|

||||

}

|

||||

|

||||

function sourceLocationFromAstNode (astNode) {

|

||||

if (astNode.src) {

|

||||

var split = astNode.src.split(':');

|

||||

return {

|

||||

start: parseInt(split[0]),

|

||||

length: parseInt(split[1]),

|

||||

file: parseInt(split[2])

|

||||

};

|

||||

}

|

||||

return null;

|

||||

}

|

||||

|

||||

function findNodeAtInstructionIndex (astNodeType, instIndex, sourceMap, ast) {

|

||||

var sourceLocation = atIndex(instIndex, sourceMap);

|

||||

return findNodeAtSourceLocation(astNodeType, sourceLocation, ast);

|

||||

}

|

||||

|

||||

function findNodeAtSourceLocation (astNodeType, sourceLocation, ast) {

|

||||

var astWalker = new AstWalker();

|

||||

var callback = {};

|

||||

var found = null;

|

||||

callback['*'] = function (node) {

|

||||

const nodeLocation = sourceLocationFromAstNode(node);

|

||||

if (!nodeLocation) {

|

||||

return true;

|

||||

}

|

||||

if (nodeLocation.start <= sourceLocation.start && nodeLocation.start + nodeLocation.length >= sourceLocation.start + sourceLocation.length) {

|

||||

if (astNodeType === node.nodeType) {

|

||||

found = node;

|

||||

return false;

|

||||

} else {

|

||||

return true;

|

||||

}

|

||||

} else {

|

||||

return false;

|

||||

}

|

||||

};

|

||||

astWalker.walk(ast, callback);

|

||||

return found;

|

||||

}

|

||||

|

||||

function nodesAtPosition (astNodeType, position, ast) {

|

||||

var astWalker = new AstWalker();

|

||||

var callback = {};

|

||||

var found = [];

|

||||

callback['*'] = function (node) {

|

||||

var nodeLocation = sourceLocationFromAstNode(node);

|

||||

if (!nodeLocation) {

|

||||

return;

|

||||

}

|

||||

if (nodeLocation.start <= position && nodeLocation.start + nodeLocation.length >= position) {

|

||||

if (!astNodeType || astNodeType === node.name) {

|

||||

found.push(node);

|

||||

if (astNodeType) return false;

|

||||

}

|

||||

return true;

|

||||

} else {

|

||||

return false;

|

||||

}

|

||||

};

|

||||

astWalker.walk(ast.ast, callback);

|

||||

return found;

|

||||

}

|

||||

|

||||

function atIndex (index, mapping) {

|

||||

var ret = {};

|

||||

var map = mapping.split(';');

|

||||

if (index >= map.length) {

|

||||

index = map.length - 1;

|

||||

}

|

||||

for (var k = index; k >= 0; k--) {

|

||||

var current = map[k];

|

||||

if (!current.length) {

|

||||

continue;

|

||||

}

|

||||

current = current.split(':');

|

||||

if (ret.start === undefined && current[0] && current[0] !== '-1' && current[0].length) {

|

||||

ret.start = parseInt(current[0]);

|

||||

}

|

||||

if (ret.length === undefined && current[1] && current[1] !== '-1' && current[1].length) {

|

||||

ret.length = parseInt(current[1]);

|

||||

}

|

||||

if (ret.file === undefined && current[2] && current[2] !== '-1' && current[2].length) {

|

||||

ret.file = parseInt(current[2]);

|

||||

}

|

||||

if (ret.jump === undefined && current[3] && current[3].length) {

|

||||

ret.jump = current[3];

|

||||

}

|

||||

if (ret.start !== undefined && ret.length !== undefined && ret.file !== undefined && ret.jump !== undefined) {

|

||||

break;

|

||||

}

|

||||

}

|

||||

return ret;

|

||||

}

|

||||

|

||||

module.exports = SourceMappingDecoder;
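
The offset-to-position conversion in this removed decoder combines two steps: collect the offsets of all line breaks, then count how many of them lie strictly before a character offset. A self-contained sketch follows. It swaps the binary search from `remix-lib` (`util.findLowerBound`) for a simple linear scan, and the names `linebreakPositions` and `toLineColumn` are hypothetical.

```javascript
// Collect the character offsets of every '\n' in the source.
function linebreakPositions(source) {
  const positions = [];
  for (let pos = source.indexOf('\n'); pos >= 0; pos = source.indexOf('\n', pos + 1)) {
    positions.push(pos);
  }
  return positions;
}

// Convert a character offset to a zero-based { line, column } pair.
function toLineColumn(pos, breaks) {
  // The line index equals the number of line breaks strictly before pos.
  let line = 0;
  while (line < breaks.length && breaks[line] < pos) line++;
  // The line starts one character after the previous line break.
  const lineStart = line === 0 ? 0 : breaks[line - 1] + 1;
  return { line, column: pos - lineStart };
}

// Made-up two-line source used only for the demonstration.
const source = 'pragma solidity ^0.5.0;\ncontract Bank {\n}\n';
const breaks = linebreakPositions(source);
const contractOffset = source.indexOf('contract');
console.log(toLineColumn(contractOffset, breaks));
```

The linear scan is easier to read than a binary search; for large sources with many lookups, a binary search over the sorted break offsets would be the more efficient choice.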

|

||||

|

|

@ -0,0 +1,170 @@

|

|||

const separator = '-'.repeat(20);

|

||||

const indent = ' '.repeat(4);

|

||||

|

||||

const roles = {

|

||||

creator: 'CREATOR',

|

||||

attacker: 'ATTACKER',

|

||||

other: 'USER'

|

||||

};

|

||||

|

||||

const textFormatter = {};

|

||||

|

||||

textFormatter.strToInt = str => parseInt(str, 10);

|

||||

|

||||

textFormatter.guessAccountRoleByAddress = address => {

|

||||

const prefix = address.toLowerCase().substr(0, 10);

|

||||

|

||||

if (prefix === '0xaffeaffe') {

|

||||

return roles.creator;

|

||||

} else if (prefix === '0xdeadbeef') {

|

||||

return roles.attacker;

|

||||

}

|

||||

|

||||

return roles.other;

|

||||

};

|

||||

|

||||

textFormatter.stringifyValue = value => {

|

||||

const type = typeof value;

|

||||

|

||||

if (type === 'number') {

|

||||

return String(value);

|

||||

} else if (type === 'string') {

|

||||

return value;

|

||||

} else if (value === null) {

|

||||

return 'null';

|

||||

}

|

||||

|

||||

return JSON.stringify(value);

|

||||

};

|

||||

|

||||

textFormatter.formatTestCaseSteps = (steps, fnHashes = {}) => {

|

||||

const output = [];

|

||||

|

||||

for (let s = 0, n = 0; s < steps.length; s++) {

|

||||

const step = steps[s];

|

||||

|

||||

/**

|

||||

* Empty address means "contract creation" transaction.

|

||||

*

|

||||

* Skip it to not spam.

|

||||

*/

|

||||

if (step.address === '') {

|

||||

continue;

|

||||

}

|

||||

|

||||

n++;

|

||||

|

||||

const type = textFormatter.guessAccountRoleByAddress(step.origin);

|

||||

|

||||

const fnHash = step.input.substr(2, 8);

|

||||

const fnName = fnHashes[fnHash] || step.name || '<N/A>';

|

||||

const fnDesc = `${fnName} [ ${fnHash} ]`;

|

||||

|

||||

output.push(

|

||||

`Tx #${n}:`,

|

||||

indent + `Origin: ${step.origin} [ ${type} ]`,

|

||||

indent + `Function: ${textFormatter.stringifyValue(fnDesc)}`,

|

||||

indent + `Calldata: ${textFormatter.stringifyValue(step.input)}`

|

||||

);

|

||||

|

||||

if ('decodedInput' in step) {

|

||||

output.push(`${indent}Decoded Calldata: ${step.decodedInput}`);

|

||||

}

|

||||

|

||||

output.push(

|

||||

`${indent}Value: ${textFormatter.stringifyValue(step.value)}`,

|

||||

''

|

||||

);

|

||||

}

|

||||

|

||||

return output.join('\n').trimRight();

|

||||

};

|

||||

|

||||

textFormatter.formatTestCase = (testCase, fnHashes) => {

|

||||

const output = [];

|

||||

|

||||

if (testCase.steps) {

|

||||

const content = textFormatter.formatTestCaseSteps(testCase.steps, fnHashes);

|

||||

|

||||

if (content) {

|

||||

output.push('Transaction Sequence:', '', content);

|

||||

}

|

||||

}

|

||||

|

||||

return output.length ? output.join('\n') : undefined;

|

||||

};

|

||||

|

||||

textFormatter.getCodeSample = (source, src) => {

|

||||

const [start, length] = src.split(':').map(textFormatter.strToInt);

|

||||

|

||||

return source.substr(start, length);

|

||||

};

|

||||

|

||||

textFormatter.formatLocation = message => {

|

||||

const start = `${message.line}:${message.column}`;

|

||||

const finish = `${message.endLine}:${message.endCol}`;

|

||||

|

||||

return `from ${start} to ${finish}`;

|

||||

};

|

||||

|

||||

textFormatter.formatMessage = (message, filePath, sourceCode, fnHashes) => {

|

||||

const { mythxIssue, mythxTextLocations } = message;

|

||||

const output = [];

|

||||

|

||||

output.push(

|

||||

`==== ${mythxIssue.swcTitle || 'N/A'} ====`,

|

||||

`Severity: ${mythxIssue.severity}`,

|

||||

`File: ${filePath}`

|

||||

);

|

||||

|

||||

if (message.ruleId !== 'N/A') {

|

||||

output.push(`Link: ${message.ruleId}`);

|

||||

}

|

||||

|

||||

output.push(

|

||||

separator,

|

||||

mythxIssue.description.head,

|

||||

mythxIssue.description.tail

|

||||

);

|

||||

|

||||

const code = mythxTextLocations.length

|

||||

? textFormatter.getCodeSample(sourceCode, mythxTextLocations[0].sourceMap)

|

||||

: undefined;

|

||||

|

||||

output.push(

|

||||

separator,

|

||||

`Location: ${textFormatter.formatLocation(message)}`,

|

||||

'',

|

||||

code || '<code not available>'

|

||||

);

|

||||

|

||||

const testCases = mythxIssue.extra && mythxIssue.extra.testCases;

|

||||

|

||||

if (testCases) {

|

||||

for (const testCase of testCases) {

|

||||

const content = textFormatter.formatTestCase(testCase, fnHashes);

|

||||

|

||||

if (content) {

|

||||

output.push(separator, content);

|

||||

}

|

||||

}

|

||||

}

|

||||

|

||||

return output.join('\n');

|

||||

};

|

||||

|

||||

textFormatter.formatResult = result => {

|

||||

const { filePath, sourceCode, functionHashes } = result;

|

||||

|

||||

return result.messages

|

||||

.map(message =>

|

||||

textFormatter.formatMessage(message, filePath, sourceCode, functionHashes)

|

||||

)

|

||||

.join('\n\n');

|

||||

};

|

||||

|

||||

textFormatter.run = results => {

|

||||

return results.map(result => textFormatter.formatResult(result)).join('\n\n');

|

||||

};

|

||||

|

||||

module.exports = (results) => textFormatter.run(results);
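
In `formatTestCaseSteps`, the function is identified by taking the eight hex characters that follow the `0x` prefix of the calldata and looking them up in a selector table. A minimal sketch of that lookup follows; `functionSelector`, `describeCall`, and the sample table are hypothetical names and data introduced only for illustration.

```javascript
// The first 4 bytes (8 hex characters) after the `0x` prefix of the calldata
// form the function selector.
function functionSelector(calldata) {
  return calldata.slice(2, 10);
}

// Build a "name [ selector ]" label, falling back to '<N/A>' when the
// selector is not present in the table.
function describeCall(calldata, fnHashes = {}) {
  const selector = functionSelector(calldata);
  const name = fnHashes[selector] || '<N/A>';
  return `${name} [ ${selector} ]`;
}

// Sample selector table and calldata, assumed for this example only.
const fnHashes = { '2e1a7d4d': 'withdraw(uint256)' };
const calldata = '0x2e1a7d4d' + '0'.repeat(63) + '1';
console.log(describeCall(calldata, fnHashes));
```

`slice(2, 10)` selects the same characters as the `substr(2, 8)` call in the listing.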

|

||||

132

index.js

|

|

@ -1,132 +0,0 @@

|

|||

const mythx = require('./mythx')

|

||||

const commandLineArgs = require('command-line-args')

|

||||

|

||||

module.exports = function(embark) {

|

||||

|

||||

let contracts;

|

||||

|

||||

// Register for compilation results

|

||||

embark.events.on("contracts:compiled:solc", (res) => {

|

||||

contracts = res;

|

||||

});

|

||||

|

||||

embark.registerConsoleCommand({

|

||||

description: "Run MythX analysis",

|

||||

matches: (cmd) => {

|

||||

const cmdName = cmd.match(/".*?"|\S+/g)

|

||||

return (Array.isArray(cmdName) &&

|

||||

cmdName[0] === 'verify' &&

|

||||

cmdName[1] != 'help' &&

|

||||

cmdName[1] != 'status' &&

|

||||

cmdName.length >= 1)

|

||||

},

|

||||

usage: "verify [options] [contracts]",

|

||||

process: async (cmd, callback) => {

|

||||

|

||||

const cmdName = cmd.match(/".*?"|\S+/g)

|

||||

// Remove first element, as we know it's the command

|

||||

cmdName.shift()

|

||||

|

||||

let cfg = parseOptions({ "argv": cmdName })

|

||||

|

||||

try {

|

||||

embark.logger.info("Running MythX analysis in background.")

|

||||

const returnCode = await mythx.analyse(contracts, cfg, embark)

|

||||

|

||||

if (returnCode === 0) {

|

||||

return callback(null, "MythX analysis found no vulnerabilities.")

|

||||

} else if (returnCode === 1) {

|

||||

return callback("MythX analysis found vulnerabilities!", null)

|

||||

} else if (returnCode === 2) {

|

||||

return callback("Internal MythX error encountered.", null)

|

||||

} else {

|

||||

return callback(new Error("\nUnexpected Error: return value of `analyse` should be 0, 1 or 2."), null)

|

||||

}

|

||||

} catch (e) {

|

||||

return callback(e, "ERR: " + e.message)

|

||||

}

|

||||

}

|

||||

})

|

||||

|

||||

embark.registerConsoleCommand({

|

||||

description: "Help",

|

||||

matches: (cmd) => {

|

||||

const cmdName = cmd.match(/".*?"|\S+/g)

|

||||

return (Array.isArray(cmdName) &&

|

||||

(cmdName[0] === 'verify' &&

|

||||

cmdName[1] === 'help'))

|

||||

},

|

||||

usage: "verify help",

|

||||

process: (cmd, callback) => {

|

||||

return callback(null, help())

|

||||

}

|

||||

})

|

||||

|

||||

function help() {

|

||||

return (

|

||||

"Usage:\n" +

|

||||

"\tverify [--full] [--debug] [--limit] [--initial-delay] [<contracts>]\n" +

|

||||

"\tverify status <uuid>\n" +

|

||||

"\tverify help\n" +

|

||||

"\n" +

|

||||

"Options:\n" +

|

||||

"\t--full, -f\t\t\tPerform full rather than quick analysis.\n" +

|

||||

"\t--debug, -d\t\t\tAdditional debug output.\n" +

|

||||

"\t--limit, -l\t\t\tMaximum number of concurrent analyses.\n" +

|

||||

"\t--initial-delay, -i\t\tTime in seconds before first analysis status check.\n" +

|

||||

"\n" +

|

||||

"\t[<contracts>]\t\t\tList of contracts to submit for analysis (default: all).\n" +

|

||||

"\tstatus <uuid>\t\t\tRetrieve analysis status for given MythX UUID.\n" +

|

||||

"\thelp\t\t\t\tThis help.\n"

|

||||

)

|

||||

}

|

||||

|

||||

embark.registerConsoleCommand({

|

||||

description: "Check MythX analysis status",

|

||||

matches: (cmd) => {

|

||||

const cmdName = cmd.match(/".*?"|\S+/g)

|

||||

return (Array.isArray(cmdName) &&

|

||||

cmdName[0] === 'verify' &&

|

||||

cmdName[1] === 'status' &&

|

||||

cmdName.length === 3)

|

||||

},

|

||||

usage: "verify status <uuid>",

|

||||

process: async (cmd, callback) => {

|

||||

const cmdName = cmd.match(/".*?"|\S+/g)

|

||||

|

||||

try {

|

||||

const returnCode = await mythx.getStatus(cmdName[2], embark)

|

||||

|

||||

if (returnCode === 0) {

|

||||

return callback(null, "returnCode: " + returnCode)

|

||||

} else if (returnCode === 1) {

|

||||

return callback()

|

||||

} else {

|

||||

return callback(new Error("Unexpected Error: return value of `getStatus` should be either 0 or 1."), null)

|

||||

}

|

||||

} catch (e) {

|

||||

return callback(e, "ERR: " + e.message)

|

||||

}

|

||||

}

|

||||

})

|

||||

|

||||

function parseOptions(options) {

|

||||

const optionDefinitions = [

|

||||

{ name: 'full', alias: 'f', type: Boolean },

|

||||

{ name: 'debug', alias: 'd', type: Boolean },

|

||||

{ name: 'limit', alias: 'l', type: Number },

|

||||

{ name: 'initial-delay', alias: 'i', type: Number },

|

||||

{ name: 'contracts', type: String, multiple: true, defaultOption: true }

|

||||

]

|

||||

|

||||

const parsed = commandLineArgs(optionDefinitions, options)

|

||||

|

||||

if(parsed.full) {

|

||||

parsed.analysisMode = "full"

|

||||

} else {

|

||||

parsed.analysisMode = "quick"

|

||||

}

|

||||

|

||||

return parsed

|

||||

}

|

||||

}
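The console handlers above split the raw command string with the regular expression `/".*?"|\S+/g` and then match on the first tokens. A minimal sketch of that tokenization in isolation follows; the `tokenize` and `isStatusCommand` helper names and the sample commands are illustrative assumptions, not part of the plugin.

```javascript
// Hypothetical helper (not in the plugin): split a console command into tokens,
// keeping double-quoted segments together. The quotes stay part of the token.
function tokenize(cmd) {
  // String.prototype.match returns null when nothing matches, so fall back to [].
  return cmd.match(/".*?"|\S+/g) || [];
}

const tokens = tokenize('verify --debug "My Contract"');
console.log(tokens);

// Mirrors the `verify status <uuid>` matcher: exactly three tokens.
function isStatusCommand(cmd) {
  const t = tokenize(cmd);
  return t.length === 3 && t[0] === 'verify' && t[1] === 'status';
}

console.log(isStatusCommand('verify status 1234'));
```

Falling back to an empty array avoids calling `shift()` or indexing on `null` when the command string is empty.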

|

||||

|

|

@ -1,416 +0,0 @@

|

|||

'use strict';

|

||||

|

||||

const path = require('path');

|

||||

const assert = require('assert');

|

||||

const SourceMappingDecoder = require('remix-lib/src/sourceMappingDecoder');

|

||||

const srcmap = require('./srcmap');

|

||||

const mythx = require('./mythXUtil');

|

||||

|

||||

const mythx2Severity = {

|

||||

High: 2,

|

||||

Medium: 1,

|

||||

};

|

||||

|

||||

const isFatal = (fatal, severity) => fatal || severity === 2;

|

||||

|

||||

const getUniqueMessages = messages => {
  const jsonValues = messages.map(m => JSON.stringify(m));
  const uniqueValues = jsonValues.reduce((accum, curr) => {
    if (accum.indexOf(curr) === -1) {
      accum.push(curr);
    }
    return accum;
  }, []);

  return uniqueValues.map(v => JSON.parse(v));
};

|

||||

|

||||

const calculateErrors = messages =>

|

||||

messages.reduce((acc, { fatal, severity }) => isFatal(fatal , severity) ? acc + 1: acc, 0);

|

||||

|

||||

const calculateWarnings = messages =>

|

||||

messages.reduce((acc, { fatal, severity }) => !isFatal(fatal , severity) ? acc + 1: acc, 0);

|

||||

|

||||

|

||||

const getUniqueIssues = issues =>

|

||||

issues.map(({ messages, ...restProps }) => {

|

||||

const uniqueMessages = getUniqueMessages(messages);

|

||||

const warningCount = calculateWarnings(uniqueMessages);

|

||||

const errorCount = calculateErrors(uniqueMessages);

|

||||

|

||||

return {

|

||||

...restProps,

|

||||

messages: uniqueMessages,

|

||||

errorCount,

|

||||

warningCount,

|

||||

};

|

||||

});
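The helpers above deduplicate messages by their JSON form and then recount errors and warnings with `isFatal`. The sketch below shows the same idea on hypothetical sample data; it swaps the reduce/indexOf loop for a `Set`, which is an alternative and not what the removed file does, and it assumes messages are plain JSON-serialisable objects with a stable key order.

```javascript
// Same rule as the module: a message is an error when it is flagged fatal
// or has severity 2; everything else counts as a warning.
const isFatal = (fatal, severity) => fatal || severity === 2;

// Illustrative alternative: deduplicate via a Set of JSON strings.
const dedupe = messages =>
  [...new Set(messages.map(m => JSON.stringify(m)))].map(s => JSON.parse(s));

// Hypothetical messages, for illustration only.
const messages = [
  { ruleId: 'SWC-101', severity: 2, fatal: false, line: 10 },
  { ruleId: 'SWC-101', severity: 2, fatal: false, line: 10 }, // exact duplicate
  { ruleId: 'SWC-103', severity: 1, fatal: false, line: 1 },
];

const unique = dedupe(messages);
const errorCount = unique.filter(m => isFatal(m.fatal, m.severity)).length;
const warningCount = unique.length - errorCount;
console.log(unique.length, errorCount, warningCount);
```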

|

||||

|

||||

const keepIssueInResults = function (issue, config) {

|

||||

|

||||

// omit this issue if its severity is below the config threshold

|

||||

if (config.severityThreshold && issue.severity < config.severityThreshold) {

|

||||

return false;

|

||||

}

|

||||

|

||||

// omit this if its swc code is included in the blacklist

|

||||

if (config.swcBlacklist && config.swcBlacklist.includes(issue.ruleId)) {

|

||||

return false;

|

||||

}

|

||||

|

||||

// if an issue hasn't been filtered out by severity or blacklist, then keep it

|

||||

return true;

|

||||

|

||||

};
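A short usage sketch of the filter above may help. The function body is repeated so the snippet runs on its own; the config values and issue objects are hypothetical, and the numeric severities assume the ESLint-style 1/2 values produced by `issue2EsLint`.

```javascript
// Copy of the filter logic shown above, repeated so the snippet is self-contained.
function keepIssueInResults(issue, config) {
  // Drop issues below the configured severity threshold.
  if (config.severityThreshold && issue.severity < config.severityThreshold) {
    return false;
  }
  // Drop issues whose SWC id is blacklisted.
  if (config.swcBlacklist && config.swcBlacklist.includes(issue.ruleId)) {
    return false;
  }
  return true;
}

// Hypothetical config and issues, for illustration only.
const config = { severityThreshold: 2, swcBlacklist: ['SWC-103'] };
const issues = [
  { ruleId: 'SWC-101', severity: 2 },
  { ruleId: 'SWC-103', severity: 2 }, // blacklisted
  { ruleId: 'SWC-108', severity: 1 }, // below threshold
];

const kept = issues.filter(issue => keepIssueInResults(issue, config));
console.log(kept.map(i => i.ruleId));
```

With an empty config object neither check applies, so every issue is kept.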

|

||||

|

||||

|

||||

class MythXIssues {

|

||||

constructor(buildObj, config) {

|

||||

this.issues = [];

|

||||

this.logs = [];

|

||||

this.buildObj = mythx.embark2MythXJSON(buildObj);

|

||||

this.debug = config.debug;

|

||||

this.logger = config.logger;

|

||||

this.sourceMap = this.buildObj.sourceMap;

|

||||

this.sourcePath = buildObj.sourcePath;

|

||||

this.deployedSourceMap = this.buildObj.deployedSourceMap;

|

||||

this.offset2InstNum = srcmap.makeOffset2InstNum(this.buildObj.deployedBytecode);

|

||||

this.contractName = buildObj.contractName;

|

||||

this.sourceMappingDecoder = new SourceMappingDecoder();

|

||||

this.asts = this.mapAsts(this.buildObj.sources);

|

||||

this.lineBreakPositions = this.mapLineBreakPositions(this.sourceMappingDecoder, this.buildObj.sources);

|

||||

}

|

||||

|

||||

setIssues(issueGroups) {

|

||||

for (let issueGroup of issueGroups) {

|

||||

if (issueGroup.sourceType === 'solidity-file' &&

|

||||

issueGroup.sourceFormat === 'text') {

|

||||

const filteredIssues = [];

|

||||

for (const issue of issueGroup.issues) {

|

||||

for (const location of issue.locations) {

|

||||

if (!this.isIgnorable(location.sourceMap)) {

|

||||

filteredIssues.push(issue);

|

||||

}

|

||||

}

|

||||

}

|

||||

issueGroup.issues = filteredIssues;

|

||||

}

|

||||

}

|

||||

const remappedIssues = issueGroups.map(mythx.remapMythXOutput);

|

||||

this.issues = remappedIssues

|

||||

.reduce((acc, curr) => acc.concat(curr), []);

|

||||

issueGroups.forEach(issueGroup => {

|

||||

this.logs = this.logs.concat((issueGroup.meta && issueGroup.meta.logs) || []);

|

||||

});

|

||||

}

|

||||

|

||||

mapLineBreakPositions(decoder, sources) {

|

||||

const result = {};

|

||||

|

||||

Object.entries(sources).forEach(([ sourcePath, { source } ]) => {

|

||||

if (source) {

|

||||

result[sourcePath] = decoder.getLinebreakPositions(source);

|

||||

}

|

||||

});

|

||||

|

||||

return result;

|

||||

}

|

||||

|

||||

mapAsts (sources) {

|

||||

const result = {};

|

||||

Object.entries(sources).forEach(([ sourcePath, { ast } ]) => {

|

||||

result[sourcePath] = ast;

|

||||

});

|

||||

|

||||

return result;

|

||||

}

|

||||

|

||||

isIgnorable(sourceMapLocation) {

|

||||

const basename = path.basename(this.sourcePath);

|

||||

if (!( basename in this.asts)) {

|

||||

return false;

|

||||

}

|

||||

const ast = this.asts[basename];

|

||||

const node = srcmap.isVariableDeclaration(sourceMapLocation, ast);

|

||||

if (node && srcmap.isDynamicArray(node)) {

|

||||

if (this.debug) {

|

||||

// this might break if logger is undefined.

|

||||

const logger = this.logger || console;

|

||||

logger.log('**debug: Ignoring Mythril issue around ' +

|

||||

'dynamically-allocated array.');

|

||||

}

|

||||

return true;

|

||||

} else {

|

||||

return false;

|

||||

}

|

||||

}

|

||||

|

||||

byteOffset2lineColumn(bytecodeOffset, lineBreakPositions) {

|

||||

const instNum = this.offset2InstNum[bytecodeOffset];

|

||||

const sourceLocation = this.sourceMappingDecoder.atIndex(instNum, this.deployedSourceMap);

|

||||

assert(sourceLocation, 'sourceMappingDecoder.atIndex() should not return null');

|

||||

const loc = this.sourceMappingDecoder

|

||||

.convertOffsetToLineColumn(sourceLocation, lineBreakPositions || []);

|

||||

|

||||

if (loc.start) {

|

||||

loc.start.line++;

|

||||

}

|

||||

if (loc.end) {

|

||||

loc.end.line++;

|

||||

}

|

||||

|

||||

const start = loc.start || { line: -1, column: 0 };

|

||||

const end = loc.end || {};

|

||||

|

||||

return [start, end];

|

||||

}

|

||||

|

||||

textSrcEntry2lineColumn(srcEntry, lineBreakPositions) {

|

||||

const ary = srcEntry.split(':');

|

||||

const sourceLocation = {

|

||||

length: parseInt(ary[1], 10),

|

||||

start: parseInt(ary[0], 10),

|

||||

};

|

||||

const loc = this.sourceMappingDecoder

|

||||

.convertOffsetToLineColumn(sourceLocation, lineBreakPositions || []);

|

||||

if (loc.start) {

|

||||

loc.start.line++;

|

||||

}

|

||||

if (loc.end) {

|

||||

loc.end.line++;

|

||||

}

|

||||

return [loc.start, loc.end];

|

||||

}

|

||||

|

||||

issue2EsLint(issue, spaceLimited, sourceFormat, sourceName) {

|

||||

const esIssue = {

|

||||

fatal: false,

|

||||

ruleId: issue.swcID,

|

||||

message: spaceLimited ? issue.description.head : `${issue.description.head} ${issue.description.tail}`,

|

||||

severity: mythx2Severity[issue.severity] || 1,

|

||||

mythXseverity: issue.severity,

|

||||

line: -1,

|

||||

column: 0,

|

||||

endLine: -1,

|

||||

endCol: 0,

|

||||

};

|

||||

|

||||

let startLineCol, endLineCol;

|

||||

const lineBreakPositions = this.lineBreakPositions[sourceName];

|

||||

|

||||

if (sourceFormat === 'evm-byzantium-bytecode') {

|

||||

// Pick out first byteCode offset value

|

||||

const offset = parseInt(issue.sourceMap.split(':')[0], 10);

|

||||

[startLineCol, endLineCol] = this.byteOffset2lineColumn(offset, lineBreakPositions);

|

||||

} else if (sourceFormat === 'text') {

|

||||

// Pick out first srcEntry value

|

||||

const srcEntry = issue.sourceMap.split(';')[0];

|

||||

[startLineCol, endLineCol] = this.textSrcEntry2lineColumn(srcEntry, lineBreakPositions);

|

||||

}

|

||||

if (startLineCol) {

|

||||

esIssue.line = startLineCol.line;

|

||||

esIssue.column = startLineCol.column;

|

||||

esIssue.endLine = endLineCol.line;

|

||||

esIssue.endCol = endLineCol.column;

|

||||

}

|

||||

|

||||

return esIssue;

|

||||

}

|

||||

|

||||

convertMythXReport2EsIssue(report, config, spaceLimited) {

|

||||

const { issues, sourceFormat, source } = report;

|

||||

const result = {

|

||||

errorCount: 0,

|

||||

warningCount: 0,

|

||||

fixableErrorCount: 0,

|

||||

fixableWarningCount: 0,

|

||||

filePath: source,

|

||||

};

|

||||

const sourceName = path.basename(source);

|

||||

result.messages = issues

|

||||

.map(issue => this.issue2EsLint(issue, spaceLimited, sourceFormat, sourceName))

|

||||

.filter(issue => keepIssueInResults(issue, config));

|

||||

|

||||

result.warningCount = result.messages.reduce((acc, { fatal, severity }) =>

|

||||

!isFatal(fatal , severity) ? acc + 1: acc, 0);

|

||||

|

||||

result.errorCount = result.messages.reduce((acc, { fatal, severity }) =>

|

||||

isFatal(fatal , severity) ? acc + 1: acc, 0);

|

||||

|

||||

return result;

|

||||

}

|

||||

|

||||

getEslintIssues(config, spaceLimited = false) {

|

||||

return this.issues.map(report => this.convertMythXReport2EsIssue(report, config, spaceLimited));

|

||||

}

|

||||

}

|

||||

|

||||

function doReport(config, objects, errors, notAnalyzedContracts) {

|

||||

let ret = 0;

|

||||

|

||||

// Return true if we should show the log.

|

||||

// Ignore logs with log.level "info" unless the "debug" flag

|

||||

// has been set.

|

||||

function showLog(log) {

|

||||

return config.debug || (log.level !== 'info');

|

||||

}

|

||||

|

||||

// Return 1 if vulnerabilities were found.

|

||||

objects.forEach(obj => {
  obj.issues.forEach(report => {
    ret = report.issues.length > 0 ? 1 : ret;
  })
})

|

||||

|

||||

const spaceLimited = ['tap', 'markdown', 'json'].indexOf(config.style) === -1;

|

||||

const eslintIssues = objects

|

||||

.map(obj => obj.getEslintIssues(config, spaceLimited))

|

||||

.reduce((acc, curr) => acc.concat(curr), []);

|

||||

|

||||

// FIXME: temporary solution until backend will return correct filepath and output.

|

||||

const eslintIssuesByBaseName = groupEslintIssuesByBasename(eslintIssues);

|

||||

|

||||

const uniqueIssues = getUniqueIssues(eslintIssuesByBaseName);

|

||||

printSummary(objects, uniqueIssues, config.logger);

|

||||

const formatter = getFormatter(config.style);

|

||||

const report = formatter(uniqueIssues);

|

||||

config.logger.info(report);

|

||||

|

||||

const logGroups = objects.map(obj => { return {'sourcePath': obj.sourcePath, 'logs': obj.logs, 'uuid': obj.uuid};})

|

||||

.reduce((acc, curr) => acc.concat(curr), []);

|

||||

|

||||

const haveLogs = logGroups.some(logGroup => logGroup.logs.some(log => showLog(log)));

|

||||

|

||||

if (haveLogs) {

|

||||

ret = 1;

|

||||

config.logger.info('MythX Logs:');

|

||||

logGroups.forEach(logGroup => {

|

||||

config.logger.info(`\n${logGroup.sourcePath}`.yellow);

|

||||

config.logger.info(`UUID: ${logGroup.uuid}`.yellow);

|

||||

logGroup.logs.forEach(log => {

|

||||

if (showLog(log)) {

|

||||

config.logger.info(`${log.level}: ${log.msg}`);

|

||||

}

|

||||

});

|

||||

});

|

||||

}

|

||||

|

||||

if (errors.length > 0) {

|

||||

ret = 2;

|

||||

config.logger.error('Internal MythX errors encountered:'.red);

|

||||

errors.forEach(err => {

|

||||

config.logger.error(err.error || err);

|

||||

if (config.debug > 1 && err.stack) {

|

||||

config.logger.info(err.stack);

|

||||

}

|

||||

});

|

||||

}

|

||||

|

||||

return ret;

|

||||

}

|

||||

|

||||

function printSummary(objects, uniqueIssues, logger) {

|

||||

if (objects && objects.length) {

|

||||

logger.info('\nMythX Report Summary'.underline.bold);

|

||||

|

||||

const groupBy = 'groupId';

|

||||

const groups = objects.reduce((accum, curr) => {

|

||||

const issue = uniqueIssues.find((issue) => issue.filePath === curr.buildObj.mainSource);

|

||||

const issueCount = issue.errorCount + issue.warningCount;

|

||||

const marking = issueCount > 0 ? '✖'.red : '✔︎'.green;

|

||||

(accum[curr[groupBy]] = accum[curr[groupBy]] || []).push(` ${marking} ${issue.filePath.cyan}: ${issueCount} issues ${curr.uuid.dim.bold}`);

|

||||

return accum;

|

||||

}, {});

|

||||

|

||||

let count = 0;

|

||||

Object.keys(groups).forEach((groupId) => {

|

||||

logger.info(` ${++count}. Group ${groupId.bold.dim}:`);

|

||||

Object.values(groups[groupId]).forEach((contract) => {

|

||||

logger.info(contract);

|

||||

});

|

||||

});

|

||||

}

|

||||

}

|

||||

|

||||

function getFormatter(style) {

|

||||

const formatterName = style || 'stylish';

|

||||

try {

|

||||

const frmtr = require(`eslint/lib/formatters/${formatterName}`);

|

||||

return frmtr

|

||||

} catch (ex) {

|

||||

ex.message = `\nThere was a problem loading formatter option: ${style} \nError: ${

|

||||

ex.message

|

||||

}`;

|

||||

throw ex;

|

||||

}

|

||||

}

|

||||

|

||||

const groupEslintIssuesByBasename = issues => {

|

||||

const path = require('path');

|

||||

const mappedIssues = issues.reduce((accum, issue) => {

|

||||

const {

|

||||

errorCount,

|

||||

warningCount,

|

||||

fixableErrorCount,

|

||||

fixableWarningCount,

|

||||

filePath,

|

||||

messages,

|

||||

} = issue;

|

||||

|

||||

const basename = path.basename(filePath);

|

||||

if (!accum[basename]) {

|

||||

accum[basename] = {

|

||||

errorCount: 0,

|

||||

warningCount: 0,

|

||||

fixableErrorCount: 0,

|

||||

fixableWarningCount: 0,

|

||||

filePath: filePath,

|

||||

messages: [],

|

||||

};

|

||||

}

|

||||

accum[basename].errorCount += errorCount;

|

||||

accum[basename].warningCount += warningCount;

|

||||

accum[basename].fixableErrorCount += fixableErrorCount;

|

||||

accum[basename].fixableWarningCount += fixableWarningCount;

|

||||

accum[basename].messages = accum[basename].messages.concat(messages);

|

||||

return accum;

|

||||

}, {});

|

||||

|

||||

const issueGroups = Object.values(mappedIssues);

|

||||

for (const group of issueGroups) {

|

||||

group.messages = group.messages.sort(function(mess1, mess2) {

|

||||

return compareMessLCRange(mess1, mess2);

|

||||

});

|

||||

|

||||

}

|

||||

return issueGroups;

|

||||

};

|

||||

|

||||

function compareMessLCRange(mess1, mess2) {

|

||||

const c = compareLineCol(mess1.line, mess1.column, mess2.line, mess2.column);

|

||||

return c !== 0 ? c : compareLineCol(mess1.endLine, mess1.endCol, mess2.endLine, mess2.endCol);

|

||||

}

|

||||

|

||||

function compareLineCol(line1, column1, line2, column2) {

|

||||

return line1 === line2 ?

|

||||

(column1 - column2) :

|

||||

(line1 - line2);

|

||||

}
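The two comparators above order messages by start position and break ties on end position. A minimal sketch of that ordering, with the comparators repeated so it runs standalone and with hypothetical message objects:

```javascript
// Same comparison as above: order by line first, then by column.
function compareLineCol(line1, column1, line2, column2) {
  return line1 === line2 ? column1 - column2 : line1 - line2;
}

// Compare start positions first and fall back to end positions on a tie.
function compareMessLCRange(mess1, mess2) {
  const c = compareLineCol(mess1.line, mess1.column, mess2.line, mess2.column);
  return c !== 0 ? c : compareLineCol(mess1.endLine, mess1.endCol, mess2.endLine, mess2.endCol);
}

// Hypothetical messages, for illustration only.
const sorted = [
  { id: 'c', line: 12, column: 4, endLine: 12, endCol: 9 },
  { id: 'b', line: 3, column: 8, endLine: 4, endCol: 1 },
  { id: 'a', line: 3, column: 8, endLine: 3, endCol: 20 },
].sort(compareMessLCRange);

console.log(sorted.map(m => m.id));
```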

|

||||

|

||||

module.exports = {

|

||||

MythXIssues,

|

||||

keepIssueInResults,

|

||||

getUniqueIssues,

|

||||

getUniqueMessages,

|

||||

isFatal,

|

||||

doReport

|

||||

};

|

||||

185

lib/mythXUtil.js


|

|

@ -1,185 +0,0 @@

|

|||

'use strict';

|

||||

|

||||

const armlet = require('armlet')

|

||||

const fs = require('fs')
const path = require('path')

|

||||

const util = require('util');

|

||||

const srcmap = require('./srcmap');

|

||||

|

||||

const getContractFiles = directory => {

|

||||

let files = fs.readdirSync(directory)

|

||||

files = files.filter(f => f !== "ENSRegistry.json" && f !== "FIFSRegistrar.json" && f !== "Resolver.json");

|

||||

return files.map(f => path.join(directory, f))

|

||||

};

|

||||

|

||||

function getFoundContractNames(contracts, contractNames) {

|

||||

let foundContractNames = [];

|

||||

contracts.forEach(({ contractName }) => {

|

||||

if (contractNames && contractNames.indexOf(contractName) < 0) {

|

||||

return;

|

||||

}

|

||||

foundContractNames.push(contractName);

|

||||

});

|

||||

return foundContractNames;

|

||||

}

|

||||

|

||||

const getNotFoundContracts = (allContractNames, foundContracts) => {

|

||||

if (allContractNames) {

|

||||

return allContractNames.filter(function(i) {return foundContracts.indexOf(i) < 0;});

|

||||

} else {

|

||||

return [];

|

||||

}

|

||||

}

|

||||

|

||||

const buildRequestData = contractObjects => {

|

||||

|

||||

const { sources, compiler } = contractObjects;

|

||||

let allContracts = [];

|

||||

|

||||

const allSources = Object.entries(sources).reduce((accum, [sourcePath, data]) => {

|

||||

const source = fs.readFileSync(sourcePath, 'utf8')

|

||||

const { ast, legacyAST } = data;

|

||||

const key = path.basename(sourcePath);

|

||||

accum[key] = { ast, legacyAST, source };

|

||||

return accum;

|

||||

}, {});

|

||||

|

||||

Object.keys(contractObjects.contracts).forEach(function(fileKey, index) {

|

||||

const contractFile = contractObjects.contracts[fileKey];

|

||||

|

||||

Object.keys(contractFile).forEach(function(contractKey, index) {

|

||||

const contractJSON = contractFile[contractKey];

|

||||

const sourcesToInclude = Object.keys(JSON.parse(contractJSON.metadata).sources);

|

||||

const sourcesFiltered = Object.entries(allSources).filter(([filename, { ast }]) => sourcesToInclude.includes(ast.absolutePath));

|

||||

const sources = {};

|

||||

sourcesFiltered.forEach(([key, value]) => {

|

||||

sources[key] = value;

|

||||

});

|

||||

const contract = {

|

||||

contractName: contractKey,

|

||||

bytecode: contractJSON.evm.bytecode.object,

|

||||

deployedBytecode: contractJSON.evm.deployedBytecode.object,

|

||||

sourceMap: contractJSON.evm.bytecode.sourceMap,

|

||||

deployedSourceMap: contractJSON.evm.deployedBytecode.sourceMap,

|

||||

sources,

|

||||

sourcePath: fileKey

|

||||

};

|

||||

|

||||

allContracts = allContracts.concat(contract);

|

||||

});

|

||||

});

|

||||

|

||||

return allContracts;

|

||||

};

|

||||

|

||||

const embark2MythXJSON = function(embarkJSON, toolId = 'embark-mythx') {

|

||||

let {

|

||||

contractName,

|

||||

bytecode,

|

||||

deployedBytecode,

|

||||

sourceMap,

|

||||

deployedSourceMap,

|

||||

sourcePath,

|

||||

sources

|

||||

} = embarkJSON;

|

||||

|

||||

const sourcesKey = path.basename(sourcePath);

|

||||

|

||||

let sourceList = [];

|

||||

for(let key in sources) {

|

||||

sourceList.push(sources[key].ast.absolutePath);

|

||||

}

|

||||

|

||||

const mythXJSON = {

|

||||

contractName,

|

||||

bytecode,

|

||||

deployedBytecode,

|

||||

sourceMap,

|

||||

deployedSourceMap,

|

||||

mainSource: sourcesKey,

|

||||

sourceList: sourceList,

|

||||

sources,

|

||||

toolId

|

||||

}

|

||||

|

||||

return mythXJSON;

|

||||

};

|

||||

|

||||

const remapMythXOutput = mythObject => {

|

||||

const mapped = mythObject.sourceList.map(source => ({

|

||||

source,

|

||||

sourceType: mythObject.sourceType,

|

||||

sourceFormat: mythObject.sourceFormat,

|

||||

issues: [],

|

||||

}));

|

||||

|

||||

if (mythObject.issues) {

|

||||

mythObject.issues.forEach(issue => {

|

||||

issue.locations.forEach(({ sourceMap }) => {

|

||||

let sourceListIndex = parseInt(sourceMap.split(':')[2], 10);

|

||||

if (sourceListIndex === -1) {

|

||||

// FIXME: We need to decide where to attach issues

|

||||

// that don't have any file associated with them.

|

||||

// For now we'll pick 0 which is probably the main starting point

|

||||

sourceListIndex = 0;

|

||||

}

|

||||

mapped[0].issues.push({

|

||||

swcID: issue.swcID,

|

||||

swcTitle: issue.swcTitle,

|

||||

description: issue.description,

|

||||

extra: issue.extra,

|

||||

severity: issue.severity,

|

||||

sourceMap,

|

||||

});

|

||||

});

|

||||

});

|

||||

}

|

||||

|

||||

return mapped;

|

||||

};
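The remapping code above reads the third `:`-separated field of a source map entry as the source list index, and the reporting code reads the first two as start and length. A minimal sketch of parsing one entry into numbers follows; `parseSrcEntry` and the sample strings are hypothetical and only illustrate why the fields need converting before a numeric comparison.

```javascript
// Hypothetical helper: parse one "start:length:fileIndex" entry into numbers.
function parseSrcEntry(entry) {
  const [start, length, fileIndex] = entry.split(':').map(part => parseInt(part, 10));
  return { start, length, fileIndex };
}

// Several entries can be joined with ';'. Take the first one, as the reporting code does.
const first = '310:23:0;400:5:-1'.split(';')[0];
const loc = parseSrcEntry(first);
console.log(loc);

// split() yields strings, so a strict comparison against the number -1
// can only succeed after the field has been converted.
const rawField = '0:0:-1'.split(':')[2];
const isMissingFile = parseSrcEntry('0:0:-1').fileIndex === -1;
console.log(typeof rawField, isMissingFile);
```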

|

||||

|

||||

const cleanAnalyzeDataEmptyProps = (data, debug, logger) => {

|

||||

const { bytecode, deployedBytecode, sourceMap, deployedSourceMap, ...props } = data;

|

||||

const result = { ...props };

|

||||

|

||||

const unusedFields = [];

|

||||

|

||||

if (bytecode && bytecode !== '0x') {

|

||||

result.bytecode = bytecode;

|

||||

} else {

|

||||

unusedFields.push('bytecode');

|

||||

}

|

||||

|

||||

if (deployedBytecode && deployedBytecode !== '0x') {

|

||||

result.deployedBytecode = deployedBytecode;

|

||||

} else {

|

||||

unusedFields.push('deployedBytecode');

|

||||

}

|

||||

|

||||

if (sourceMap) {

|

||||

result.sourceMap = sourceMap;

|

||||

} else {

|

||||

unusedFields.push('sourceMap');

|

||||

}

|

||||

|

||||

if (deployedSourceMap) {

|

||||

result.deployedSourceMap = deployedSourceMap;

|

||||

} else {

|

||||

unusedFields.push('deployedSourceMap');

|

||||

}

|

||||

|

||||

if (debug && unusedFields.length > 0) {

|

||||

logger.debug(`${props.contractName}: Empty JSON data fields from compilation - ${unusedFields.join(', ')}`);

|

||||

}

|

||||

|

||||

return result;

|

||||

}

|

||||

|

||||

module.exports = {

|

||||

remapMythXOutput,

|

||||

embark2MythXJSON,

|

||||

buildRequestData,

|

||||

getNotFoundContracts,

|

||||

getFoundContractNames,

|

||||

getContractFiles,

|

||||

cleanAnalyzeDataEmptyProps

|

||||

}

|

||||

|

|

@ -1,78 +0,0 @@

|

|||

'use strict';

|

||||

|

||||

const assert = require('assert');

|

||||

const remixUtil = require('remix-lib/src/util');

|

||||

const SourceMappingDecoder = require('../compat/remix-lib/sourceMappingDecoder.js');

|

||||

const opcodes = require('remix-lib/src/code/opcodes');

|

||||

|

||||

module.exports = {

|

||||

isVariableDeclaration: function (srcmap, ast) {

|

||||

const sourceMappingDecoder = new SourceMappingDecoder();

|

||||